From COVID Crisis to ChatGPT: How Urgent Evidence Needs Led to Rigorous AI Evaluation

Published in Computational Sciences, Biomedical Research, and Public Health

At the height of the COVID-19 pandemic, systematic reviewers at the Norwegian Institute of Public Health (NIPH) were overwhelmed with health authorities’ needs for updated evidence to help them decide on infection prevention and control policies. Traditional review methods couldn't keep pace. For example, health authorities needed to know within a week whether they should close schools, not 12 to 24 months later, the traditional timeframe for important evidence syntheses. That pushed us to try something new.

We began experimenting with machine learning (ML) tools to support evidence synthesis, hoping to work faster without compromising quality. Results were promising enough that we established a dedicated ML team—not only to continue emergency-era innovation, but to embed ML into our routine evidence synthesis work.

This work has continued post-pandemic through several team iterations. While each had slightly different aims, all combined three core activities: horizon scanning to spot promising tools and technologies early; evaluation work to assess automated tools' benefits and harms; and implementation work to help reviewers adopt such tools effectively.

Through horizon scanning, we were quick to identify the potential of generative artificial intelligence (AI) and large language models (LLMs). However, evidence synthesis is highly consequential: NIPH's commissioned work directly influences Norwegian healthcare and welfare systems, and the systematic reviews it publishes via Cochrane and in other journals can inform decision-making globally. Adopting new tools without understanding how well they work would be unwise. We therefore designed a rigorous comparison of human and LLM performance on a core evidence synthesis task.

Why Risk of Bias Assessment?

When synthesizing evidence, systematic reviewers don't just summarize results; we also assess how trustworthy they are. Risk of bias (RoB) assessment examines whether trial design, conduct, or reporting could systematically distort findings. This means asking questions like: Was randomization robust? Were participants and investigators blinded? Were outcome data complete and fully reported? A trial that is at risk of bias is likely to over- or underestimate benefits and harms. Thus, a RoB assessment helps reviewers and stakeholders use evidence appropriately. Unfortunately, RoB assessment is time-consuming, and best practice requires that at least two highly trained, experienced researchers assess each paper and come to a consensus on the trial's risk of bias.

RoB assessment was an ideal test case for comparing human and LLM performance: it's clearly defined, labor-intensive, and could potentially be completely automated—if LLMs can perform well enough.

We therefore wrote a detailed protocol describing our design, analysis plan, and how we would interpret and report results.

Our Approach

The generative AI field moves rapidly, but academic publishing does not. We posted our protocol as a preprint, committing to publish whatever we found, and submitted it to BMC Medical Research Methodology for peer review, where it was recently published.

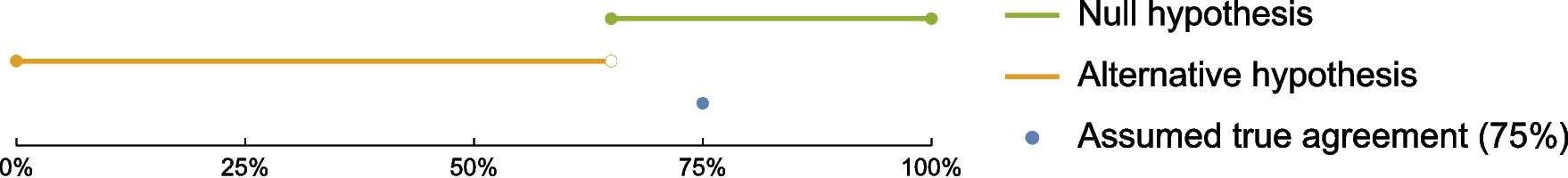

We planned to randomly select 100 Cochrane systematic reviews and one randomized trial from each that had been assessed for RoB by at least two human reviewers. We would develop the best prompt for ChatGPT that we could, using 25 pairs of reviews and trials to assess how well the LLM’s RoB assessments agreed with the human reviewers. We would then apply that best-performing prompt to the remaining 75 trials, again comparing ChatGPT's assessments with human assessments, to obtain our final estimate of agreement.
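The development and held-out split described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the code or analysis plan from our protocol: the trial identifiers, the random seed, the label names ("low", "high"), and the choice of Cohen's kappa as the agreement statistic are all hypothetical placeholders.

```python
import random
from collections import Counter


def split_dev_test(trial_ids, n_dev=25, seed=0):
    """Shuffle trial IDs reproducibly, then split into development and held-out sets."""
    rng = random.Random(seed)  # fixed seed (an assumption) so the split can be reproduced
    shuffled = list(trial_ids)
    rng.shuffle(shuffled)
    return shuffled[:n_dev], shuffled[n_dev:]


def percent_agreement(human, llm):
    """Proportion of trials on which the two sets of judgements match exactly."""
    matches = sum(h == m for h, m in zip(human, llm))
    return matches / len(human)


def cohen_kappa(human, llm):
    """Chance-corrected agreement between two raters (Cohen's kappa)."""
    n = len(human)
    p_observed = percent_agreement(human, llm)
    human_counts, llm_counts = Counter(human), Counter(llm)
    labels = set(human) | set(llm)
    # Agreement expected by chance if the two raters were independent.
    p_expected = sum((human_counts[l] / n) * (llm_counts[l] / n) for l in labels)
    if p_expected == 1.0:
        return float("nan")  # kappa is undefined when both raters use one identical label
    return (p_observed - p_expected) / (1 - p_expected)


# Hypothetical usage: 100 trial IDs, 25 for prompt development, 75 held out.
dev_ids, test_ids = split_dev_test(range(100))

# Toy judgements for four trials, purely to show the calculation.
human_labels = ["low", "low", "high", "high"]
llm_labels = ["low", "high", "high", "high"]
kappa = cohen_kappa(human_labels, llm_labels)
```

Keeping the 75 held-out trials untouched until the prompt is frozen is what makes the final agreement estimate independent of the prompt development process.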

The protocol development attracted coauthors from across Norway and researchers with affiliations in Sweden, the UK, Canada, and Colombia.

The Prespecification Dilemma

We weren't alone in spotting this research opportunity. While we carefully followed our protocol, other teams began publishing studies on human-LLM agreement in RoB assessment. However, unlike our work, these studies were generally not prespecified. Some reported excellent agreement; others the opposite. This heterogeneity may reflect genuine differences in study objectives, but some of it likely results from a lack of prespecification, for example iteratively changing methods after seeing the data. Systematic reviewers familiar with the Cochrane RoB 2 tool will recognize this as a "risk of bias in selection of the reported result". Prespecification in an openly available protocol is considered best practice, and its widespread absence suggests that much research in this area should be considered exploratory.

Publishing a protocol in a high-interest field is risky because it exposes plans to potential competitors. But it can also encourage collaboration, strengthen research, and reduce duplicated effort to minimize research waste.

A Call for Better Incentives

Those relying on evidence syntheses should be confident that synthesis methods are fit for purpose and that the evidence underpinning the adoption of those methods is sound. Unfortunately, incentives to publish first discourage prespecification and peer review.

More journals should adopt the Registered Report model: fast-tracking peer review of protocols and guaranteeing rapid publication of results papers, whatever the findings, provided authors report and justify any deviations from the published plan. Funders of methodological research could explicitly favor grant proposals that commit to prespecifying and publishing protocols and that come from applicants with track records of prespecification. They could also release funding in tranches, contingent on publishing protocols for subsequent research. Universities and research institutes could weigh prespecification in hiring and promotion.

The stakes are high. If LLMs can reliably perform RoB assessment or other evidence synthesis tasks, they could free human reviewers for higher-value work, accelerate review production, and—most importantly—help get safe, effective, affordable treatments into routine use sooner. But only rigorous evaluations can determine the degree to which LLMs help and harm, and what is traded off when we adopt them.

Our results are now in, and we look forward to sharing them soon. Our work on this study has reinforced our conviction that in fast-moving fields like AI applications in research, the academic community needs better mechanisms to balance speed with rigor, and that prespecification and appropriate research methods are a solid defense against being misled by our own enthusiasm for promising new tools.
