Frontal Cortex Integrates Visual Goals and Landmarks to Aim Gaze Movements

Published in Neuroscience

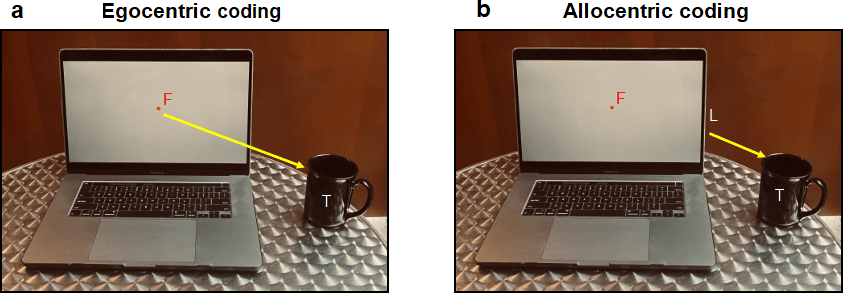

To localize an object, the visual system can use either egocentric cues (e.g., object location relative to the direction of gaze) or allocentric cues (i.e., object location relative to some external landmark) (Figure 1). People can be instructed to look or reach relative to one of these cues, but behavioural experiments have shown that the brain usually weighs them automatically and ‘optimally’, depending on their relative reliability 1,2. Ultimately, visual codes must be converted into egocentric commands, e.g., to move the eye relative to the head or the arm relative to the body. This poses a fundamental question: how does the brain integrate egocentric and allocentric cues to generate accurate action plans?
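One common way to formalize weighting cues by their reliability is inverse-variance (maximum-likelihood) cue combination. The sketch below is only an illustration under that assumption. It is not the model used in the studies cited above, and the function name, parameter names, and numbers are hypothetical.

```python
def combine_cues(ego_estimate, ego_variance, allo_estimate, allo_variance):
    """Combine two location estimates, weighting each by its reliability.

    Reliability is taken to be the inverse of the cue's variance
    (an assumption of this sketch, not a claim about the cited studies).
    """
    ego_reliability = 1.0 / ego_variance
    allo_reliability = 1.0 / allo_variance
    total_reliability = ego_reliability + allo_reliability

    # Fraction of the final estimate contributed by the allocentric cue.
    allo_weight = allo_reliability / total_reliability
    combined = (1.0 - allo_weight) * ego_estimate + allo_weight * allo_estimate

    # The combined estimate is more reliable than either cue alone.
    combined_variance = 1.0 / total_reliability
    return combined, allo_weight, combined_variance


# Hypothetical example: a noisy egocentric estimate (variance 4) and a
# more reliable landmark-based estimate (variance 1), in degrees.
estimate, weight, variance = combine_cues(10.0, 4.0, 14.0, 1.0)
print(estimate, weight, variance)
```

In this made-up example the landmark-based cue is four times more reliable, so it receives a weight of 0.8 and pulls the combined estimate to about 13.2 degrees.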

Figure 1: Two ways for the brain to code a visual target (T), e.g., a coffee cup, while looking at a computer screen. (a) Egocentric coding: the target is coded relative to some part of the body, in this case the fovea at the back of the eye, which corresponds to the point of gaze fixation (F) on the screen. (b) Allocentric coding: the target is coded relative to an external landmark (L), such as the edge of the screen.

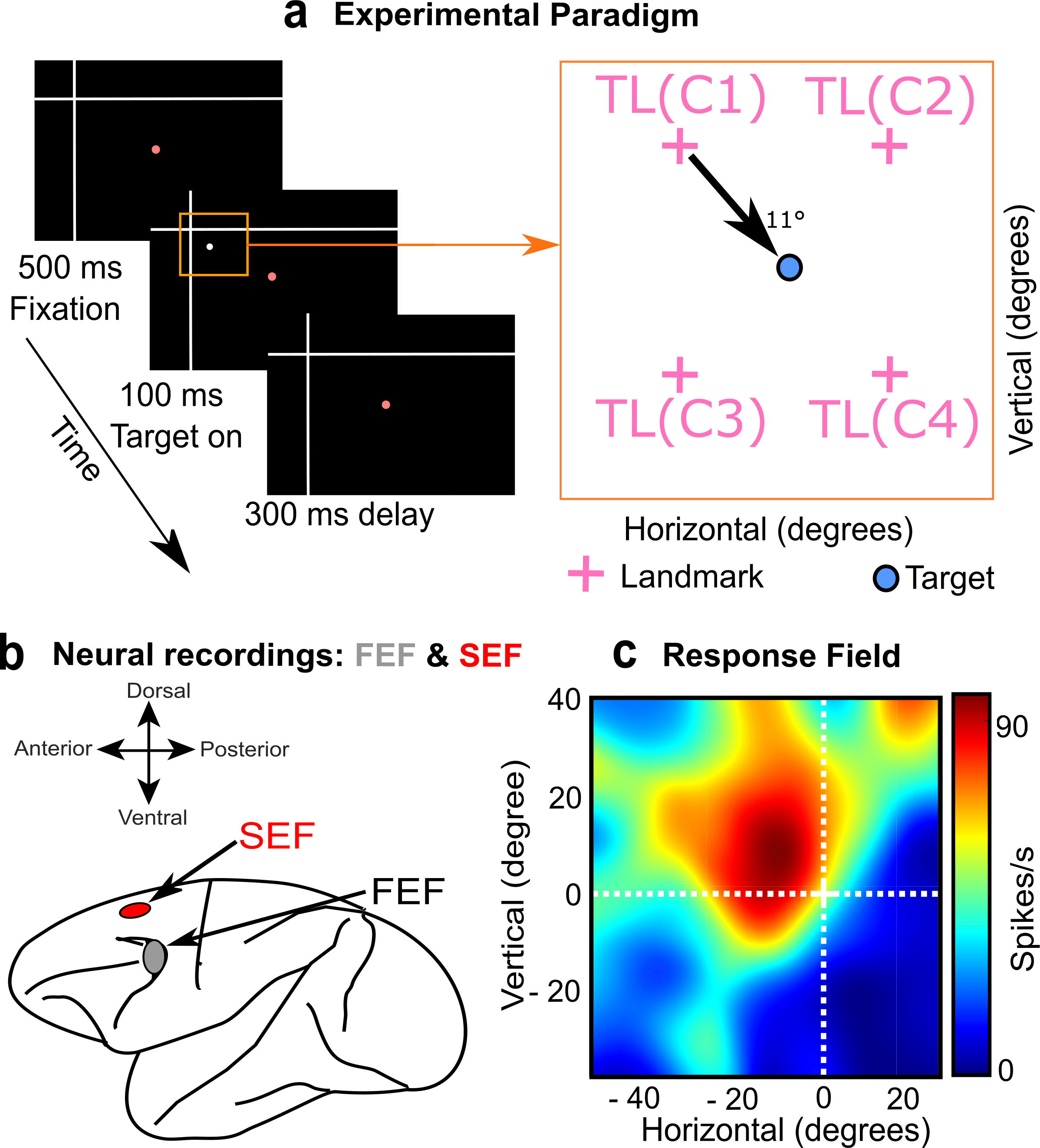

Neuroscientists think the visual system divides egocentric and allocentric information into the dorsal and ventral cortical streams, respectively 3,4. However, it is not known where or how this information merges for action planning, i.e., between those streams or in the frontal cortex areas for action. To test this, we (Schütz et al., 2023) showed potential saccade targets to rhesus macaques in the presence of visual landmarks (Figure 2a), while simultaneously recording activity in two frontal cortex gaze control areas (Figure 2b), the frontal (FEF) and supplementary eye fields (SEF) 5. Our novel findings were that visual responses in these areas encoded both saccade targets and visual landmarks, and that neurons coding both showed a shift from gaze-centered to landmark-centered coding.

Figure 2: Methods used in the study. (a) Left: Rhesus macaques fixated a central stimulus (red dot) with a large landmark in the background (white cross). Right: A potential saccade target then appeared in one of four quadrants relative to the landmark, resulting in four different target-landmark configurations (TLC1-4). (b) Simultaneously, we measured visual responses in the FEF and SEF. (c) Doing this throughout the visual field allowed us to reconstruct the visual response field of the neuron to the target (heat map) and then analyze how this was modulated by the landmark.

The path to obtaining these results was not simple. First, Vishal Bharmauria (a Research Associate in the Crawford lab, York University) spent many months recording the data and characterizing visual ‘response fields’ (the area of space that activates a neuron) from two macaque monkeys (Figure 2c). Overall, the visual response fields preferentially coded target location relative to the initial gaze fixation. Therefore, we first focused on later parts of the task (not shown here), where shifts in the visual landmark produced modulations in FEF and SEF motor activity that were reflected in gaze behavior 6,7. This explained the motor weighting of egocentric and allocentric cues observed previously 1,2, but could not explain how the corresponding visual inputs were integrated for normal behavior.

Next, Adrian Schütz entered the picture. Adrian, a Neurophysics PhD student from the Bremmer lab in Marburg, joined through a collaboration supported by the Brain in Action International Research Group. While visiting the Crawford lab, he developed novel ways of testing ‘intermediate coordinate systems’ and re-examined our visual response field data. He found that many visual responses showed subtle shifts toward landmark-centered target codes. This trend became stronger when he divided the data into different target-landmark configurations (Figure 2a, right panel), probably because their various influences tended to cancel out when they were pooled together.
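To make the idea of an ‘intermediate coordinate system’ concrete, here is a minimal sketch of one way such a test could work. It is not the analysis used in the paper. It assumes that target and landmark positions are given in degrees relative to the fixation point, that candidate frames lie on a line between fixation-centered (`alpha = 0`) and landmark-centered (`alpha = 1`) coordinates, and that the best frame is the one in which a simple kernel fit of the response field leaves the smallest leave-one-out residual. All function names, parameters, and the simulated neuron are hypothetical.

```python
import numpy as np


def loo_residual(coords, rates, bandwidth=1.0):
    """Mean leave-one-out squared error of a Gaussian-kernel response-field fit."""
    diffs = coords[:, None, :] - coords[None, :, :]
    sq_dist = np.sum(diffs ** 2, axis=-1)
    weights = np.exp(-sq_dist / (2.0 * bandwidth ** 2))
    np.fill_diagonal(weights, 0.0)  # predict each trial from the other trials only
    predictions = (weights @ rates) / weights.sum(axis=1)
    return float(np.mean((predictions - rates) ** 2))


def best_intermediate_frame(targets, landmarks, rates, n_steps=11):
    """Find the frame between fixation-centered (0) and landmark-centered (1)
    in which the response field is fit with the lowest residual."""
    alphas = np.linspace(0.0, 1.0, n_steps)
    # alpha = 0 leaves targets relative to fixation; alpha = 1 re-expresses
    # them relative to the landmark (target minus landmark).
    errors = [loo_residual(targets - a * landmarks, rates) for a in alphas]
    return float(alphas[int(np.argmin(errors))]), errors


# Simulated (hypothetical) neuron whose response depends on where the target
# lies relative to the landmark, with noise-free Gaussian tuning.
rng = np.random.default_rng(0)
targets = rng.uniform(-20.0, 20.0, size=(400, 2))
landmarks = rng.uniform(-8.0, 8.0, size=(400, 2))
preferred = np.array([5.0, 0.0])
offset = targets - landmarks - preferred
rates = np.exp(-np.sum(offset ** 2, axis=1) / (2.0 * 4.0 ** 2))

best_alpha, _ = best_intermediate_frame(targets, landmarks, rates)
print(f"best-fitting frame: alpha = {best_alpha:.1f}")
```

A neuron tuned purely to fixation-centered target location should instead be fit best near `alpha = 0`, and intermediate values correspond to the partial shifts described above. The sketch also suggests why pooling can blur the effect: a small shift only lowers the residual if the landmark position is tracked trial by trial.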

We thought this result was interesting enough to submit as a paper to the current journal. The editorial feedback was mostly positive but prompted us to take a deeper look into our results. This was slow going, because by then there was a global pandemic, the authors were separated across the Atlantic Ocean, and the first author had started a new job and a family. Nevertheless, we kept at it and found a new result: not only did neurons in both areas encode target location, but some (30% in FEF) preferentially coded landmark location. Indeed, most showed intermediate codes between the target and landmark.

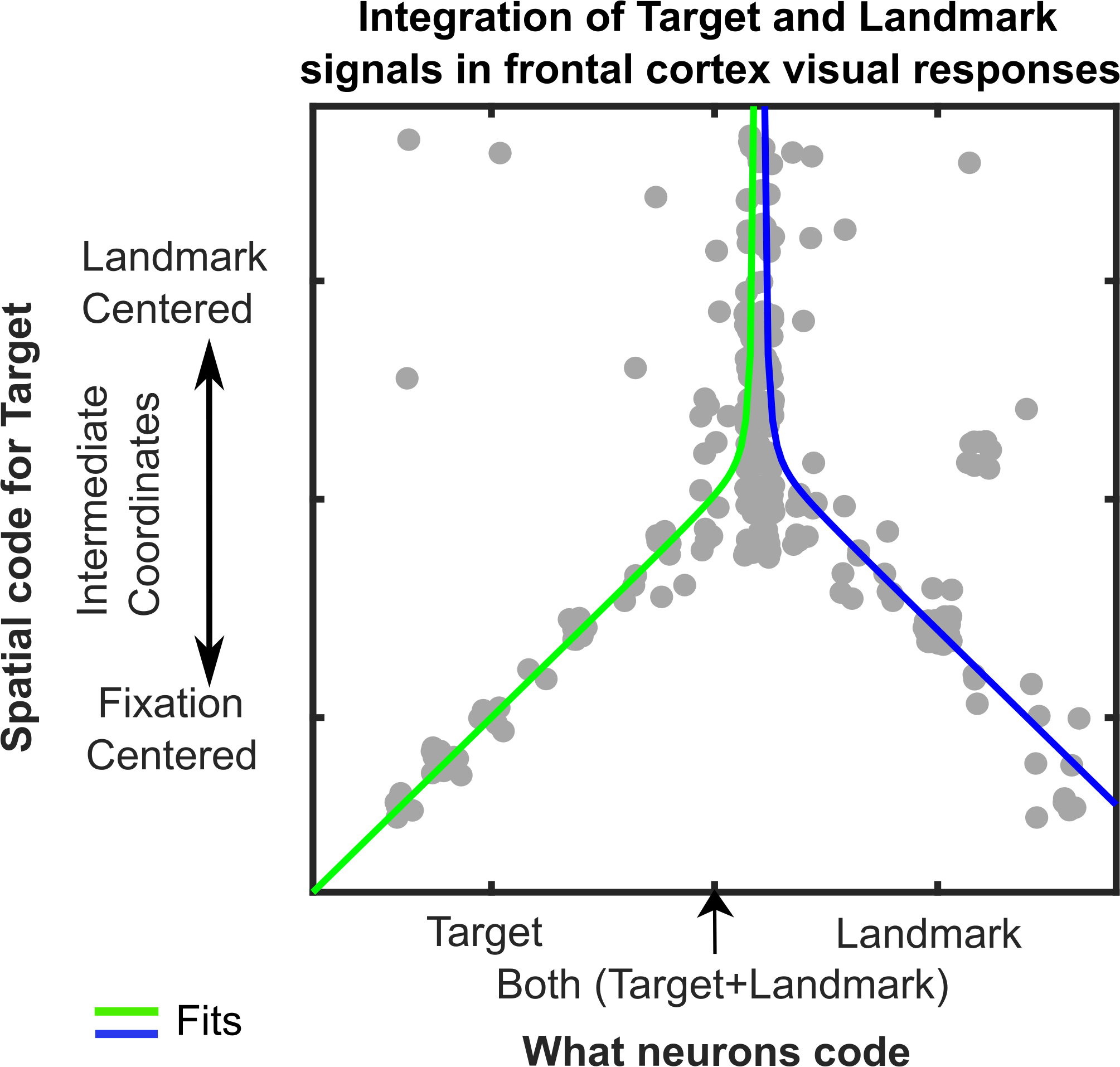

This novel finding led to a third result that was even more intriguing. We reasoned that cells that integrate target and landmark information might show a shift toward landmark-centered target coding, whereas cells that only code target or landmark location might not show this shift. To test this, we plotted the intermediate coordinate system score (fixation- to landmark-centered) as a function of our parameter code score (target to landmark code) for both areas (only FEF shown below). The pattern was even stronger than we expected: the inverted ‘Y’ reported in our paper (Figure 3). In other words, in both areas landmark-centered coding rose and peaked in cells that coded targets and landmarks.

Figure 3: Integration of Target and Landmark codes (Horizontal Axis) is associated with shifts in target coding from Fixation-Centered toward a Landmark-Centered scheme (Vertical Axis). Each grey dot represents data fit to the response field for one target-landmark configuration from one neuron. Line fits suggest the effect peaks and asymptotes near where the target and landmark coding are equal.

These results show that visual processing is not complete in the traditional ‘visual’ areas of the brain. Visuospatial information can be fully integrated in frontal areas associated with cognition and movement control, presumably to provide the optimal integration described above. Further, we identified a specific cellular mechanism. It is likely that the brain does such ‘last minute’ computations to allow for the flexible, intelligent use of vision for complex behaviors. We predict that other behaviors may utilize similar mechanisms. These mechanisms likely also have implications for understanding the neurological deficits associated with degradation of dorsal vs. ventral visual areas 3,4 as well as direct damage to frontal cortex.

1. Fiehler, K. & Karimpur, H. Spatial coding for action across spatial scales. Nat Rev Psychol 2, 72–84 (2023).

2. Chen, Y. & Crawford, J. D. Allocentric representations for target memory and reaching in human cortex. Annals of the New York Academy of Sciences 1464, 142–155 (2020).

3. Milner, D. & Goodale, M. The Visual Brain in Action. (Oxford University Press, 2006). doi:10.1093/acprof:oso/9780198524724.001.0001.

4. Schenk, T. No Dissociation between Perception and Action in Patient DF When Haptic Feedback is Withdrawn. J. Neurosci. 32, 2013–2017 (2012).

5. Schall, J. D. Visuomotor Functions in the Frontal Lobe. Annual Review of Vision Science 1, 469–498 (2015).

6. Bharmauria, V. et al. Integration of Eye-Centered and Landmark-Centered Codes in Frontal Eye Field Gaze Responses. Cereb Cortex 30, 4995–5013 (2020).

7. Bharmauria, V., Sajad, A., Yan, X., Wang, H. & Crawford, J. D. Spatiotemporal Coding in the Macaque Supplementary Eye Fields: Landmark Influence in the Target-to-Gaze Transformation. eNeuro 8, ENEURO.0446-20.2020 (2021).


Communications Biology

An open access journal from Nature Portfolio publishing high-quality research, reviews and commentary in all areas of the biological sciences, representing significant advances and bringing new biological insight to a specialized area of research.
