Med-BERT: Pre-trained Embedding for Structured EHR

Published in Healthcare & Nursing

Frankly, structured electronic health records (EHRs) are not the favorite data modality for deep learning. At least not yet.

One reason is that, unlike images and natural language, for which large training data sets are freely available, very large collections of EHRs are inaccessible to most researchers. This hampers predictive modeling at individual hospitals, which can often access only their own samples, and those are small by deep learning standards.

Our work addresses this issue by adapting the popular NLP framework BERT to EHRs. BERT is a masked autoencoder transformer model that is pre-trained on a very large data set. While pre-training is difficult and expensive, the pre-trained model can be fine-tuned to deliver state-of-the-art results on a variety of natural language tasks, even with a smaller data set and relatively easy and cheap computation. However, the BERT pre-train-then-fine-tune methodology has not yet been convincingly demonstrated on structured EHR data.
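To make the masked-autoencoder idea concrete for structured data, here is a minimal sketch of how masking could be applied to a patient's sequence of diagnosis codes. It follows the masking recipe of the original NLP BERT (select about 15% of positions; of those, 80% become a mask token, 10% a random token, 10% stay unchanged), which may differ from what Med-BERT actually does. The function name `mask_codes`, the example codes, and the tiny vocabulary are all hypothetical.

```python
import random

MASK = "[MASK]"  # placeholder token the model must fill in


def mask_codes(codes, vocab, mask_prob=0.15, rng=None):
    """Return (inputs, labels) for masked-code prediction.

    labels[i] is the original code at positions chosen for prediction
    and None everywhere else. Uses the 80/10/10 rule from NLP BERT;
    this is an assumption, not necessarily Med-BERT's exact scheme.
    """
    rng = rng or random.Random()
    inputs, labels = [], []
    for code in codes:
        if rng.random() < mask_prob:
            labels.append(code)  # the model is trained to recover this
            roll = rng.random()
            if roll < 0.8:
                inputs.append(MASK)               # 80%: hide the code
            elif roll < 0.9:
                inputs.append(rng.choice(vocab))  # 10%: random code
            else:
                inputs.append(code)               # 10%: leave as-is
        else:
            inputs.append(code)
            labels.append(None)  # not part of the training loss
    return inputs, labels


# Hypothetical patient history written as ICD-10-style codes.
visit_codes = ["E11.9", "I10", "N18.3", "I50.9"]
vocab = sorted(set(visit_codes) | {"J45.909", "K21.9"})
masked, targets = mask_codes(visit_codes, vocab, rng=random.Random(0))
```

During pre-training, a transformer would read `masked` and be penalized only at positions where `targets` is not `None`. Fine-tuning then reuses the learned weights with a small task-specific prediction head.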

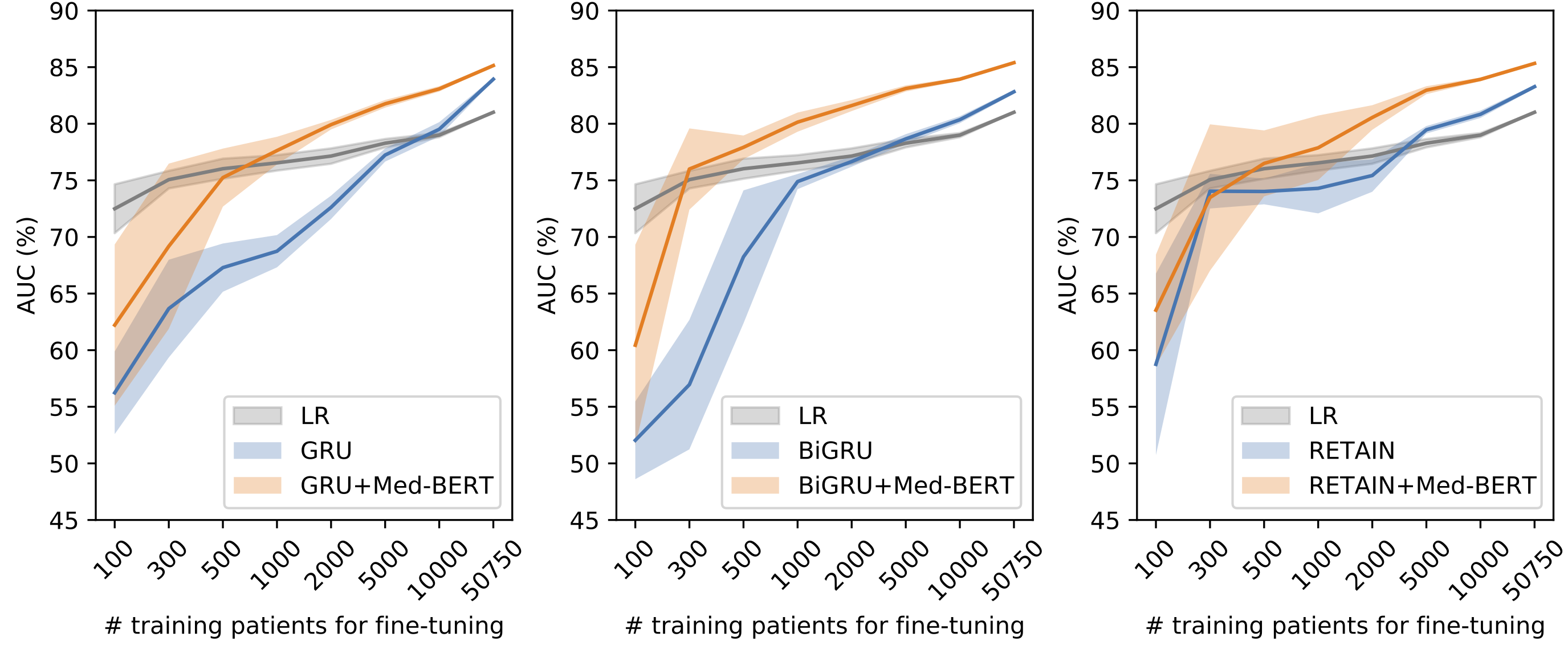

In this work, we developed Med-BERT, a BERT model for structured EHR data. We pre-trained a 17-million-parameter transformer model (not large by NLP standards, but quite big for structured EHR) on a data set of 28 million patients. We showed that Med-BERT substantially improves prediction accuracy, boosting the area under the receiver operating characteristic curve (AUC) by 1.21-6.14% for the tasks we tested. In particular, pre-trained Med-BERT boosts performance with small fine-tuning training sets, an effect equivalent to enlarging the training set by about 10 times.
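For readers less familiar with the metric, AUC can be read as the probability that a randomly chosen positive patient receives a higher predicted risk than a randomly chosen negative one, with ties counted as half. The sketch below computes it directly from that definition; the function name `roc_auc` and the toy labels and scores are hypothetical and are not taken from our experiments.

```python
def roc_auc(labels, scores):
    """AUC as the fraction of (positive, negative) pairs ranked correctly.

    labels: iterable of 0/1 outcomes; scores: predicted risks.
    Quadratic in the number of examples, so suitable for illustration only.
    """
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    if not pos or not neg:
        raise ValueError("need at least one positive and one negative example")
    wins = 0.0
    for p in pos:
        for n in neg:
            if p > n:
                wins += 1.0   # positive ranked above negative
            elif p == n:
                wins += 0.5   # tie counts as half
    return wins / (len(pos) * len(neg))


# Toy example: 3 of the 4 positive/negative pairs are ordered correctly.
toy_labels = [1, 0, 1, 0]
toy_scores = [0.9, 0.8, 0.7, 0.1]
toy_auc = roc_auc(toy_labels, toy_scores)  # 0.75
```

An AUC of 0.5 corresponds to random ranking and 1.0 to perfect ranking, which is why gains of a few points can be meaningful for models that are already reasonably accurate.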

Overall, Med-BERT proves the concept that the BERT methodology is applicable to structured EHR data. Sharing pre-trained Med-BERT models will benefit disease-prediction studies with small local training data sets, reduce data collection expenses, and accelerate the pace of AI-aided healthcare. Our work is available at doi: 10.1038/s41746-021-00455-y.


npj Digital Medicine

An online open-access journal dedicated to publishing research in all aspects of digital medicine, including the clinical application and implementation of digital and mobile technologies, virtual healthcare, and novel applications of artificial intelligence and informatics.
