Neuro-inspired AI keeps its eye on what's odd

Published in Computational Sciences

Biological systems are remarkably flexible and efficient. Our brain observes the world through a series of rapid eye movements called saccades. Saccades let us solve tasks in which many configurations and locations of inputs must be examined, for instance when navigating a busy day of digital calendars, messages, and notifications across devices, or simply when shopping for the best treats at a vibrant local market. They also ensure high efficiency by focusing on task-relevant locations instead of processing every location in the same manner, as current AI systems do. Furthermore, biological neurons are more intricate than the simple abstract neurons commonly used in deep learning. They possess rich, inherently temporal neural dynamics and communicate via highly efficient, sparse voltage spikes. Computational neuroscientists, who focus on understanding how the brain works, model networks of such neurons as so-called spiking neural networks (SNNs).

At IBM Research, we are exploring neuro-inspired models with the aim of developing sustainable, efficient AI. We keep asking: “Do AI models have to be large and energy hungry?” The answer may lie in what biology can teach us.

As reported in Nature Communications, we have proposed a neuro-inspired architecture that solves analytic intelligence riddles. The network observes the riddles through synthetic eye movements and processes the inputs using neurons whose temporal dynamics are inspired by biological neurons, achieving higher accuracy with fewer parameters than common artificial neural networks (ANNs). These results show that there is great potential to learn from the brain and to enhance contemporary AI with biologically inspired features.

Introducing a saccadic architecture

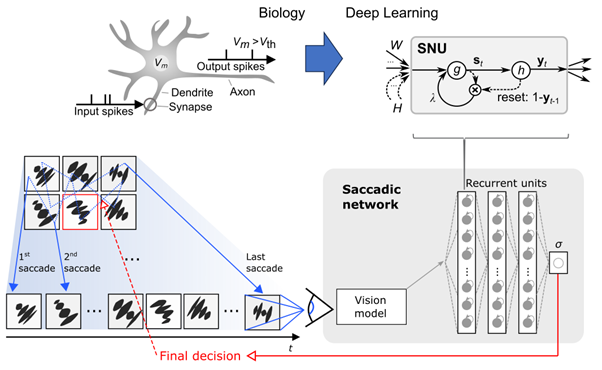

Our proposed architecture combines neuro-inspired dynamics with synthetic saccades, as depicted schematically in the figure.

The neural dynamics are modeled following the Spiking Neural Unit (SNU) framework. SNUs imitate the biological process in which incoming information collected by the dendrites is reflected in changes of the membrane potential inside the neuron's soma. When enough supporting evidence has accumulated over time, the neuron emits a spike at its axon and resets its internal state, so that the process can start over. We use the open-source IBM NeuroAI Toolkit, which incorporates these dynamics into standard deep learning frameworks. The toolkit brings the capabilities of neuro-inspired AI to machine learning, as recently demonstrated in works solving classification, prediction, and speech recognition tasks with substantial efficiency benefits. The inputs to the inherently temporal SNUs are provided by synthetic saccades: the model observes different input locations, first processes each of them through a vision model based on a convolutional neural network (CNN) inspired by the visual cortex, and then performs reasoning using SNUs.
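To make the SNU dynamics and the saccadic input pipeline more concrete, here is a minimal pure-Python sketch. It assumes the commonly published SNU update, in which a ReLU membrane state integrates the weighted input plus a decayed copy of its previous value, a step function with a negative bias emits the spike, and the previous spike resets the state. This is not the IBM NeuroAI Toolkit API: the names `SNULayer`, `glimpse`, and `run_saccades`, the 2×2 patch size, and all parameter values are hypothetical, and the convolutional vision model is omitted, so raw pixels feed the SNUs directly.

```python
# Hypothetical sketch: an SNU layer driven by synthetic saccades (pure Python).
# SNU update per unit, as assumed here (not the NeuroAI Toolkit API):
#   s_t = relu(W x_t + decay * s_{t-1} * (1 - y_{t-1}))   membrane state
#   y_t = heaviside(s_t + bias)                            spike (bias < 0 acts as threshold)


def relu(v):
    return max(0.0, v)


def heaviside(v):
    return 1.0 if v > 0.0 else 0.0


class SNULayer:
    """A layer of spiking neural units with decaying membrane state and reset."""

    def __init__(self, weights, bias=-1.0, decay=0.8):
        self.weights = weights              # one row of input weights per unit
        self.bias = bias                    # negative bias: firing threshold is -bias
        self.decay = decay                  # fraction of the state kept per time step
        self.state = [0.0] * len(weights)   # s_{t-1}: membrane potentials
        self.output = [0.0] * len(weights)  # y_{t-1}: spikes from the previous step

    def step(self, x):
        new_state, new_output = [], []
        for row, s_prev, y_prev in zip(self.weights, self.state, self.output):
            drive = sum(w * xi for w, xi in zip(row, x))  # integrate dendritic input
            # Keep a decayed copy of the old state unless the unit just spiked (reset).
            s = relu(drive + self.decay * s_prev * (1.0 - y_prev))
            new_state.append(s)
            new_output.append(heaviside(s + self.bias))
        self.state, self.output = new_state, new_output
        return new_output


def glimpse(image, row, col, size=2):
    """Flatten a size x size patch at (row, col): one synthetic fixation (no CNN here)."""
    return [image[r][c] for r in range(row, row + size) for c in range(col, col + size)]


def run_saccades(image, fixations, layer):
    """Feed one glimpse per time step into the SNU layer and collect its spikes."""
    return [layer.step(glimpse(image, r, c)) for r, c in fixations]


image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 0, 0],
    [0, 0, 0, 0],
]
layer = SNULayer(weights=[[0.25, 0.25, 0.25, 0.25]], bias=-1.5, decay=0.8)
spikes = run_saccades(image, [(0, 2), (0, 2), (2, 0)], layer)
print(spikes)  # [[0.0], [1.0], [0.0]]
```

Two fixations on the same bright patch are needed before the unit crosses its threshold, and the spike then clears the state, so the following fixation on a blank region starts from zero. This integrate, fire, and reset cycle is what gives SNUs their temporal character.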

Solving visual oddity riddles

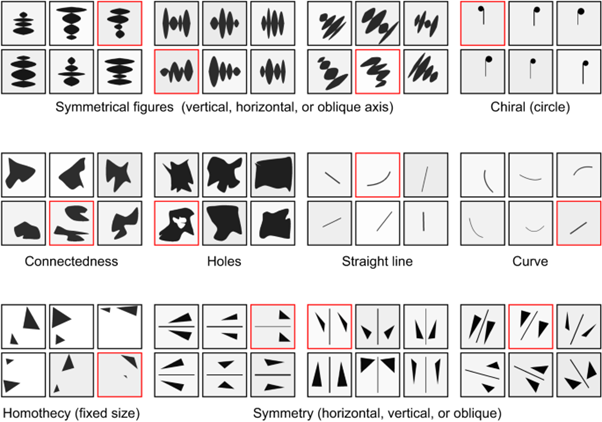

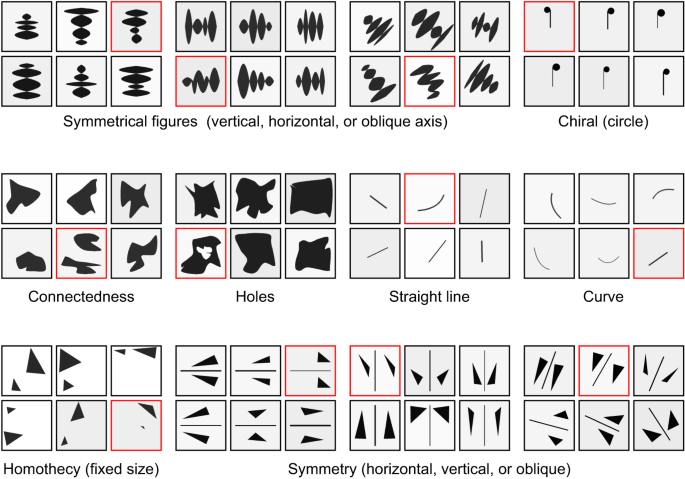

To evaluate our architecture in practice, we applied it to the visual oddity task, a well-known analytic reasoning problem used by cognitive neuroscientists to assess cross-cultural visual reasoning skills. The task comprises different riddles, each designed to test the comprehension of concepts such as points, lines, parallelism, and symmetry. Should the lines be straight, curved, short, or perhaps long? It all depends on the context, and understanding each riddle requires relational reasoning skills. The goal is to identify the one frame among the six given frames that violates the concept being tested. The figures show a few example riddles, generated with the open-source generator accompanying the publication, along with their labels; the “odd” frames are marked with red boundaries. The person, or in our case the AI, being tested is of course given only the six frames, without any information on the concepts being tested.
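The format of the task itself is easy to state in code. The sketch below assumes each of the six frames has already been reduced to a small feature vector and picks the frame whose features lie farthest, on average, from the other five. The distance heuristic, the function name `odd_one_out`, and the hand-made features are illustrative assumptions only, not the approach from the paper; the networks discussed here have to infer the relevant concept from the images themselves, which is precisely what makes the task hard.

```python
import math


def odd_one_out(frames):
    """Return the index of the frame farthest, on average, from all the others."""
    scores = []
    for i, fi in enumerate(frames):
        distances = [math.dist(fi, fj) for j, fj in enumerate(frames) if j != i]
        scores.append(sum(distances) / len(distances))
    return max(range(len(frames)), key=lambda i: scores[i])


# Six hypothetical frames described by (curvature, line length);
# the concept being tested is "straight lines", and frame 3 is curved.
frames = [(0.0, 0.5), (0.0, 0.6), (0.0, 0.4), (1.0, 0.5), (0.0, 0.55), (0.0, 0.45)]
print(odd_one_out(frames))  # 3
```

The heuristic only works because the features were chosen to match the concept being tested; a solver that, like a human participant, is not told the concept must discover which relation matters in each riddle.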

We compared solution accuracy as a function of model size for three cases: the Oddity Relational Network (OReN), the state-of-the-art deep learning relational network approach; a saccadic network using common deep learning units, namely long short-term memory (LSTM) units; and a fully neuro-inspired architecture with saccades and neural dynamics implemented with SNUs. For models that solve a single category of riddles, the best OReN and LSTM models achieved ≈98% accuracy while requiring over 1 million parameters. Remarkably, the smallest saccadic SNU model achieved a higher accuracy of ≈99% with only ≈0.15 million parameters. For models that solve all riddle categories in a single network, the best OReN and LSTM models achieved up to ≈96% at ≈12 million parameters, whereas the saccadic SNU model reached ≈97% accuracy with only ≈1.3 million parameters. For further technical details, please refer to our open-access research paper “On the visual analytic intelligence of neural networks”.
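The parameter savings implied by these approximate figures can be checked with a few lines of arithmetic. The sketch simply divides the quoted baseline sizes by the saccadic SNU sizes; it uses only the rounded numbers given in the text, not the exact model sizes from the paper.

```python
# Approximate figures quoted above, in millions of parameters.
# The single-category baselines needed "over 1 million", so that ratio is a lower bound.
settings = {
    "single category": (1.0, 0.15),  # (best OReN/LSTM, smallest saccadic SNU)
    "all categories": (12.0, 1.3),   # (best OReN/LSTM, saccadic SNU)
}

reductions = {}
for name, (baseline, snu) in settings.items():
    reductions[name] = baseline / snu
    print(f"{name}: {reductions[name]:.1f}x fewer parameters")
```

This prints reductions of about 6.7x and 9.2x, so both settings come out close to an order of magnitude, while the SNU models also scored about one percentage point higher in each setting.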

Our results demonstrate how incorporating more biological inspiration into AI improves accuracy and efficiency, with model sizes reduced by roughly an order of magnitude. Neuro-inspired AI offers an avenue for addressing the fundamental question of how to build lightweight, fast, and sustainable AI solutions. Our research team continues to explore further biological inspiration, and we remain curious and optimistic about the further benefits that neuro-inspired AI can bring to society.
