Predicting health outcomes from smartphone videos

Published in Healthcare & Nursing

Approximately 1.71 billion people worldwide have musculoskeletal conditions, which are the leading global contributor to disability (1). Osteoarthritis, for example, is a chronic and painful musculoskeletal condition that will affect half of people during their lifetime (2). How might the course of these diseases improve if we could identify, monitor, and treat them from the comfort of a patient’s home?

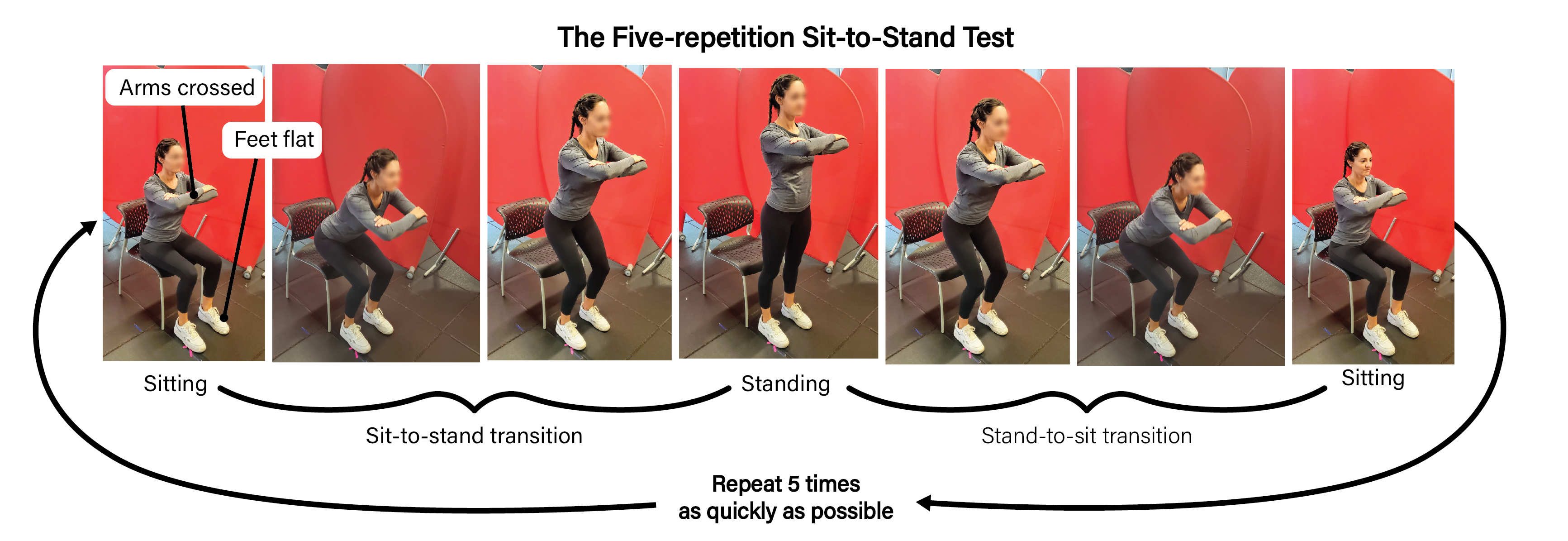

Clinicians widely use the time to complete five repetitions of the sit-to-stand transition (Figure 1) to evaluate an individual’s physical function, but timing alone isn’t specific enough to differentiate diseases. Quantitative motion analysis gives deeper, more specific health insights, such as the presence of osteoarthritis, but it currently requires expensive laboratory equipment and technical expertise (3). The goal of our study was to bring a biomechanics lab to participants' homes through their smartphones.

Figure 1. The Five-Repetition Sit-to-Stand Test. Individuals start sitting down with their arms crossed in front of their chest and their feet flat on the floor. They then rise to stand (sit-to-stand transition) and sit back down (stand-to-sit transition) five times as quickly as possible.

Pose estimation is a computer vision technique that estimates the location of a person or object, or of its key points, in an image (4). Recent advances in pose estimation make it possible to detect the locations of a person’s joints automatically from smartphone video (5), potentially enabling anyone with a smartphone to analyze their own motion quantitatively. However, previous studies have been conducted either in a laboratory or at home with trained researchers recording the videos. It remains unclear whether pose estimation applied to self-recorded smartphone video can quantify movement accurately enough to predict health and physical function.
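For context on what pose estimation produces: OpenPose (4) outputs, for each video frame, a set of 2D body keypoints with confidence scores. A minimal sketch, assuming OpenPose's per-frame JSON output (written with its `--write_json` option) and its default BODY_25 keypoint layout, reads one frame into a dictionary keyed by keypoint index. The function name, the confidence cutoff, and the choice of the first detected person are illustrative assumptions, not details of the study's pipeline.

```python
import json


def parse_openpose_frame(json_text, min_confidence=0.1):
    """Return {keypoint_index: (x, y)} for the first person in one OpenPose frame.

    OpenPose stores each person's keypoints as a flat list
    [x0, y0, c0, x1, y1, c1, ...]; undetected joints are reported with zero
    confidence, so keypoints below min_confidence are dropped here.
    """
    frame = json.loads(json_text)
    people = frame.get("people", [])
    if not people:
        return {}  # nobody detected in this frame
    # Assumption: the participant is the first person listed. A real pipeline
    # would need to pick the right person if the recorder is also in view.
    flat = people[0]["pose_keypoints_2d"]
    keypoints = {}
    for i in range(0, len(flat) - 2, 3):
        x, y, confidence = flat[i], flat[i + 1], flat[i + 2]
        if confidence >= min_confidence:
            keypoints[i // 3] = (x, y)
    return keypoints
```

In the BODY_25 layout, index 1 is the neck and index 8 is the mid-hip; per-frame points like these are the raw material for kinematic parameters such as trunk angle.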

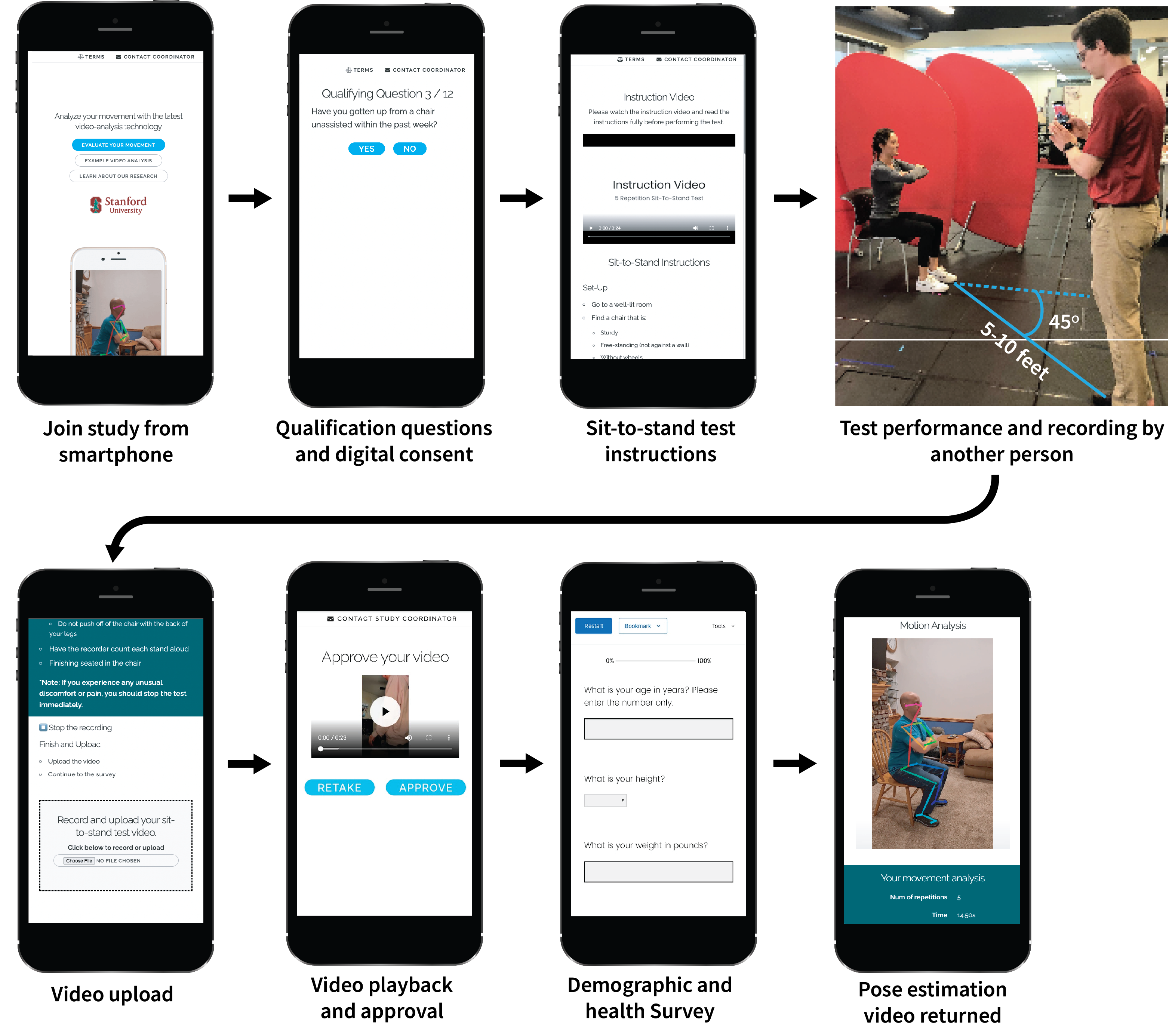

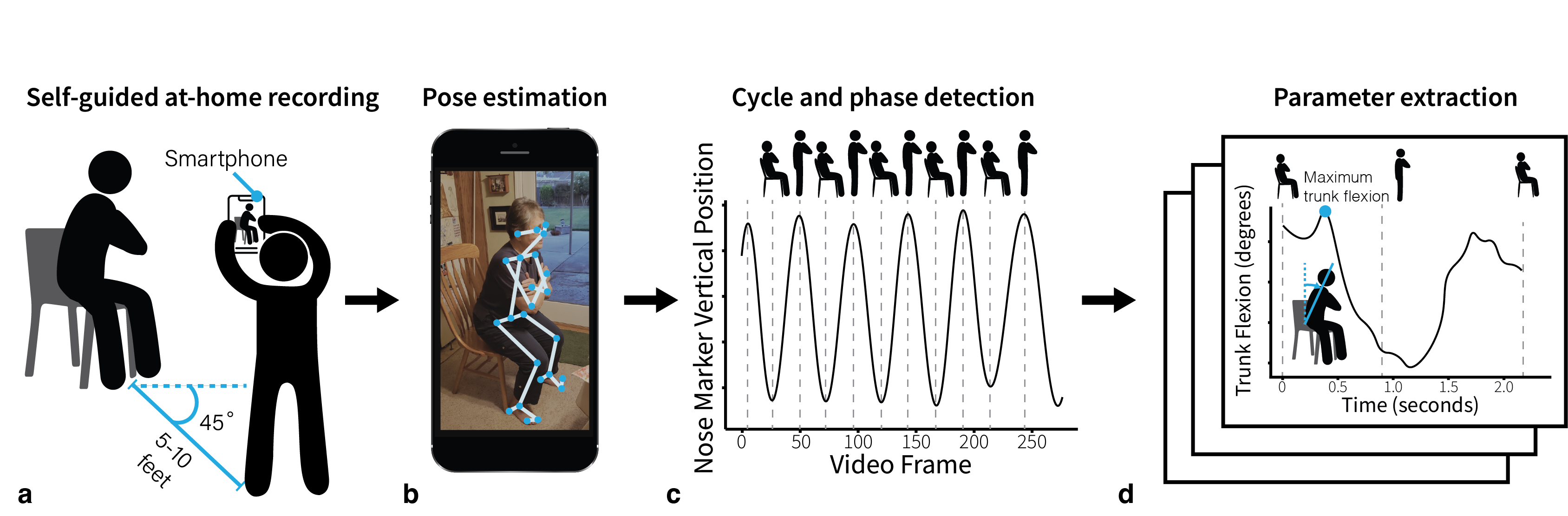

Thus, we examined whether pose estimation applied to smartphone videos that participants recorded at home themselves can quantify movement accurately enough to predict health outcomes, including a diagnosis of osteoarthritis. To do this, we developed an online tool that guides individuals through recording and uploading a video of the five-repetition sit-to-stand test and completing a health survey (Figure 2). Our pipeline then automatically performed pose estimation (4) and calculated kinematic parameters, such as joint angles (Figure 3).
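The post does not define trunk angle precisely. As a minimal sketch under an assumed 2D definition, the trunk can be treated as the segment from the mid-hip keypoint to the neck keypoint, with its tilt measured from image vertical; an angular acceleration, like the forward trunk angular acceleration reported in the results, can then be estimated from the per-frame angles by finite differences. Because the camera views the participant from 45 degrees, this is a projected angle rather than a true sagittal-plane angle, and which sign counts as "forward" depends on which side the camera is on. Both function names are hypothetical.

```python
import math


def trunk_angle_deg(neck, mid_hip):
    """Tilt of the mid-hip-to-neck segment from image vertical, in degrees.

    Image y grows downward, so dy is flipped to make "up" positive. An upright
    trunk gives 0; the neck displaced toward +x gives a positive angle.
    """
    dx = neck[0] - mid_hip[0]
    dy = mid_hip[1] - neck[1]
    return math.degrees(math.atan2(dx, dy))


def angular_acceleration(angles_deg, fps):
    """Central-difference second derivative (deg/s^2) of per-frame angles.

    The result has two fewer samples than the input, because the first and
    last frames have no neighbor on one side. Raw keypoints are noisy, so in
    practice the angles would usually be low-pass filtered first.
    """
    dt = 1.0 / fps
    return [
        (angles_deg[i + 1] - 2.0 * angles_deg[i] + angles_deg[i - 1]) / dt**2
        for i in range(1, len(angles_deg) - 1)
    ]
```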

Figure 2. Step-by-step details of the user-facing side of the web application.

Figure 3. An overview of our web application to collect and analyze movement data. a) Participants perform the five-repetition sit-to-stand test while an untrained individual records it using only a smartphone or tablet, from a 45-degree angle to capture a combined sagittal and frontal view. b) The video is uploaded to the cloud, and a computer vision algorithm, OpenPose (4), computes body keypoints throughout the movement. c) Our tool identifies the key transitions in each sit-to-stand (STS) cycle (i.e., when the participant rises from the chair and when they return to sitting). d) Our algorithms compute the total time to complete the test and several important biomechanical parameters, such as trunk angle.
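Step c) of Figure 3 identifies when each rise and each return to sitting happens. A minimal sketch, assuming the vertical position of the mid-hip keypoint is the signal (the post does not say which signal the tool uses), detects transitions with two thresholds (hysteresis) so that small frame-to-frame jitter is not counted as an extra repetition. Timing the test from the first rise to the last return to sitting is also an assumption; clinical protocols differ on the start and end events. Function names are hypothetical.

```python
def detect_transitions(hip_y, fps):
    """Return (rise_times, sit_times) in seconds from a mid-hip y trajectory.

    hip_y is in image pixels with y pointing down, so standing gives smaller
    values. Two thresholds split the range into a standing band and a sitting
    band; the state only flips when the signal fully enters the opposite band.
    """
    lo, hi = min(hip_y), max(hip_y)
    stand_thresh = lo + (hi - lo) / 3.0      # below this: clearly standing
    sit_thresh = lo + 2.0 * (hi - lo) / 3.0  # above this: clearly sitting
    standing = hip_y[0] < stand_thresh
    rise_times, sit_times = [], []
    for i, y in enumerate(hip_y):
        if not standing and y < stand_thresh:
            standing = True
            rise_times.append(i / fps)  # sit-to-stand transition
        elif standing and y > sit_thresh:
            standing = False
            sit_times.append(i / fps)   # stand-to-sit transition
    return rise_times, sit_times


def total_test_time(rise_times, sit_times):
    """Seconds from the first rise to the last return to sitting (assumed definition)."""
    if not rise_times or not sit_times:
        raise ValueError("no complete sit-to-stand cycle detected")
    return sit_times[-1] - rise_times[0]


hip_y = ([400.0] * 10 + [200.0] * 10) * 5 + [400.0] * 10  # 5 cycles at 10 fps
rises, sits = detect_transitions(hip_y, fps=10)
print(len(rises), total_test_time(rises, sits))  # 5 9.0
```

Because transitions are stamped at threshold crossings rather than at movement onset, a real tool would likely refine the start and end times.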

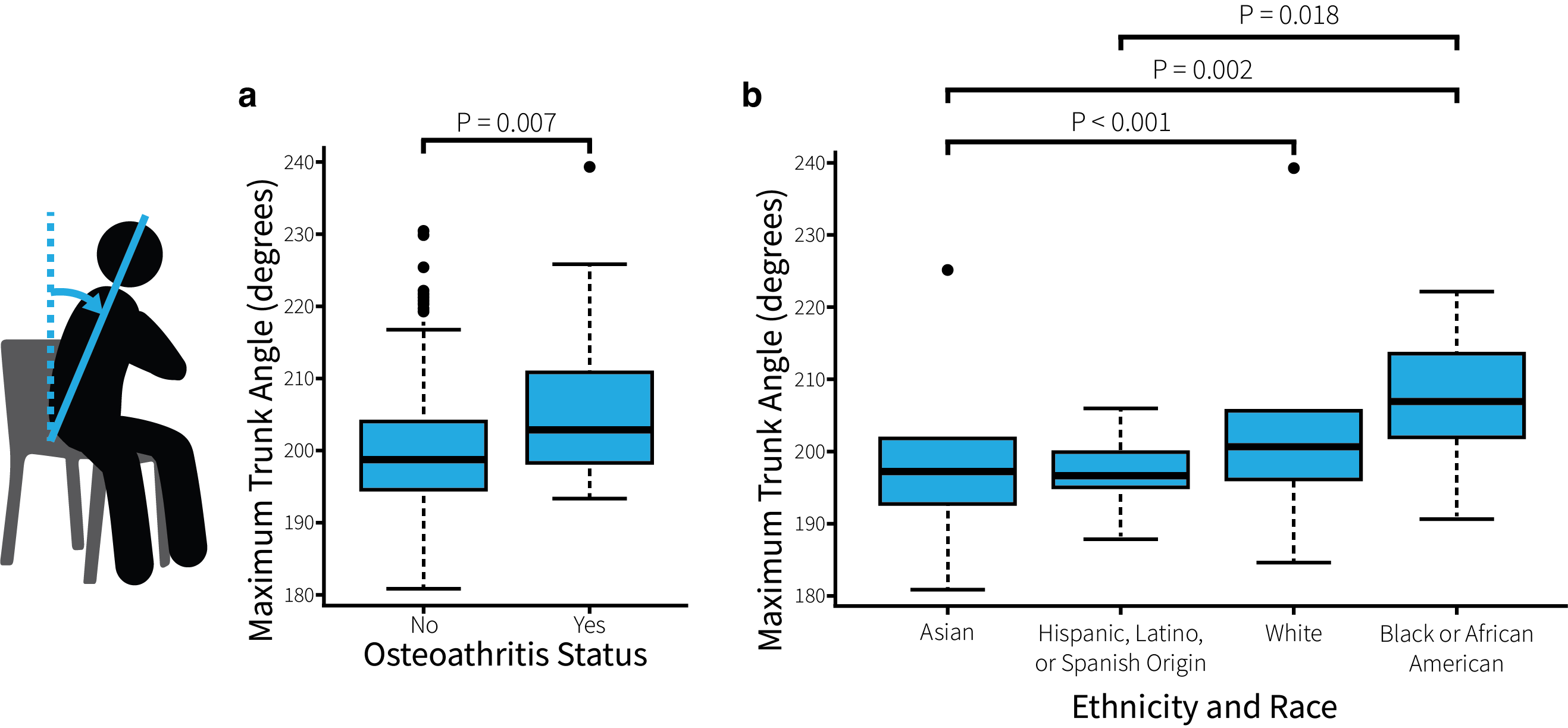

We deployed the application in a nationwide study of close to 500 participants aged 18 to 96 years (Figure 4). This sample is nearly 35 times the median sample size of traditional biomechanics studies (6). We found that measurements from at-home videos are sensitive enough to predict physical health and osteoarthritis. Specifically, trunk angle was associated with a diagnosis of knee or hip osteoarthritis (Figure 5a). With our large and diverse sample, we also found differences in trunk angle across racial and ethnic groups (Figure 5b), as well as a positive correlation between forward trunk angular acceleration and mental health. These findings remained significant even after controlling for age, sex, BMI, and total sit-to-stand (STS) time. Our smartphone-based tool also reproduced the significant positive associations between STS time and health, age, and BMI found in prior lab-based studies (7-9).
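The post does not describe the exact statistical models behind "controlling for" age, sex, BMI, and STS time. As a generic illustration only: in a multiple linear regression of trunk angle on an osteoarthritis indicator plus those covariates, the indicator's coefficient estimates the trunk-angle difference between participants with and without osteoarthritis at equal covariate values. The dependency-free sketch below fits ordinary least squares by solving the normal equations; the function name and column order are hypothetical, and a real analysis would use a statistics package that also reports standard errors and p-values.

```python
def ols_fit(X, y):
    """Ordinary least-squares coefficients b minimizing ||X b - y||^2.

    X is a list of rows, each starting with 1.0 for the intercept, e.g.
    [1.0, has_oa, age, sex, bmi, sts_time]; y holds the outcome (e.g. trunk
    angle). Solves the normal equations (X^T X) b = X^T y by Gauss-Jordan
    elimination with partial pivoting. Adequate for a handful of covariates;
    QR-based solvers are more robust for ill-conditioned designs.
    """
    p = len(X[0])
    # Augmented matrix [X^T X | X^T y], one row per coefficient.
    A = [
        [sum(row[i] * row[j] for row in X) for j in range(p)]
        + [sum(row[i] * yi for row, yi in zip(X, y))]
        for i in range(p)
    ]
    for col in range(p):
        # Swap in the row with the largest pivot for numerical stability.
        pivot = max(range(col, p), key=lambda r: abs(A[r][col]))
        if A[pivot][col] == 0.0:
            raise ValueError("X^T X is exactly singular; covariates are collinear")
        A[col], A[pivot] = A[pivot], A[col]
        scale = A[col][col]
        A[col] = [v / scale for v in A[col]]
        # Eliminate this column from every other row.
        for r in range(p):
            if r != col:
                factor = A[r][col]
                A[r] = [a - factor * b for a, b in zip(A[r], A[col])]
    return [A[i][p] for i in range(p)]
```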

Figure 4. A mosaic of the sit-to-stand videos collected from across the US.

Figure 5. Relationships between sit-to-stand parameters and survey measures as box-and-whisker plots. a) Trunk angle is larger in patients with hip or knee osteoarthritis. b) Trunk angle differs across race and ethnicity.

All data and the custom scripts for data processing and analysis are available open source at https://github.com/stanfordnmbl/sit2stand-analysis. The custom scripts for the web application are available at https://github.com/stanfordnmbl/sit2stand.

In summary, our study demonstrates that health can be assessed using smartphone videos that people record themselves at home. This finding adds to the growing evidence that mobile, inexpensive, easy-to-use web applications can enable decentralized clinical trials and improve remote health monitoring. With close to 80% of the world's population owning a smartphone, our technology may open unprecedented opportunities to track physical health and enable personalized interventions for musculoskeletal diseases like osteoarthritis.

References

1. World Health Organization. Musculoskeletal health. https://www.who.int/news-room/fact-sheets/detail/musculoskeletal-conditions (accessed 7 September 2022).

2. Centers for Disease Control and Prevention. The Johnston County Osteoarthritis Project: Arthritis & Disability. https://www.cdc.gov/arthritis/funded_science/current/johnston_county.htm (20 April 2017; accessed 7 September 2022).

3. Turcot, K., Armand, S., Fritschy, D., Hoffmeyer, P. & Suvà, D. Sit-to-stand alterations in advanced knee osteoarthritis. Gait Posture 36, 68–72 (2012).

4. Cao, Z., Hidalgo, G., Simon, T., Wei, S.-E. & Sheikh, Y. OpenPose: Realtime Multi-Person 2D Pose Estimation using Part Affinity Fields. arXiv [cs.CV] (2018).

5. Kidziński, Ł. et al. Deep neural networks enable quantitative movement analysis using single-camera videos. Nat. Commun. 11, 4054 (2020).

6. Mullineaux, D. R., Bartlett, R. M. & Bennett, S. Research design and statistics in biomechanics and motor control. J. Sports Sci. 19, 739–760 (2001).

7. van Lummel, R. C. et al. The Instrumented Sit-to-Stand Test (iSTS) Has Greater Clinical Relevance than the Manually Recorded Sit-to-Stand Test in Older Adults. PLoS One 11, e0157968 (2016).

8. Lord, S. R., Murray, S. M., Chapman, K., Munro, B. & Tiedemann, A. Sit-to-stand performance depends on sensation, speed, balance, and psychological status in addition to strength in older people. J. Gerontol. A Biol. Sci. Med. Sci. 57, M539–43 (2002).

9. Bohannon, R. W., Bubela, D. J., Magasi, S. R., Wang, Y.-C. & Gershon, R. C. Sit-to-stand test: Performance and determinants across the age-span. Isokinet. Exerc. Sci. 18, 235–240 (2010).

