Smarter Tech for Smarter Living: Recognizing Human Activities with AI

Published in Healthcare & Nursing, Bioengineering & Biotechnology, and Electrical & Electronic Engineering

Introduction

Picture a smart home that knows when an elderly person has fallen and instantly alerts a caregiver. Or a phone that adjusts its notifications depending on whether you’re driving, jogging, or relaxing. These kinds of intelligent responses rely on something called human activity recognition (HAR), a field of study that blends artificial intelligence (AI), machine learning, and wearable or sensor-based technologies.

HAR is one of the cornerstones of building truly smart and assistive technologies. It involves the automatic detection of a person’s daily activities—such as walking, sitting, cooking, or sleeping—based on data collected by devices like smartphones, smartwatches, or home sensors. The applications of this technology are vast, from health monitoring and elderly care to fitness tracking, home automation, and even security systems.

In recent years, with the rapid rise of the Internet of Things (IoT)—a network of interconnected devices that share and analyze data—HAR has gained significant traction. Your smartwatch that counts steps and your fitness app that tracks sleep patterns? They both use HAR. And as the IoT continues to expand, the demand for better, faster, and more reliable activity recognition systems is only increasing.

But what goes on behind the scenes of these smart technologies? How do machines understand human behavior?

The Role of Machine Learning in HAR

At the heart of HAR systems lies machine learning—a branch of AI where computers learn from data to make decisions without being explicitly programmed. Essentially, machine learning models are trained on large sets of data representing different human activities. The system learns patterns in the data and uses them to identify or predict what activity a person is doing at any given time.

Over the years, many machine learning techniques have been used for this purpose—ranging from simple decision trees to more complex neural networks. While some methods have been widely explored and optimized, others are just beginning to be tapped for their full potential.

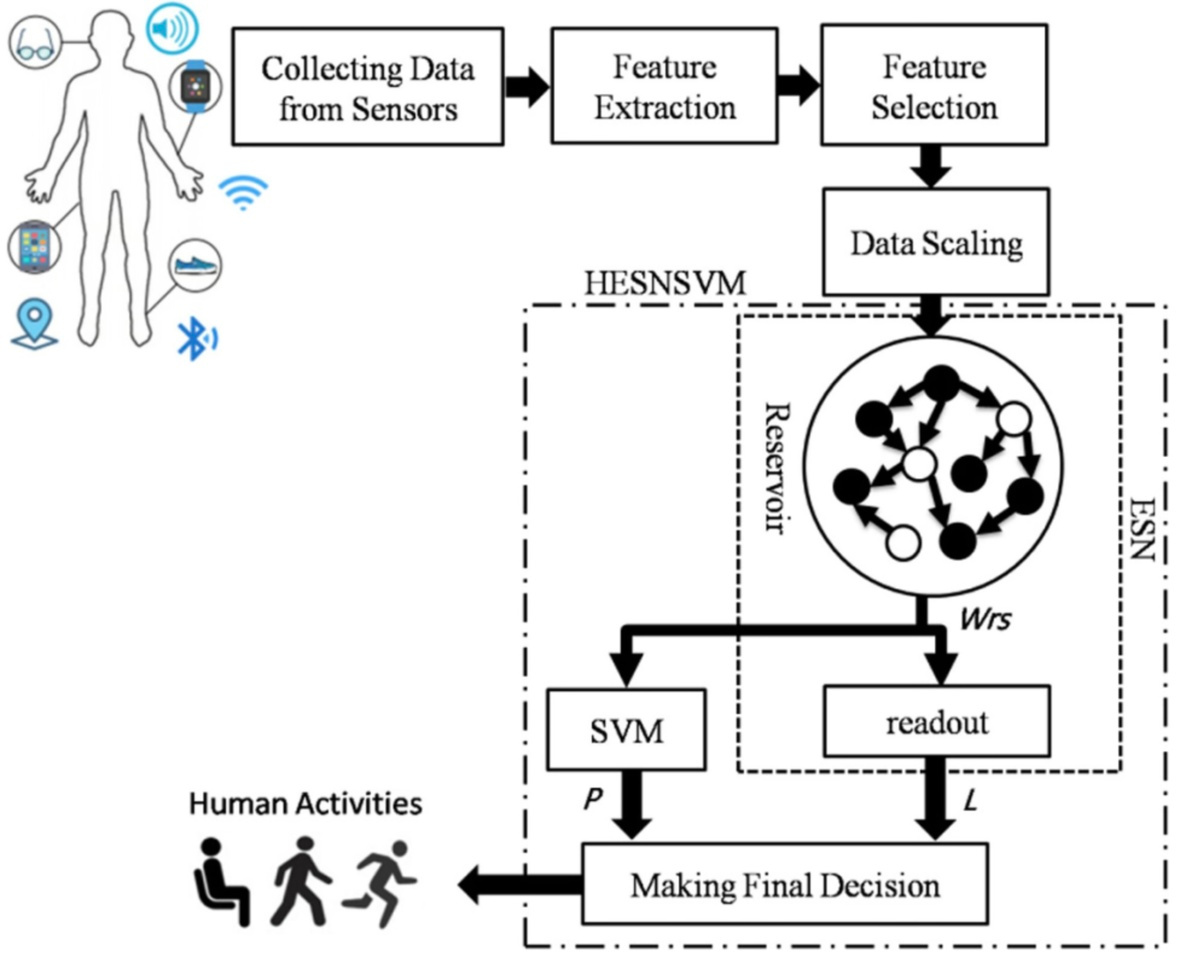

This is where our recent research comes in. We decided to explore an underused but promising technique known as the Echo State Network (ESN), combining it with a more established method called the Support Vector Machine (SVM). Our goal was to create a hybrid model that could recognize human activities more accurately and efficiently than existing models.

What is an Echo State Network?

The Echo State Network is a type of recurrent neural network (RNN). That might sound technical, but let’s break it down.

RNNs are a special kind of neural network designed to recognize patterns in sequences of data. This makes them particularly good at understanding time-based information, like speech, handwriting, or human activities over time.

What sets ESNs apart from other RNNs is their unique structure. Instead of learning all of the connections between neurons (which can be time-consuming and computationally expensive), ESNs initialize their input and internal ("reservoir") connections randomly, keep them fixed, and train only the output layer. This makes them much faster and easier to train, while still capturing complex time-based patterns in data.

Think of an ESN as a brain that listens and echoes past information, which helps it understand what’s going on now.
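For readers who want the mechanics, the standard (non-leaky) ESN from the reservoir-computing literature can be written as below. The post does not say which ESN variant the paper uses, so treat this as a generic sketch. Here u(t) is the sensor reading at time t, x(t) is the reservoir state, W_in and W are the fixed random input and reservoir matrices, and W_out holds the only trained weights. W is commonly rescaled so that its spectral radius ρ(W) is below 1, a heuristic for obtaining the fading "echo" memory described above. In a plain ESN, W_out is fitted by ridge regression on the collected states X (one column per time step) and targets Y, with regularization strength β.

```latex
\mathbf{x}(t) = \tanh\!\big(W_{\mathrm{in}}\,\mathbf{u}(t) + W\,\mathbf{x}(t-1)\big),
\qquad
\mathbf{y}(t) = W_{\mathrm{out}}\,\mathbf{x}(t),
\qquad
W_{\mathrm{out}} = Y X^{\top}\big(X X^{\top} + \beta I\big)^{-1}
```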

Why Combine ESN with SVM?

While ESNs are powerful at processing sequential data, they can sometimes struggle with classification—the part where the system decides what activity is actually happening. This is where Support Vector Machines shine.

SVMs are among the most reliable and well-understood classification algorithms in machine learning. They work by finding the boundary (a hyperplane, which in two dimensions is simply a line) that separates the classes with the widest possible margin. When you feed them clean, well-processed features, they do an excellent job of categorizing them accurately.
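The "widest margin" idea has a compact textbook form. For training feature vectors z_i with labels y_i ∈ {−1, +1}, a linear soft-margin SVM solves the problem below, where λ trades margin width (2/‖w‖) against training errors. This is the generic formulation, not necessarily the exact configuration used in the paper; kernel SVMs replace inner products between feature vectors with a kernel function, which allows curved boundaries.

```latex
\min_{\mathbf{w},\,b}\;\; \frac{\lambda}{2}\,\lVert\mathbf{w}\rVert^{2}
  + \frac{1}{n}\sum_{i=1}^{n}\max\!\big(0,\; 1 - y_i(\mathbf{w}^{\top}\mathbf{z}_i + b)\big),
\qquad
\hat{y}(\mathbf{z}) = \operatorname{sign}\!\big(\mathbf{w}^{\top}\mathbf{z} + b\big)
```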

By combining ESN’s strength in pattern recognition with SVM’s accuracy in classification, we created a two-stage system: the ESN processes the raw sensor data and extracts meaningful patterns, and then the SVM classifies these patterns into specific activities like sitting, walking, or standing.
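The two-stage idea can be sketched in a few lines of NumPy. This is an illustrative sketch under stated assumptions, not the authors' implementation: the reservoir size (50), spectral radius (0.9), mean-state pooling, the binary linear SVM trained by subgradient descent, and the synthetic two-axis "posture" data are all assumptions, and every function name (`make_reservoir`, `reservoir_features`, `train_linear_svm`, `predict`, `fake_window`) is hypothetical.

```python
import numpy as np


def make_reservoir(n_inputs, n_reservoir=50, spectral_radius=0.9, seed=0):
    """Random input and recurrent weights that stay fixed (never trained)."""
    rng = np.random.default_rng(seed)
    w_in = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_inputs))
    w = rng.uniform(-0.5, 0.5, size=(n_reservoir, n_reservoir))
    # Rescale so the largest eigenvalue magnitude equals spectral_radius.
    w *= spectral_radius / np.max(np.abs(np.linalg.eigvals(w)))
    return w_in, w


def reservoir_features(window, w_in, w):
    """Stage 1 (ESN): run a (T, n_inputs) sensor window through the reservoir
    and summarise it as the mean of the reservoir states."""
    x = np.zeros(w.shape[0])
    states = []
    for u in window:
        x = np.tanh(w_in @ u + w @ x)
        states.append(x)
    return np.mean(states, axis=0)


def train_linear_svm(feats, labels, lam=0.01, lr=0.1, epochs=500):
    """Stage 2 (SVM): binary linear SVM, labels in {-1, +1}, trained by full-batch
    subgradient descent on lam/2 * ||w||^2 + mean(max(0, 1 - y * (w.z + b)))."""
    n, d = feats.shape
    w, b = np.zeros(d), 0.0
    for _ in range(epochs):
        active = labels * (feats @ w + b) < 1  # inside the margin or misclassified
        y_act = labels[active]
        grad_w = lam * w - (y_act[:, None] * feats[active]).sum(axis=0) / n
        grad_b = -y_act.sum() / n
        w = w - lr * grad_w
        b = b - lr * grad_b
    return w, b


def predict(feats, w, b):
    return np.where(feats @ w + b >= 0, 1, -1)


# Hypothetical demo data: two "postures" whose 2-axis accelerometer windows
# differ only in the average reading on axis 0, plus Gaussian sensor noise.
rng = np.random.default_rng(1)


def fake_window(label, length=50):
    window = rng.normal(0.0, 0.1, size=(length, 2))
    window[:, 0] += 0.5 if label == 1 else -0.5
    return window


w_in, w_res = make_reservoir(n_inputs=2)

train_labels = np.array([1, -1] * 20)
train_feats = np.array([reservoir_features(fake_window(y), w_in, w_res) for y in train_labels])
w_svm, b_svm = train_linear_svm(train_feats, train_labels)

# Evaluate on freshly generated windows the SVM has not seen.
test_labels = np.array([1, -1] * 10)
test_feats = np.array([reservoir_features(fake_window(y), w_in, w_res) for y in test_labels])
test_accuracy = np.mean(predict(test_feats, w_svm, b_svm) == test_labels)
print(test_accuracy)
```

Averaging the reservoir states turns a window of any length into a fixed-length vector the SVM can consume; using only the last state is a common alternative. The sketch is binary; a system that distinguishes several activities would typically combine binary SVMs (one-vs-rest or one-vs-one) or use a library implementation such as scikit-learn's `SVC`.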

Reducing Complexity with Feature Selection

Another challenge in HAR systems is time complexity: essentially, how long it takes the system to process data and make decisions. Too many input features can slow the system down, and irrelevant ones can even reduce its accuracy. To tackle this, we applied a method called feature selection.

Feature selection is like cleaning out a cluttered closet. You go through all the available data and select only the most important parts—those that contribute most to accurate recognition. By removing irrelevant or redundant features, we were able to speed up our model and improve its performance at the same time.
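The post doesn't name the feature-selection method used in the paper. As one common, easy-to-follow example, the sketch below ranks features by a Fisher score (between-class variance divided by within-class variance) and keeps the top k. The method, the hypothetical helpers `fisher_scores` and `select_top_k`, and the synthetic data are all illustrative assumptions.

```python
import numpy as np


def fisher_scores(X, y):
    """Score each feature by between-class variance over within-class variance.
    Higher means the feature separates the activity classes better."""
    overall_mean = X.mean(axis=0)
    between = np.zeros(X.shape[1])
    within = np.zeros(X.shape[1])
    for c in np.unique(y):
        Xc = X[y == c]
        n_c = len(Xc)
        between += n_c * (Xc.mean(axis=0) - overall_mean) ** 2
        within += n_c * Xc.var(axis=0)
    return between / (within + 1e-12)  # small constant avoids division by zero


def select_top_k(X, y, k):
    """Return the indices of the k highest-scoring features, best first."""
    return np.argsort(fisher_scores(X, y))[::-1][:k]


# Synthetic example: three hypothetical activities, six candidate features.
rng = np.random.default_rng(0)
y = np.repeat([0, 1, 2], 30)
X = rng.normal(size=(90, 6))  # mostly pure noise
X[:, 1] += 3.0 * y            # feature 1 tracks the activity strongly
X[:, 4] += 1.5 * y            # feature 4 tracks it more weakly
keep = select_top_k(X, y, k=2)
X_reduced = X[:, keep]        # smaller input for the classifier
print(keep)                   # → [1 4]
```

Filter methods like this are cheap because they score each feature independently of the classifier; wrapper methods, which retrain the model on candidate subsets, can find better subsets at a much higher computational cost.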

How We Tested the System

To evaluate how well our ESN-SVM hybrid model works, we conducted multiple experiments on a dataset of human activities. The dataset included labeled sensor recordings collected from individuals performing everyday tasks.

Our experiments focused on key metrics such as accuracy, F1-score, and processing time. We compared our technique with other popular HAR models, and the results were very promising: our model not only achieved high accuracy but was also efficient in processing time and computational resources.
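Accuracy and F1-score are simple to compute from predicted and true activity labels. The sketch below uses the macro-averaged F1 (the unweighted mean of per-class F1 scores); the post doesn't say which averaging the paper used, so that choice is an assumption, as are the toy labels. Macro F1 penalizes a model that neglects a rarer activity even when overall accuracy looks high.

```python
def accuracy(y_true, y_pred):
    """Fraction of windows whose predicted activity matches the label."""
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def macro_f1(y_true, y_pred):
    """Unweighted mean over classes of F1 = 2 * precision * recall / (precision + recall)."""
    pairs = list(zip(y_true, y_pred))
    classes = sorted(set(y_true) | set(y_pred))
    scores = []
    for c in classes:
        tp = sum(t == c and p == c for t, p in pairs)
        fp = sum(t != c and p == c for t, p in pairs)
        fn = sum(t == c and p != c for t, p in pairs)
        precision = tp / (tp + fp) if tp + fp else 0.0
        recall = tp / (tp + fn) if tp + fn else 0.0
        denom = precision + recall
        scores.append(2 * precision * recall / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Toy labels: four "walk" windows and two "sit" windows; one "sit" is misread as "walk".
y_true = ["walk", "walk", "walk", "walk", "sit", "sit"]
y_pred = ["walk", "walk", "walk", "walk", "walk", "sit"]
print(accuracy(y_true, y_pred))  # 5/6 ≈ 0.833
print(macro_f1(y_true, y_pred))  # (8/9 + 2/3) / 2 = 7/9 ≈ 0.778
```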

What This Means for the Future

The success of our ESN-SVM model opens the door for smarter, more responsive technologies. Imagine:

- Smart homes that better understand when a person needs help.
- Wearable devices that detect early signs of health issues.
- Personalized fitness apps that adapt in real time based on your current activity.
- AI-powered systems for rehabilitation that monitor and assist recovery exercises.
- Improved safety systems for workers in high-risk environments.

While there is still more to explore—especially in terms of real-world deployment and handling noisy or unpredictable data—our work highlights the potential of underused machine learning methods like ESN in building the future of human-centric AI systems.

Final Thoughts

Human Activity Recognition is more than just a technical challenge; it’s a gateway to more empathetic and intelligent machines. With smarter algorithms and more efficient models, we can build systems that not only respond to human needs but anticipate them. Our ESN-based approach is one step toward that future, blending innovation with practicality.

If you'd like to explore the technical details of our work, feel free to watch the presentation and read the full paper here:

DOI: https://doi.org/10.1007/978-3-030-72802-1_11
