VISEM-Tracking, a human spermatozoa tracking dataset

Published in Research Data

VISEM-Tracking, a human spermatozoa tracking dataset - Scientific Data

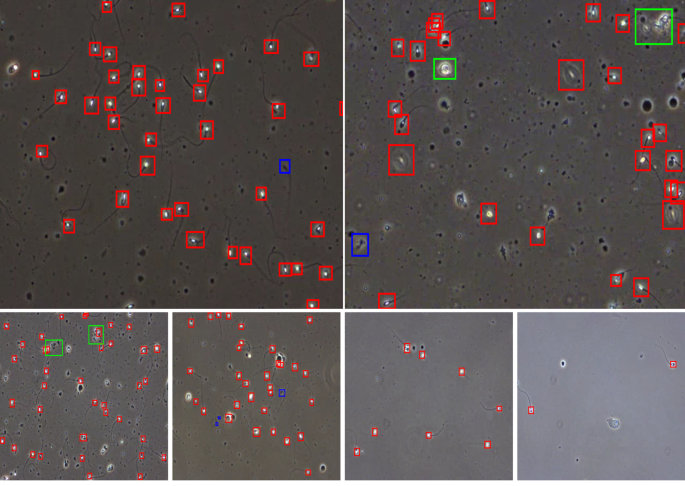

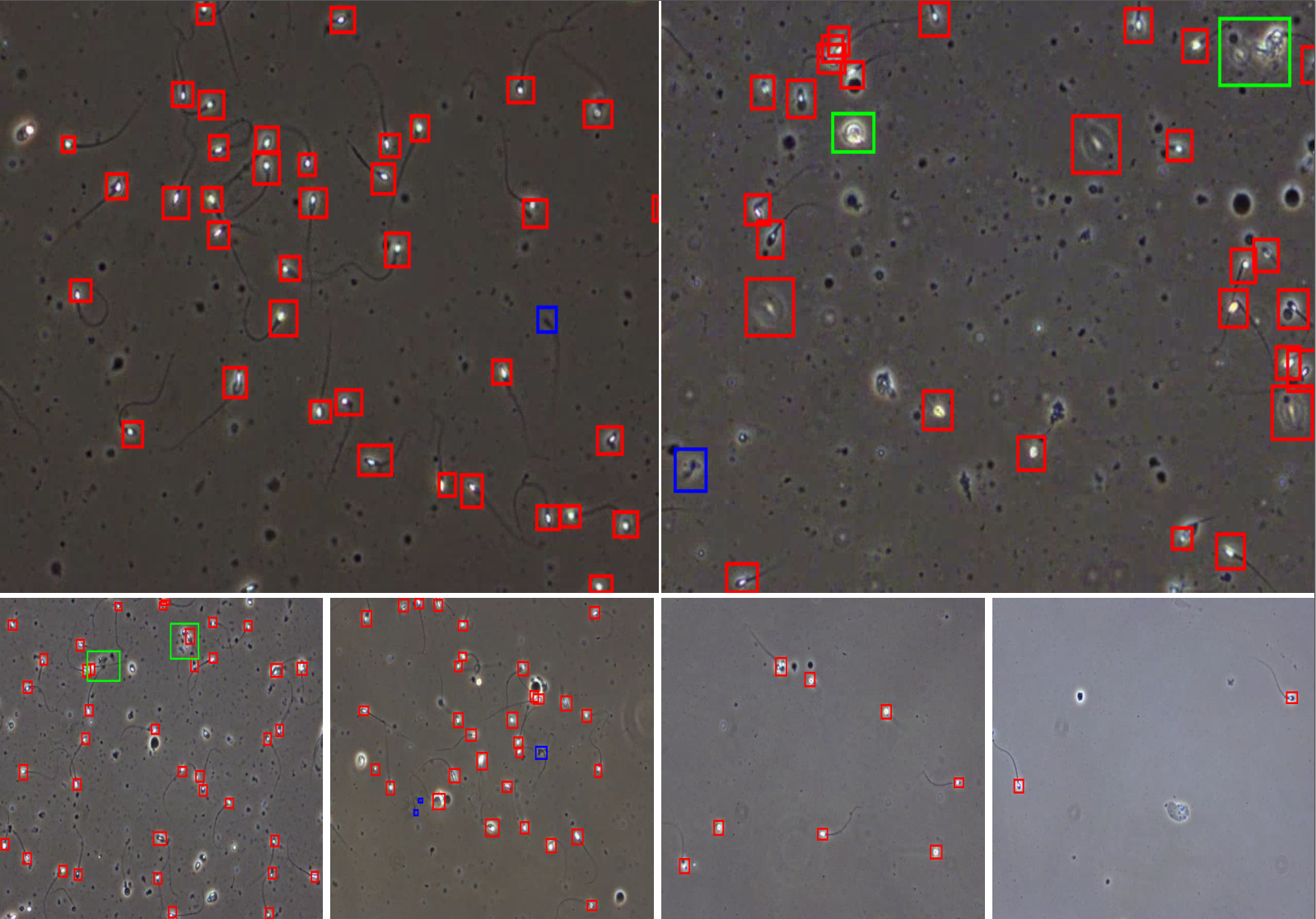

Manual assessment of sperm motility under a microscope is challenging and requires extensive training, which has made computer-assisted sperm analysis (CASA) a popular alternative. However, supervised machine learning approaches need more training data to improve their accuracy and reliability. To address this, researchers have introduced the VISEM-Tracking dataset, which contains 20 video recordings (29,196 frames) of wet sperm preparations with manually annotated bounding-box coordinates and expert-analyzed sperm characteristics.

The VISEM-Tracking dataset is a resource for advancing computer-assisted sperm analysis: it pairs high-quality sperm video recordings with expert annotations. This addresses the current lack of data and supports the development of more accurate and reliable machine-learning models for sperm motility assessment.

A YOLOv5 deep learning model trained on the VISEM-Tracking dataset has shown promising baseline sperm detection performance, which indicates the dataset's potential for training more complex models to analyze spermatozoa.

VISEM-Tracking is available on Zenodo under a Creative Commons Attribution 4.0 International (CC BY 4.0) license. It features 30-second videos from 20 different patients with annotated bounding boxes. Additional 30-second video clips from both the annotated and unlabelled portions of the VISEM dataset are also provided, which makes the dataset suitable for future research in semi- or self-supervised learning.
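For readers who want to work with the bounding boxes, here is a minimal sketch of turning one annotation line into pixel coordinates. It assumes the labels are stored in the YOLO-style text format (`class x_center y_center width height`, all normalized to the range 0 to 1), which this post does not confirm; check the files on Zenodo before relying on it. The function name `parse_yolo_line`, the `PixelBox` type, and the 640×480 frame size in the usage example are hypothetical and chosen only for illustration.

```python
from typing import NamedTuple


class PixelBox(NamedTuple):
    """A bounding box in pixel coordinates (hypothetical helper type)."""
    class_id: int
    x_min: float
    y_min: float
    x_max: float
    y_max: float


def parse_yolo_line(line: str, frame_width: int, frame_height: int) -> PixelBox:
    """Convert one YOLO-style label line to pixel corner coordinates.

    Assumed layout: "<class> <x_center> <y_center> <width> <height>",
    with the four box values normalized by the frame size. Any extra
    trailing fields are ignored.
    """
    fields = line.split()
    if len(fields) < 5:
        raise ValueError(f"expected at least 5 fields, got {len(fields)}")

    class_id = int(fields[0])
    x_center, y_center, width, height = (float(v) for v in fields[1:5])

    # Scale from normalized units to pixels, then move from the
    # center/size representation to the corner representation.
    x_min = (x_center - width / 2) * frame_width
    y_min = (y_center - height / 2) * frame_height
    x_max = (x_center + width / 2) * frame_width
    y_max = (y_center + height / 2) * frame_height
    return PixelBox(class_id, x_min, y_min, x_max, y_max)


if __name__ == "__main__":
    # Illustrative values only; not taken from the dataset.
    box = parse_yolo_line("0 0.5 0.5 0.25 0.5", frame_width=640, frame_height=480)
    print(box)
```

The corner representation is convenient for drawing boxes on a frame or computing overlap between a prediction and an annotation; the frame size should come from the video file itself rather than being hard-coded.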

Access the dataset here: https://zenodo.org/record/7293726

Read the full paper: https://www.nature.com/articles/s41597-023-02173-4
