Watching “101 Dalmatians” in the MRI: a new multimodal dataset to explore sensory deprivation, development, and brain plasticity

Published in Neuroscience, General & Internal Medicine, and Behavioural Sciences & Psychology

What happens in the brain when we watch a movie, and what if one of our senses has never been active? Our recent article, published in Scientific Data, presents an open-access fMRI dataset designed to address these questions. Participants with typical development, congenital blindness, or congenital deafness experienced the film 101 Dalmatians inside the MRI scanner in an audiovisual, audio-only, or visual-only version. The dataset captures brain activity in a naturalistic context, as people engage with a familiar, emotionally rich story, while providing the structure and annotation depth needed for computational and cognitive analyses. By bridging experimental control and ecological validity, it opens new avenues for studying sensory processing and cross-modal plasticity.

A naturalistic and inclusive approach to brain research

Traditional neuroscience experiments often rely on artificial tasks and isolated stimuli such as flashing dots, tones, or words. Here, we wanted to move closer to real life. Movies are dynamic, multimodal, and narrative: they engage perception, attention, language, emotion, and memory all at once. Using 101 Dalmatians as a common stimulus allowed us to probe how the brain integrates sensory information, and how it compensates when one channel (vision or hearing) has been absent from birth.

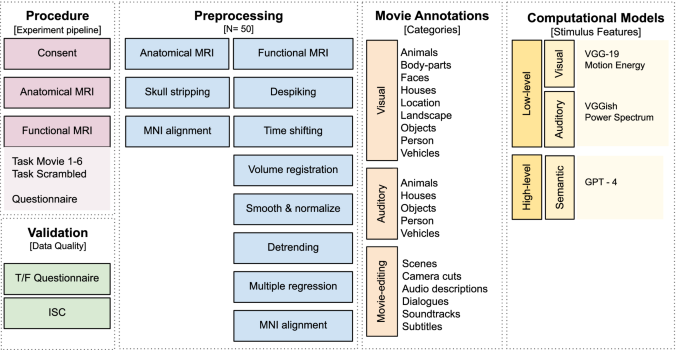

The participants formed three groups: typically developing, congenitally blind, and congenitally deaf individuals. During fMRI scanning, typically developing participants experienced one of three versions of the film: audiovisual, audio-only, or visual-only. Congenitally blind participants listened to the audio-only version, and congenitally deaf participants watched the visual-only version. This design makes it possible to compare brain responses both across sensory modalities and across developmental histories.

Multimodal annotations: from pixels and sounds to meaning

To make the dataset useful beyond the raw imaging data, we enriched it with detailed annotations of the film’s perceptual and semantic content. Visual features were extracted with motion-energy models and the deep network VGG-19; auditory features came from sound descriptors and the VGGish model. At the semantic level, we used GPT-4 to generate embeddings from the film’s subtitles and audio descriptions, linking each moment of the movie to a vector representation of its meaning.
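
Annotations like these are sampled at the film's own time resolution, whereas fMRI volumes arrive once per repetition time (TR) and reflect a delayed hemodynamic response. The sketch below shows one common way to put the two on the same time axis: convolve each feature with a canonical double-gamma hemodynamic response function (HRF), then average within each TR. It is an illustration under stated assumptions, not the pipeline used for this dataset. The function names, the 10 Hz feature rate, the 2 s TR, and the SPM-style HRF shape parameters are hypothetical choices, and random numbers stand in for real VGG-19, VGGish, or GPT-4 features.

```python
import math

import numpy as np


def double_gamma_hrf(dt: float, duration: float = 32.0) -> np.ndarray:
    """Double-gamma HRF sampled every `dt` seconds, using SPM-like default shapes
    (response shape 6, undershoot shape 16, undershoot ratio 1/6, unit scale)."""
    t = np.arange(0.0, duration, dt)

    def gamma_pdf(x: np.ndarray, shape: float) -> np.ndarray:
        # Gamma density with unit scale: x^(k-1) * exp(-x) / Gamma(k)
        return x ** (shape - 1.0) * np.exp(-x) / math.gamma(shape)

    hrf = gamma_pdf(t, 6.0) - gamma_pdf(t, 16.0) / 6.0
    return hrf / hrf.sum()  # normalize so the kernel sums to 1


def features_to_tr(features: np.ndarray, feature_rate_hz: float,
                   tr_s: float, n_vols: int) -> np.ndarray:
    """Map a (n_samples, n_features) annotation time series sampled at
    `feature_rate_hz` onto fMRI volumes: convolve each feature with the HRF,
    then average the samples that fall within each TR."""
    hrf = double_gamma_hrf(1.0 / feature_rate_hz)
    # np.convolve returns len(col) + len(hrf) - 1 samples; keep the first
    # len(col), so each output sample depends only on current and past input
    conv = np.stack(
        [np.convolve(col, hrf)[: len(col)] for col in features.T], axis=1
    )
    samples_per_tr = int(round(tr_s * feature_rate_hz))
    usable = n_vols * samples_per_tr
    if conv.shape[0] < usable:
        raise ValueError("annotation time series is shorter than the fMRI run")
    # Group consecutive samples into volumes and average within each group
    return conv[:usable].reshape(n_vols, samples_per_tr, -1).mean(axis=1)


rng = np.random.default_rng(0)
feats = rng.standard_normal((600, 4))  # 60 s of 4 hypothetical features at 10 Hz
X = features_to_tr(feats, feature_rate_hz=10.0, tr_s=2.0, n_vols=30)
print(X.shape)  # (30, 4)
```

Averaging within each TR is the simplest downsampling choice; a finite impulse response model with several time lags is a common alternative when one prefers not to assume a fixed HRF shape.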

This multimodal structure enables researchers to ask new questions:

- How do brain regions synchronize across people exposed to the same story, and how does this synchronization change across sensory conditions?
- Which cortical areas in blind or deaf participants process information that typically belongs to a “missing” modality?
- How are high-level semantic representations distributed when sensory input differs?
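
The first question is usually approached with inter-subject correlation (ISC), a standard measure in naturalistic fMRI: each participant's time course is correlated with the average time course of the other participants who experienced the same stimulus. The minimal sketch below assumes the data have already been preprocessed and averaged within brain regions into arrays of shape (subjects, time points, regions); the function names and the simulated data are hypothetical. The between-group variant correlates each member of one group with another group's average, which is one way to ask, for instance, whether blind and typically developing listeners of the audio-only version share a response time course.

```python
import numpy as np


def _zscore(x: np.ndarray, axis: int) -> np.ndarray:
    # Standardize along `axis` (population std, so mean(z_a * z_b) is Pearson r)
    return (x - x.mean(axis=axis, keepdims=True)) / x.std(axis=axis, keepdims=True)


def leave_one_out_isc(data: np.ndarray) -> np.ndarray:
    """data: (n_subjects, n_timepoints, n_regions) responses to the same stimulus.
    Returns (n_subjects, n_regions): Pearson r between each subject and the
    average of all remaining subjects, region by region."""
    n_subj = data.shape[0]
    z = _zscore(data, axis=1)
    total = z.sum(axis=0)
    isc = np.empty((n_subj, data.shape[2]))
    for s in range(n_subj):
        # Average of everyone except subject s, re-standardized over time
        others = _zscore((total - z[s]) / (n_subj - 1), axis=0)
        isc[s] = (z[s] * others).mean(axis=0)
    return isc


def between_group_isc(group_a: np.ndarray, group_b: np.ndarray) -> np.ndarray:
    """Pearson r between each subject in group_a and the mean time course of
    group_b (same stimulus timing and regions). Returns (n_subjects_a, n_regions)."""
    ref = _zscore(group_b.mean(axis=0), axis=0)
    return (_zscore(group_a, axis=1) * ref).mean(axis=1)


rng = np.random.default_rng(1)
shared = rng.standard_normal((200, 3))  # stimulus-driven signal in 3 regions
group_1 = shared + 0.5 * rng.standard_normal((10, 200, 3))  # 10 simulated subjects
group_2 = shared + 0.5 * rng.standard_normal((8, 200, 3))   # a second simulated group
print(leave_one_out_isc(group_1).mean(axis=0))
print(between_group_isc(group_2, group_1).mean(axis=0))
```

In practice, ISC values are usually tested against a null distribution, for example by circularly shifting or phase-randomizing the time courses, rather than against a fixed threshold.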

Why this dataset matters

This project contributes to several ongoing debates in neuroscience:

- Plasticity and reorganization: How do “visual” or “auditory” brain areas adapt when they never receive their typical inputs?
- Cross-modal integration: Can higher-level cognitive functions, such as language or imagery, recruit sensory cortices in individuals with congenital deprivation?
- Naturalistic neuroscience: Can studying the brain in complex, narrative settings complement traditional, reductionist experiments?

By combining naturalistic stimuli, developmental diversity, and modern computational annotations, the dataset provides a foundation for addressing these questions within a single, shared experimental paradigm.

A resource for the neuroscience and AI communities

Beyond sensory neuroscience, the dataset is also valuable for computational modeling, artificial intelligence, and neuro-AI research. The inclusion of deep neural network descriptors (VGG-19, VGGish) and large language model embeddings (GPT-4) bridges biological and artificial representations, allowing researchers to test how well machine learning models align with human brain responses to rich audiovisual narratives.
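
One common way to test this alignment is a voxelwise encoding model: regress each voxel's (or region's) response on a model's features, then measure how well the fitted model predicts held-out data. The sketch below assumes the features have already been aligned to fMRI volumes (for example by HRF convolution and per-TR averaging) and uses closed-form ridge regression with a fixed, hypothetical penalty `alpha`. Simulated matrices stand in for real VGG-19, VGGish, or GPT-4 features and for measured responses; the function names are illustrative, and this is not an analysis taken from the article.

```python
import numpy as np


def fit_ridge(X: np.ndarray, Y: np.ndarray, alpha: float) -> np.ndarray:
    """Closed-form ridge regression, W = (X^T X + alpha * I)^-1 X^T Y,
    fit jointly for every column (voxel) of Y."""
    n_feat = X.shape[1]
    return np.linalg.solve(X.T @ X + alpha * np.eye(n_feat), X.T @ Y)


def encoding_score(X_train, Y_train, X_test, Y_test, alpha=10.0):
    """Fit a ridge encoding model on training data and return, for each voxel,
    the Pearson r between predicted and measured held-out responses."""
    # Standardize features with training statistics only (no test leakage)
    mu, sd = X_train.mean(axis=0), X_train.std(axis=0)
    Xtr, Xte = (X_train - mu) / sd, (X_test - mu) / sd
    # Center responses on the training mean because the model has no intercept
    y_mu = Y_train.mean(axis=0)
    W = fit_ridge(Xtr, Y_train - y_mu, alpha)
    pred = Xte @ W + y_mu
    pz = (pred - pred.mean(axis=0)) / pred.std(axis=0)
    yz = (Y_test - Y_test.mean(axis=0)) / Y_test.std(axis=0)
    return (pz * yz).mean(axis=0)


rng = np.random.default_rng(0)
X = rng.standard_normal((400, 20))      # 400 TRs x 20 hypothetical model features
W_true = rng.standard_normal((20, 50))  # weights for 50 simulated voxels
Y = X @ W_true + 2.0 * rng.standard_normal((400, 50))
# Contiguous split: fMRI time series are autocorrelated, so random splits leak
r = encoding_score(X[:300], Y[:300], X[300:], Y[300:], alpha=10.0)
print(r.shape)  # (50,)
```

In a real analysis, `alpha` would usually be selected by cross-validation within the training data, and comparing the scores obtained with different feature sets (visual, auditory, semantic) indicates which model best predicts each brain region.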

Open science and collaboration

The entire dataset, including imaging data, annotations, and documentation, is openly available in standard data-sharing formats. Our goal is to foster broad collaboration, from neuroscientists studying perception and development to data scientists, psychologists, and AI researchers interested in bridging human and artificial intelligence.

We hope that this work encourages further exploration of naturalistic, inclusive approaches to brain research — where variability is not noise but information. The diversity of sensory experiences represented here can help redefine what “typical” brain organization means, and how the human mind constructs meaning from the world, with or without certain senses.
