A Prosocial Fake News Intervention with Durable Effects

Published in Social Sciences

A prosocial fake news intervention with durable effects - Scientific Reports

The motivational basis of being prosocial: Research shows that prosocial motivations (compared to egoistic ones) can drive people to work harder, smarter, more safely, and more collaboratively. For instance, in hospitals, healthcare professionals were significantly more likely to follow hand hygiene practices appropriately when reminded of patients’ safety rather than their own. Similarly, in a school setting, students performed better on boring and monotonous tasks when they had prosocial motives for doing them. If prosocial motivations can be such effective drivers of persuasion in so many contexts, why shouldn’t we apply them in the fight against fake news?

Moving beyond individual reasons to spot fake news: Many previous studies focused on news consumers’ individual cognitive capacities to reduce susceptibility to misinformation (e.g., by nudging people to spot fake news, motivating them to build digital competencies, or inoculating them with skills to approach the news with more suspicion). In the present fake news intervention, we turned instead to prosocial motivations and aimed to change participants’ reasons for detecting fake news by showing why it can be (pro)socially important. Rather than putting participants in the inferior position of someone who needs to learn in order to avoid incompetence, we addressed them as digital experts who can contribute to the digital competency of their loved ones.

Hungarian context, an informational autocracy: Our research was conducted in Hungary, an Eastern European country that has been experiencing “democratic backsliding” over the past decades. Systemic disinformation campaigns orchestrated by the illiberal Hungarian government, together with Russia’s soft influence on social media through online astroturfing techniques, pose a danger to democratic institutions. The context is therefore highly relevant, as government propaganda promotes neither analytical thinking in general nor critical news consumption in particular. Yet, despite this pressing relevance, no psychological fake news intervention had been conducted in the country before. Furthermore, family- and security-related values have been reported as the most important ones to respondents ever since values were first measured in Hungary. Focusing on one’s close communities has deep historical roots in Hungarian society, and the sociological literature has reached a strong consensus on the dominance of communal prosocial goals involving family and close friends over more distant and broader societal goals.

This is what happened in the intervention: Our family-oriented prosocial wise intervention was tested among Hungarian young adults (N = 801), with a one-month follow-up, using a behavioral fake news recognition task. Compared to participants in the control condition, participants in the prosocial intervention condition were expected to rate fake news as less accurate and to report weaker intentions to share it, even after controlling for relevant individual differences. We also assessed the effect of the treatment on “bullshit receptivity” scores after controlling for pre-intervention bullshit receptivity scores.

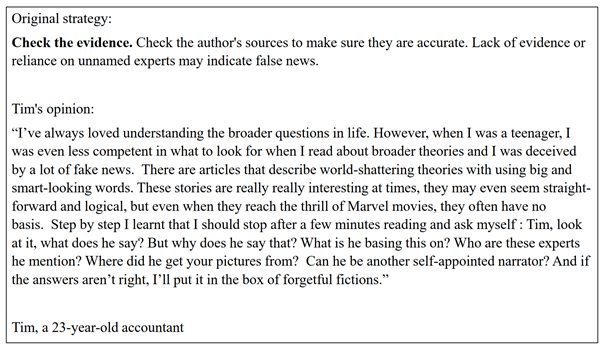

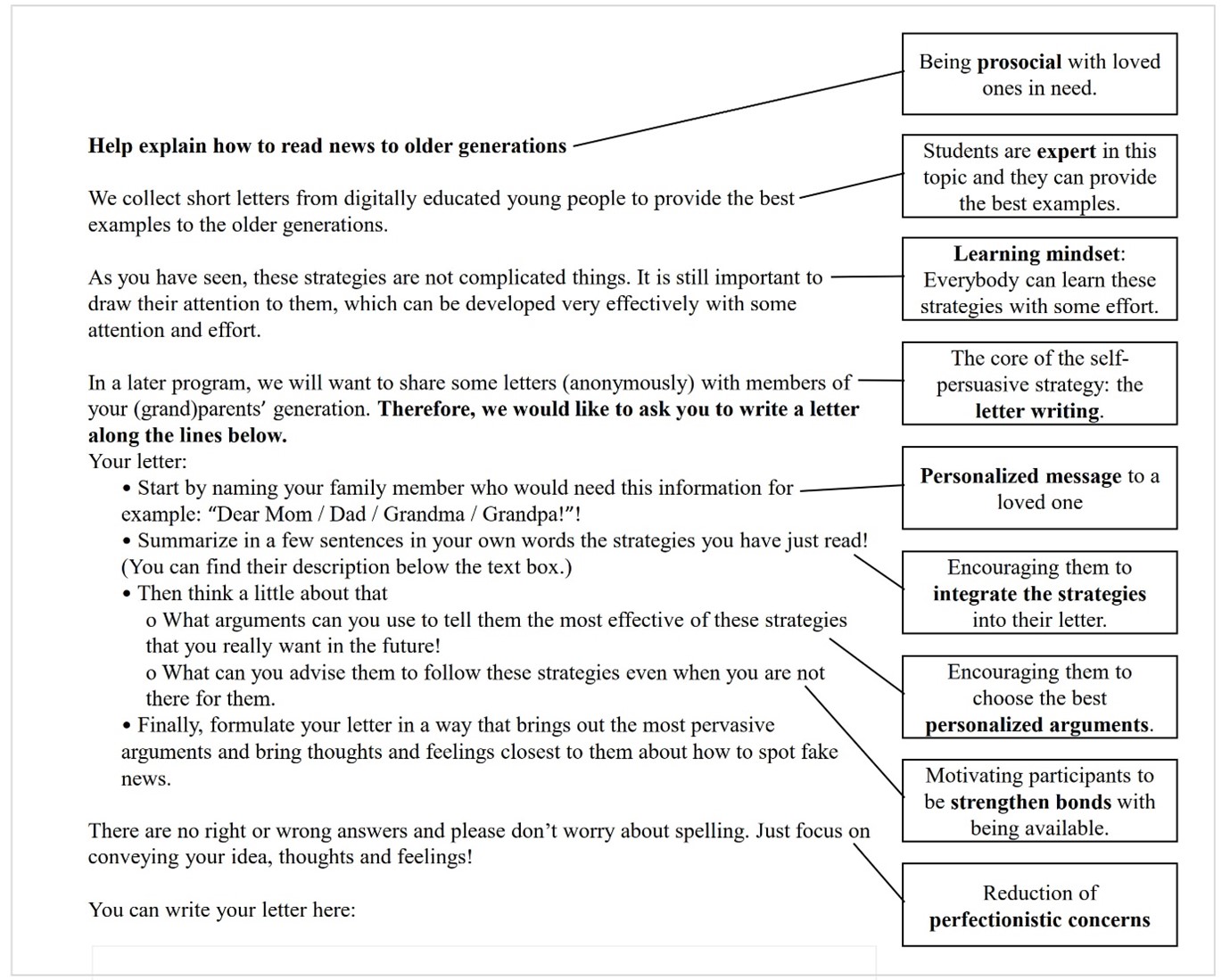

In the intervention material, the survey was framed as a contribution to an online media education program targeting the generations of participants’ parents and grandparents. First, participants reviewed six scientifically supported strategies for spotting online misinformation (all adapted from Guess et al., 2020), each explained through a peer testimonial: being skeptical of headlines; looking beyond fear-mongering; inspecting the source of the news; checking the evidence; triangulating; and considering whether the story is a joke (see an example in Figure 1). Based on prior research, these testimonials were designed to provide normative information about other students’ negative experiences of failing to spot misinformation and positive experiences of identifying it. Second, participants were asked to write a brief letter to a close family member summarizing the six strategies and to reflect on the best arguments and advice that would convince their relatives to apply these strategies in their everyday lives (see Figure 2).

Figure 1. A testimonial explaining one of the six strategies

Figure 2. Psychological mechanisms of the self-persuasive message. After reading the six testimonials, participants wrote a letter to a loved one in which they explained the strategies.

The structure of the control condition was very similar to that of the intervention; however, the topic of fake news did not appear in the control materials, which were framed as an advice-giving task about how to use social media sites such as Facebook appropriately. The materials described practices of older generations that young adults find awkward and provided advice for avoiding these embarrassing behaviors. Participants read six examples of implicit norm violations in social media use (mixing up private messaging with the Facebook News Feed; sending virtual flowers on someone’s timeline for birthdays; using emojis incorrectly; uploading inappropriate profile pictures; anomalies during video chats; sending inappropriate invitations to online games). Then, as in the treatment condition, they were asked to compose a letter to an elderly relative summarizing these practices and sharing their best advice for avoiding these social media behaviors. In sum, the control material concerned appropriate behavior on social media sites without referring to news content, fake news, or misinformation.

The outcome measures: To assess fake news accuracy ratings, we used the protocol of Pennycook and Rand (2019) and asked participants to evaluate the accuracy of eight real and eight fake news items, all carefully pretested and culturally adapted (half with political and half with apolitical content), on four-point scales (not at all accurate / not very accurate / somewhat accurate / very accurate). Fake and real news sharing intentions were measured post-intervention separately for each news item (“Would you consider sharing this story online (for example, through Facebook)?”) with four response options: I never share any content online / no / maybe / yes. Bullshit receptivity was assessed with ten items such as “Interdependence is rooted in ephemeral actions”, rated from 1 (not at all profound) to 5 (very profound). Respondents completed half of the scale pre-intervention, the other half post-intervention, and the full scale again at the follow-up.

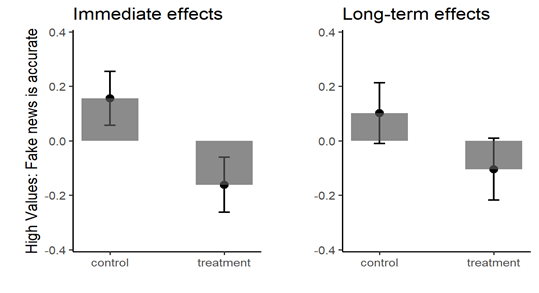

Main results: Overall, relative to the control condition, the intervention produced significant improvements in accuracy ratings both immediately (d = 0.32) and in the long term, at the one-month follow-up (d = 0.22; see Figure 3). However, the treatment produced neither immediate nor long-term changes in sharing intentions relative to the control condition.

Figure 3. Accuracy evaluation of fake news immediately after the intervention (left panel) and four weeks later (right panel)

Notes: Error bars represent standard errors. The y-axis shows standardized fake news accuracy ratings controlled for real news accuracy ratings. Higher scores indicate that fake news was rated as more accurate.
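The figure notes describe fake news accuracy scores “controlled for” real news accuracy ratings without spelling out the computation. One common way to build such a score, sketched below under that assumption, is to regress each participant’s mean fake news accuracy rating on their mean real news accuracy rating and standardize the residuals. The function name `residualized_z` and the toy ratings are hypothetical, and the paper may instead have entered real news accuracy as a covariate in a regression model.

```python
from statistics import mean, pstdev


def residualized_z(fake, real):
    """Z-scored residuals of fake news accuracy after a simple linear
    regression on real news accuracy (an illustrative assumption, not
    necessarily the paper's exact procedure)."""
    mx, my = mean(real), mean(fake)
    # Ordinary least squares slope and intercept for fake ~ real
    sxx = sum((x - mx) ** 2 for x in real)
    sxy = sum((x - mx) * (y - my) for x, y in zip(real, fake))
    slope = sxy / sxx
    intercept = my - slope * mx
    # What remains of fake news accuracy once real news accuracy is accounted for
    residuals = [y - (intercept + slope * x) for x, y in zip(real, fake)]
    # OLS residuals have mean 0, so dividing by their SD yields z-scores
    sd = pstdev(residuals)
    return [r / sd for r in residuals]


# Hypothetical per-participant mean ratings on the 1-4 accuracy scale
real_news = [2.5, 3.0, 3.5, 4.0]
fake_news = [1.5, 2.5, 2.0, 3.0]
print([round(z, 2) for z in residualized_z(fake_news, real_news)])
```

In this sketch the scores are standardized across the whole sample, so condition means can then be compared on a common scale; a participant who rates real news as highly accurate is not penalized merely for rating all news generously.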

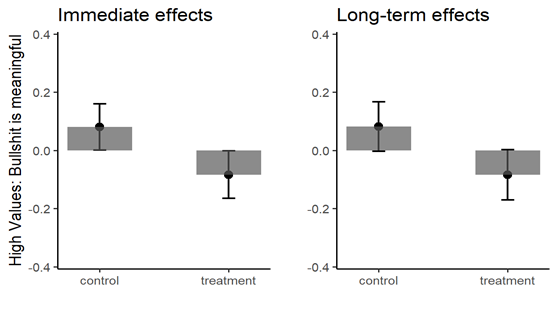

Furthermore, relative to the control condition, the intervention significantly reduced “bullshit receptivity” both immediately (d = 0.16) and at the one-month follow-up (d = 0.17; see Figure 4).

Figure 4. Bullshit receptivity immediately after the intervention (left panel) and four weeks later (right panel)

Notes: Error bars represent standard errors. The y-axis shows standardized “bullshit receptivity” ratings controlled for pre-intervention bullshit receptivity ratings. Higher scores indicate that bullshit statements were rated as more profound.

These short- and long-term effects held after controlling for real news accuracy ratings and for relevant sociodemographic, digital, and psychological individual differences, and the decline in the effects over the month was not significant. All in all, the intervention had a durable impact on the recognition of fake news and on receptivity to “bullshit”, but it did not change fake news sharing intentions in either the short or the long term.
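The effect sizes above are reported as Cohen’s d, the difference between two group means expressed in units of their pooled standard deviation; by Cohen’s conventional benchmarks, values around 0.2 count as small and around 0.5 as medium. The minimal sketch below computes d for two lists of scores with the textbook pooled-variance formula. The function name and the toy scores are hypothetical, and the published estimates may have been derived from covariate-adjusted models rather than from raw group scores.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d = (mean_a - mean_b) / pooled SD, where the pooled variance
    weights each group's sample (n - 1) variance by its degrees of freedom."""
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = ((n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical standardized fake news accuracy scores (lower = better discernment).
# Passing the control group first makes an improvement show up as a positive d.
control = [0.4, 0.1, 0.6, -0.1, 0.3]
intervention = [0.1, -0.3, 0.3, -0.4, 0.0]
print(round(cohens_d(control, intervention), 2))
```

Because lower fake news accuracy ratings are the desirable outcome here, the order of the arguments determines the sign; swapping the groups simply flips it.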

Concluding remarks: Our results show that family-related prosocial values can open new horizons in the fight against misinformation, especially in countries where interdependent values are important, such as Hungary. The present intervention is an example of leveraging family values to motivate people to use their cognitive capacities while consuming news in a context where mainstream media is a vector of seriously problematic news content. Greater vigilance about news veracity could therefore act as a brake on democratic backsliding.
