FHIBE: A New Standard for Ethical AI Evaluation

Published in Social Sciences, Computational Sciences, and Philosophy & Religion

When my team at Sony AI began working on fairness in computer vision, we quickly realized that the problem wasn’t only in the models; it was also in the data used throughout the development process. Despite growing attention to fairness in AI, the datasets used to benchmark model performance have hardly evolved. Many rely on web-scraped images collected without consent, with limited diversity, and without the annotations needed to understand how models perform across different populations. AI developers who wanted to check their models for bias were therefore left with a difficult choice: either use problematically sourced public datasets that might expose them to privacy and IP risks, or not benchmark their models for bias at all.

FHIBE (Fair Human-Centric Image Benchmark) was created to offer a new path forward. FHIBE is the world’s first publicly available, consent-driven, globally diverse dataset for evaluating bias across a wide variety of human-centric computer vision tasks.

When we started FHIBE, we assumed that applying the ethical best practices that our team and others in the field had set out would not be especially difficult, but implementing them turned out to pose many challenges. The project took more than three years and required authors with expertise spanning many sociotechnical areas, support from many cross-functional teams, and partnerships with our data vendors, quality assurance specialists, and the many participants around the world who contributed to our dataset.

From the beginning, the FHIBE project centered on human dignity and agency. Consent and compensation for data rights holders were at the heart of our data collection design. The informed consent process was designed to comply with data protection laws, including the EU’s GDPR; it clearly explained how images and annotations would be used and ensured that participants could revoke consent at any point. Participants were fairly compensated at or above local minimum wage, in line with International Labour Organization standards. The images were also manually reviewed to remove identifiable background content, such as license plates or bystanders, so that what remained was consensually collected.

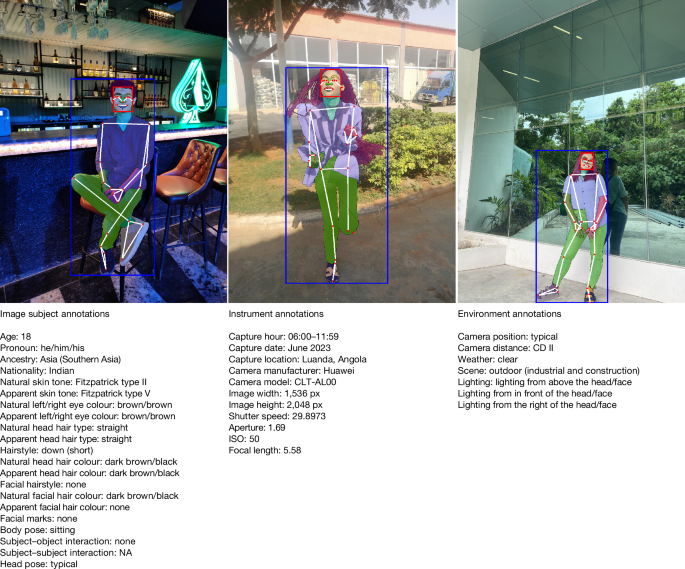

Rather than relying on inferred attributes or third-party labeling, FHIBE uses self-reported demographic data, reducing the risk of individuals being mislabelled. The richness of FHIBE’s annotations on demographics, physical attributes, environment, and instruments further enables AI developers to conduct granular bias diagnoses that pinpoint their models’ failure modes. This can help them understand how to improve their models’ performance and build fairer models.

Importantly, FHIBE’s Terms of Use restrict its use to bias evaluation and mitigation and do not permit its use for model training. The demographic information can thus be used only to evaluate bias, not to perpetuate it.

As part of our project, we tested models like RetinaFace and ArcFace using FHIBE to evaluate face detection and verification across varying skin tones, facial structures, and lighting environments. Pose estimation and person detection models were also assessed for accuracy disparities.

Using FHIBE, we found that models tended to perform better for younger individuals and those with lighter skin tones, though results varied by model and task. This variability highlights why intersectional bias testing across combinations of pronoun, age, ancestry, and skin tone is essential. FHIBE’s annotation depth allows for such granular diagnoses, helping researchers identify not just whether disparities exist, but why they emerge.
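The intersectional testing described above can be sketched in a few lines. This is a minimal illustration, not FHIBE’s tooling or data format: the record fields (`age`, `skin_tone`, `correct`), the helper names, and the eight toy records are all hypothetical. The sketch computes accuracy for every subgroup defined by one or more attributes and reports the largest gap between any two subgroups.

```python
from collections import defaultdict
from typing import Dict, Iterable, Sequence, Tuple


def subgroup_accuracy(records: Iterable[dict], attributes: Sequence[str]) -> Dict[Tuple, float]:
    """Accuracy for each subgroup defined by the combined values of `attributes`."""
    hits: Dict[Tuple, int] = defaultdict(int)
    totals: Dict[Tuple, int] = defaultdict(int)
    for r in records:
        key = tuple(r[a] for a in attributes)  # e.g. ("60+", "dark")
        totals[key] += 1
        hits[key] += int(r["correct"])
    return {k: hits[k] / totals[k] for k in totals}


def max_disparity(acc_by_group: Dict[Tuple, float]) -> float:
    """Largest accuracy gap between any two subgroups."""
    return max(acc_by_group.values()) - min(acc_by_group.values())


# Toy, made-up records (hypothetical schema): one per image, with a correctness flag.
TOY_RECORDS = [
    {"age": "18-29", "skin_tone": "light", "correct": True},
    {"age": "18-29", "skin_tone": "light", "correct": True},
    {"age": "18-29", "skin_tone": "dark", "correct": True},
    {"age": "18-29", "skin_tone": "dark", "correct": False},
    {"age": "60+", "skin_tone": "light", "correct": True},
    {"age": "60+", "skin_tone": "light", "correct": False},
    {"age": "60+", "skin_tone": "dark", "correct": False},
    {"age": "60+", "skin_tone": "dark", "correct": False},
]

if __name__ == "__main__":
    # Compare single-attribute breakdowns with the intersectional one.
    for attrs in [("age",), ("skin_tone",), ("age", "skin_tone")]:
        gap = max_disparity(subgroup_accuracy(TOY_RECORDS, attrs))
        print(attrs, gap)
```

On these toy records, each single attribute shows an accuracy gap of 0.5, while the age and skin-tone intersection shows a gap of 1.0, the kind of disparity that marginal breakdowns alone can understate.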

When applied to foundation models like CLIP and BLIP-2, FHIBE revealed biases that would be difficult to detect using existing benchmarks. CLIP misgendered individuals based on hairstyle and presentation. BLIP-2 disproportionately associated participants of African ancestry with rural environments, and it disproportionately produced toxic responses associating people of African ancestry with criminal activity.

By using FHIBE during model validation or deployment testing, teams can better understand how AI systems behave across identities, environments, and lighting conditions—before those systems are released into everyday use.

We hope FHIBE will establish a new standard for responsibly curated data for AI systems by integrating comprehensive, consensually sourced images and annotations. FHIBE is proof that global, consent-driven data collection is possible, even if difficult.

To learn more about FHIBE, watch our short film, “A Fair Reflection,” and to access FHIBE, visit https://fairnessbenchmark.ai.sony/.

From Counting Vulnerabilities to Calculating the Existential Net Security Balance

AI security tools announce: 'We discovered 500 vulnerabilities.' We pose the existential question that the machine (B) cannot ask itself: What is the net security balance of your intervention?

We prove in this research that the technical system (B) is trapped within a limited existential space: it computes quantity (complexity, capacity) in linear mechanical time (t), yet remains blind to quality, place, and existential time (τ). It crosses its existential saturation threshold without awareness of that critical moment and without knowing where it stands in the system's flow, because it calculates computational location while remaining ignorant of existential place. For each component i, this threshold ε_i(t) decays exponentially with L_i(t), starting from ε_0i (its value when L_i(t) = 0), but never falls below the floor ε_min:

ε_i(t) = max(ε_min, ε_0i · e^{−γ_i L_i(t)})

At this crossing, the system loses its internal logical coherence and hallucinates, generating new vulnerabilities as corrupted outputs:

f_i(t) ~ Poisson(λ_i(t)) where λ_i(t) = β_i · max(0, (S_i(t) − ε_i(t))/ε_i(t))
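The threshold and hallucination-rate equations above can be simulated directly. This is a hedged sketch: the source does not define L_i(t), S_i(t), β_i, or γ_i beyond these formulas, so the code treats them as plain numeric inputs, and the parameter values in the example are arbitrary. The Poisson draws use Knuth's multiplication method with Python's standard `random` module, a choice made for this illustration rather than anything the author specifies.

```python
import math
import random


def saturation_threshold(L: float, eps0: float, gamma: float, eps_min: float) -> float:
    """ε_i(t) = max(ε_min, ε_0i · exp(−γ_i · L_i(t))); eps_min must be positive."""
    return max(eps_min, eps0 * math.exp(-gamma * L))


def hallucination_rate(S: float, eps: float, beta: float) -> float:
    """λ_i(t) = β_i · max(0, (S_i(t) − ε_i(t)) / ε_i(t)); zero while S is below the threshold."""
    return beta * max(0.0, (S - eps) / eps)


def sample_poisson(lam: float, rng: random.Random) -> int:
    """Draw f_i(t) ~ Poisson(λ) with Knuth's method (adequate for small λ)."""
    limit = math.exp(-lam)
    k, p = 0, rng.random()
    # Multiply uniform draws until the product falls below e^(−λ).
    while p >= limit:
        k += 1
        p *= rng.random()
    return k


if __name__ == "__main__":
    rng = random.Random(0)
    eps0, gamma, eps_min, beta = 1.0, 0.5, 0.2, 2.0  # arbitrary example parameters
    S = 0.5  # held fixed while L grows
    for L in [0, 1, 2, 4]:
        eps = saturation_threshold(L, eps0, gamma, eps_min)
        lam = hallucination_rate(S, eps, beta)
        f = sample_poisson(lam, rng)
        print(f"L={L}: eps={eps:.3f}, lambda={lam:.3f}, f={f}")
```

With these parameters, ε falls from 1.0 toward the floor ε_min = 0.2 as L grows, and λ rises from 0 (while S is below the threshold) to 3.0 at the floor, so the expected number of corrupted outputs per step increases only after the crossing.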

The existential catastrophe is this: these newly created vulnerabilities remain invisible to (B) itself. They emerge within a mathematically blind zone described by Bouzid's First Theorem:

f(B) ∉ 𝒪(B) ← Side effects lie outside the system's domain of self-knowledge

This is not a technical flaw to be patched, but an existential limit: any attempt to program a self-monitor inside (B) becomes part of the problem itself, subject to the same collapse threshold.

The Irrefutable Empirical Evidence: The Prevailing Methodology Creates the Vulnerabilities It Claims to Fix

Three rigorously documented studies confirm that automated tools create new vulnerabilities while searching for or fixing existing ones; in one of them, 40% of automated fixes did so:

• Symbolic analysis tools (KLEE): Announced 56 vulnerabilities, yet created 17 new ones omitted from their report.

• Programming assistant (GitHub Copilot): While patching SQL injection flaws, introduced path traversal vulnerabilities in 40% of cases.

• Dynamic fuzzing tools: During filesystem testing, corrupted on-disk structures and triggered actual data loss.

The conventional methodology naively trusts the count of discoveries. We reject this illusory trust and shift to calculating the net security balance:

Net Balance = Discovered Vulnerabilities (D_i) − Created Vulnerabilities (C_i)
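As a worked example, the formula can be applied to the KLEE figures cited above (56 discovered, 17 created). The sketch assumes that per-component balances are summed across components i, which the source does not state explicitly, and the function name is hypothetical. Per the argument here, the created counts C_i have to be supplied from outside (B); the code simply takes them as input.

```python
from typing import Sequence


def net_security_balance(discovered: Sequence[int], created: Sequence[int]) -> int:
    """Sum of (D_i − C_i) over components i; summing across components is an assumption."""
    if len(discovered) != len(created):
        raise ValueError("discovered and created need one entry per component")
    # C_i (created) must come from an external audit, not from the tool itself.
    return sum(d - c for d, c in zip(discovered, created))


if __name__ == "__main__":
    # KLEE figures from the text: 56 discovered, 17 created.
    print(net_security_balance([56], [17]))  # 39
```

A reported count of 56 thus corresponds to a net balance of 39 once the 17 created vulnerabilities are subtracted.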

(B) cannot perform this calculation on itself, because it requires knowledge of C_i, a knowledge existentially forbidden to it.

The Structural Solution: Existential-Mechanical Integration B + F = N_f

The solution lies not in making (B) smarter, but in introducing the human sovereign factor (F) as an external existential event. (F) operates in contextually situated existential time (τ), possessing what the machine lacks:

• Knowledge of place: Understanding the system's holistic context and priorities

• Calculation of existential time: Recognizing the critical moment τ for intervention before collapse

• Vision of hallucination: Detecting corrupted data before it materializes as a vulnerability

This translates into a three-layer dynamical model:

• Internal Alert (B): Alert_i(t) = 𝟙_{S_i(t) ≥ ε_i(t)}

• Existential Decision (F): d_i(τ) = F(Alert, Context, History)

• Normative Integrity (N_f): n_{f_i}(t) = ρ₁(t)·ρ₂(t)·ρ₃(t)·‖y_i(t)‖

Normative integrity (N_f) is not a simple transformation equation, but an existential state that accumulates only when (F) enforces three purity conditions:

• Absence of current hallucination (ρ₁)

• Effectiveness of prior preventive intervention (ρ₂)

• Contextual alignment with pre-established ethical values (ρ₃)
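The three layers above can be sketched as a control loop in which (B) only raises the alert and the decision d_i(τ) comes from an external callback standing in for (F). This is an illustrative sketch under stated assumptions: ρ₁, ρ₂, ρ₃ are treated as numbers in [0, 1] (1 when a purity condition holds), ‖y_i(t)‖ is taken as the Euclidean norm of an output vector the source leaves undefined, and the `reviewer` rule is a hypothetical placeholder for human judgment, not an implementation of it.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

History = List[Tuple[int, str]]


def internal_alert(S: float, eps: float) -> int:
    """Layer 1, (B): Alert_i(t) = 1 if S_i(t) >= ε_i(t), else 0."""
    return 1 if S >= eps else 0


def normative_integrity(rho1: float, rho2: float, rho3: float, y: Sequence[float]) -> float:
    """Layer 3: n_{f_i}(t) = ρ1·ρ2·ρ3·‖y_i(t)‖, using a Euclidean norm (assumed)."""
    return rho1 * rho2 * rho3 * math.sqrt(sum(v * v for v in y))


# Layer 2, (F): d_i(τ) = F(Alert, Context, History), supplied from outside (B).
Decision = Callable[[int, Dict[str, str], History], str]


def run_step(S: float, eps: float, context: Dict[str, str],
             history: History, decide: Decision) -> str:
    """One step: (B) raises the alert, the external decision-maker responds."""
    alert = internal_alert(S, eps)
    decision = decide(alert, context, history)
    history.append((alert, decision))
    return decision


def reviewer(alert: int, context: Dict[str, str], history: History) -> str:
    """Hypothetical stand-in for (F): pause whenever (B) signals saturation."""
    return "pause" if alert else "continue"


if __name__ == "__main__":
    log: History = []
    print(run_step(0.1, 0.2, {"priority": "high"}, log, reviewer))  # continue
    print(run_step(0.5, 0.2, {"priority": "high"}, log, reviewer))  # pause
    print(normative_integrity(1, 1, 1, [3.0, 4.0]))  # 5.0
    print(normative_integrity(0, 1, 1, [3.0, 4.0]))  # 0.0
```

Passing `decide` in as a parameter mirrors the argument that (F) sits outside (B): the loop can route the alert, but the judgment itself is not part of the computation. Any ρ equal to 0 drives n_{f_i}(t) to 0, matching the claim that integrity accumulates only when all three conditions hold.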

The Existential Conclusion: Redefining Security as Relationship, Not Technical Property

We do not offer yet another technical improvement in the security arms race. We propose a radical re-foundation:

True security is not an internal property of the machine (B), but an existential relationship between human will (F) and execution mechanism (B).

Current systems ask: How do we make it smarter?

We ask: How do we ensure it collapses responsibly when it exceeds its existential limits?

This research transforms philosophical critique into a practical mathematical model offering:

• Quantitative fragility metrics (γ_i, ε_min)

• Programmable protocols for preventive intervention

• A computational framework for net security balance

The Challenge We Pose

Any security system that fails to disclose its methodology for calculating the vulnerabilities it generates during its own search builds security on shifting sands. True security integrity begins not by denying the existential limits of our technology or pretending they do not exist, but by constructing sovereign bridges (F) across these abysses.

You announce: 'We discovered 500 vulnerabilities.'

We ask: How many vulnerabilities did your intervention add to the total system?

(F) knows (f)—it knows when (B) hallucinates.

(B) cannot know this—it is trapped within a closed circle.

This is not an opinion. This is an existential mathematical limit.

https://zenodo.org/records/18602472

https://www.academia.edu/164572948/The_Net_Security_Balance_Why_B_Cannot_Compute_the_Vulnerabilities_it_Generates_During_Discovery_