Multi-source Heterogeneous Dataset for Boosting the Expandable Medical Artificial Intelligence

Published in Healthcare & Nursing

With the development of artificial intelligence (AI) and deep learning, automatic disease screening based on fundus imaging has become a popular topic among researchers and clinical practitioners1,2. Many algorithms have been investigated, and some are already used in clinical practice3-5. In ophthalmology, fundus image quality is a key determinant of how well diagnostic models perform, so checking it is an important preliminary step. Image quality assessment (IQA) is therefore vital for automated systems.

The most reliable way to assess image quality is to have doctors review the original images, but this entails a heavy workload. From 2014 to 2016, during my doctoral studies at Zhejiang University, our research group struggled to publish our first IEEE Transactions on Medical Imaging paper6. In that work, we proposed an algorithm capable of selecting images of fair generic quality, which would be especially useful in helping inexperienced operators collect meaningful, interpretable data consistently. We first modified and rebuilt a portable non-mydriatic fundus camera to deliver high image quality, and compared it with a tabletop fundus camera to evaluate its efficacy in detecting retinal diseases. The machine learning algorithm is based on three characteristics of the human visual system, namely multi-channel sensation, just noticeable blur and the contrast sensitivity function, which are used to detect illumination and color distortion, blur, and low-contrast distortion, respectively7. Later, we proposed a new image enhancement method to improve the quality of degraded images8, because degradation caused by ocular aberrations can disguise lesions, so that a diseased eye may be misdiagnosed as normal. This retinal image enhancement was used to help ophthalmologists screen for retinal diseases more efficiently and to support the development of AI-assisted diagnosis9.
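To make the three distortion types above (poor illumination, blur and low contrast) concrete, here is a minimal sketch using much simpler numerical proxies. It is not the human-visual-system method of ref. 7 and does not model color distortion; it swaps in three generic measures, assuming an RGB image scaled to [0, 1]: mean luminance for illumination, RMS contrast (standard deviation of luminance) for contrast, and the variance of a discrete Laplacian for clarity. The function names, the values in `THRESHOLDS`, and the rule that the overall label is 1 only when all three checks pass are hypothetical choices for illustration.

```python
from typing import Optional

import numpy as np

# Rec. 601 luma weights, used here simply as a grayscale conversion.
LUMA = np.array([0.299, 0.587, 0.114])

# Hypothetical thresholds for illustration only; not taken from MSHF or ref. 7.
THRESHOLDS = {"illumination": (0.15, 0.85), "contrast": 0.05, "clarity": 1e-3}


def to_gray(rgb: np.ndarray) -> np.ndarray:
    """Convert an H x W x 3 RGB array with values in [0, 1] to an H x W luminance map."""
    return rgb @ LUMA


def laplacian(gray: np.ndarray) -> np.ndarray:
    """4-neighbour discrete Laplacian, evaluated on interior pixels only."""
    return (gray[:-2, 1:-1] + gray[2:, 1:-1] + gray[1:-1, :-2] + gray[1:-1, 2:]
            - 4.0 * gray[1:-1, 1:-1])


def quality_proxies(rgb: np.ndarray, mask: Optional[np.ndarray] = None) -> dict:
    """Simple proxies for illumination, contrast and clarity.

    mask marks the circular field of view, reducing the influence of the dark
    border of a fundus image; by default every pixel is used.
    """
    gray = to_gray(rgb)
    if mask is None:
        mask = np.ones(gray.shape, dtype=bool)
    roi = gray[mask]
    lap = laplacian(gray)[mask[1:-1, 1:-1]]  # interior pixels inside the mask
    return {
        "illumination": float(roi.mean()),  # mean luminance
        "contrast": float(roi.std()),       # RMS contrast
        "clarity": float(lap.var()),        # Laplacian variance; low values suggest blur
    }


def binary_labels(proxies: dict, t: dict = THRESHOLDS) -> dict:
    """Map the proxies to 0/1 labels; overall is 1 only if all three checks pass."""
    low, high = t["illumination"]
    labels = {
        "illumination": int(low <= proxies["illumination"] <= high),
        "contrast": int(proxies["contrast"] >= t["contrast"]),
        "clarity": int(proxies["clarity"] >= t["clarity"]),
    }
    labels["overall"] = int(all(labels.values()))
    return labels
```

For example, `binary_labels(quality_proxies(np.full((64, 64, 3), 0.5)))` returns `{'illumination': 1, 'contrast': 0, 'clarity': 0, 'overall': 0}`: a uniform mid-grey image is well lit but has no contrast and no detail. Fixed thresholds on hand-picked statistics are brittle across devices, which is part of why learned IQA models trained on labelled datasets are attractive.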

Over the past few years, deep learning-based IQA methods have been developed to score fundus images10. Once a model is trained, it can produce fast, real-time predictions, improving the workflow and optimizing image acquisition, which makes the whole process more efficient. Training a good model, however, requires a well-curated dataset. Several fundus IQA datasets have been released for public use, including DRIMDB, Kaggle DR Image Quality, EyeQ Assessment and DeepDRiD (summarized in Table 1), and many IQA studies have been carried out on them. However, most existing IQA datasets are single-center datasets that do not account for variation in imaging device, eye condition and imaging environment.

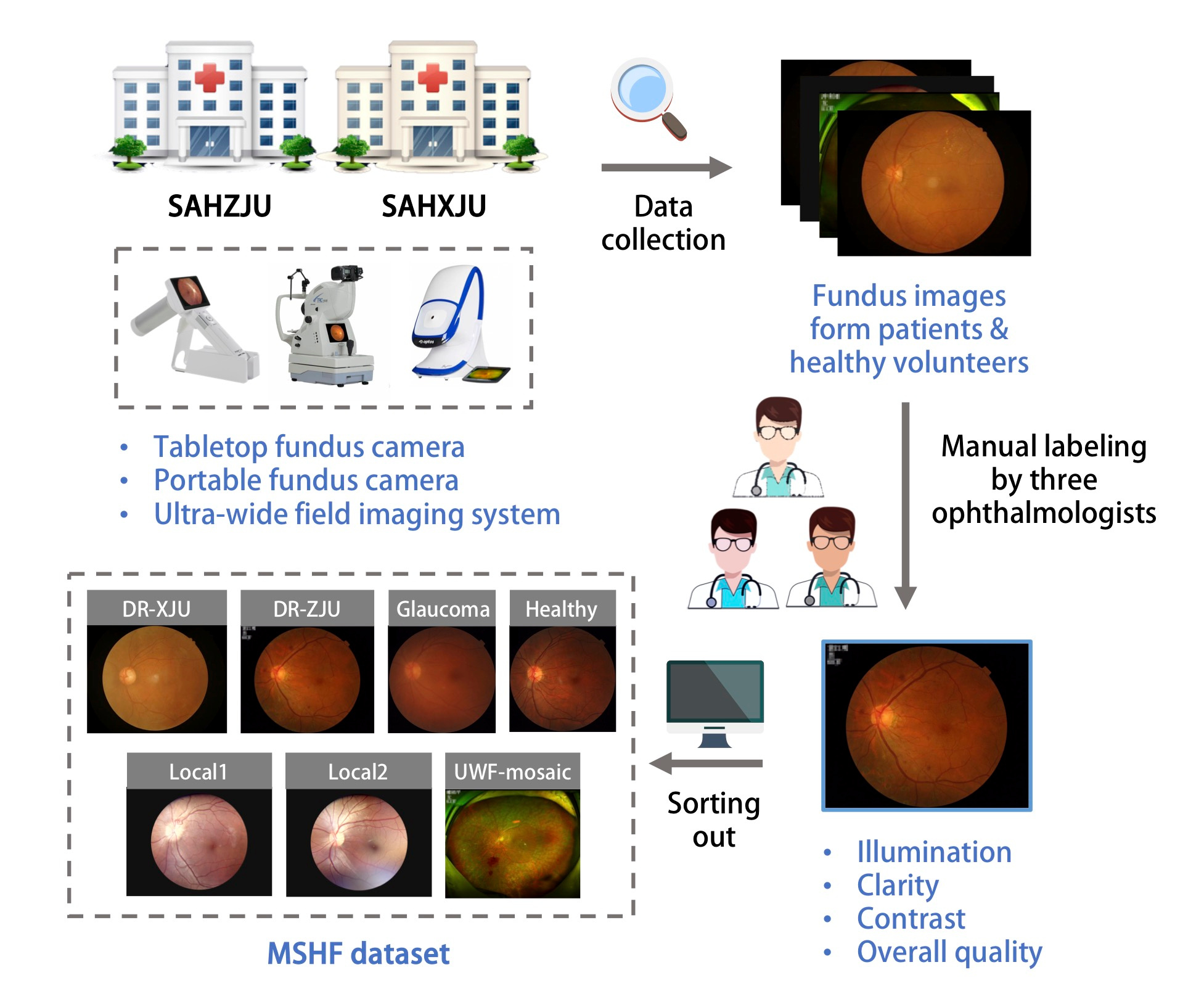

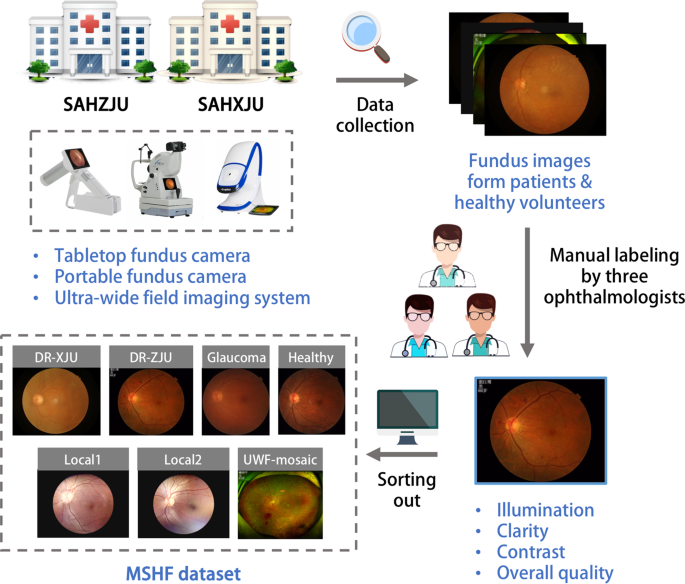

Taking all of these factors into consideration, a public fundus IQA dataset comprising diverse fundus images from patients and healthy volunteers, with detailed quality labels, would be of fundamental importance. In this paper, we propose the multi-source heterogeneous fundus (MSHF) dataset, which contains 500 color fundus photography (CFP) images, 302 portable camera images and 500 ultra-widefield (UWF) images from various source domains, covering patients with diabetic retinopathy (DR) or glaucoma as well as healthy individuals. Each image has four labels: illumination, clarity, contrast and overall quality (Figure 1). We believe that publishing the MSHF dataset will considerably facilitate AI-related fundus IQA research and promote the translation of technology into clinical use.
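As a sketch of how the four 0/1 labels might be handled in code, the block below defines a per-image record, parses a CSV, checks the per-source image counts and reports the share of images labelled good overall. The post does not describe MSHF's file format, so the CSV layout, the column names and the device keys (`CFP`, `portable`, `UWF`) are assumptions; only the label names and the 500/302/500 image counts come from the text.

```python
import csv
import io
from collections import Counter
from dataclasses import dataclass

# The four binary quality labels described for MSHF.
LABELS = ("illumination", "clarity", "contrast", "overall")

# Image counts per source as stated in the text; the device keys are hypothetical.
EXPECTED_COUNTS = {"CFP": 500, "portable": 302, "UWF": 500}


@dataclass(frozen=True)
class QualityRecord:
    image_id: str
    device: str        # one of the hypothetical keys in EXPECTED_COUNTS
    illumination: int  # 0 or 1
    clarity: int       # 0 or 1
    contrast: int      # 0 or 1
    overall: int       # 0 or 1


def load_records(text: str) -> list:
    """Parse a hypothetical CSV with columns image_id, device and the four labels."""
    records = []
    for row in csv.DictReader(io.StringIO(text)):
        labels = {name: int(row[name]) for name in LABELS}
        for name, value in labels.items():
            if value not in (0, 1):
                raise ValueError(f"{row['image_id']}: {name} must be 0 or 1, got {value}")
        records.append(QualityRecord(row["image_id"], row["device"], **labels))
    return records


def count_mismatches(records: list) -> dict:
    """Return {device: (found, expected)} for sources whose image count differs."""
    found = Counter(r.device for r in records)
    return {d: (found[d], n) for d, n in EXPECTED_COUNTS.items() if found[d] != n}


def good_rate_by_device(records: list) -> dict:
    """Fraction of images per device whose overall label is 1."""
    totals, good = Counter(), Counter()
    for r in records:
        totals[r.device] += 1
        good[r.device] += r.overall
    return {d: good[d] / totals[d] for d in totals}


sample = """image_id,device,illumination,clarity,contrast,overall
cfp_001,CFP,1,1,1,1
uwf_001,UWF,1,0,1,0
pc_001,portable,0,1,1,0
pc_002,portable,1,1,1,1
"""
print(good_rate_by_device(load_records(sample)))
# {'CFP': 1.0, 'UWF': 0.0, 'portable': 0.5}
```

Reporting results per source rather than pooled is one way to surface the device heterogeneity that motivates a multi-source dataset.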

Table 1. Summary of publicly available fundus image quality assessment datasets.

| Dataset | Year | Images | Quality labels | Diseases | Annotators |
|---|---|---|---|---|---|
| DRIMDB | 2014 | 216 CFP images | 'good', 'bad' or 'outlier' | DR, non-DR | NA |
| Kaggle DR Image Quality | 2018 | 88,702 CFP images | 'good' or 'poor' for overall quality | DR, non-DR | NA |
| EyeQ Assessment | 2019 | 28,792 CFP images | 'good', 'usable' or 'reject' for overall quality | DR, non-DR | 2 |
| DeepDRiD | 2022 | 2,000 CFP images and 256 UWF images | Scores of 0 to 10 for artifact, clarity and field definition; 0/1 for overall quality | DR, non-DR | 3 |
| MSHF (proposed) | 2022 | 500 CFP images, 500 UWF images and 302 portable camera images | 0/1 for illumination, clarity, contrast and overall quality | DR, glaucoma, healthy | 3 |

Figure 1. An overview of the study approach and methodology.

References

1 Kermany, D. S. et al. Identifying Medical Diagnoses and Treatable Diseases by Image-Based Deep Learning. Cell 172, 1122-1131.e9, doi:10.1016/j.cell.2018.02.010 (2018).

2 Li, J. O. et al. Digital technology, tele-medicine and artificial intelligence in ophthalmology: A global perspective. Progress in Retinal and Eye Research 82, 100900, doi:10.1016/j.preteyeres.2020.100900 (2021).

3 Ting, D. S. W. et al. Development and Validation of a Deep Learning System for Diabetic Retinopathy and Related Eye Diseases Using Retinal Images From Multiethnic Populations With Diabetes. JAMA 318, 2211-2223, doi:10.1001/jama.2017.18152 (2017).

4 De Fauw, J. et al. Clinically applicable deep learning for diagnosis and referral in retinal disease. Nature Medicine 24, 1342-1350, doi:10.1038/s41591-018-0107-6 (2018).

5 Ruamviboonsuk, P. et al. Real-time diabetic retinopathy screening by deep learning in a multisite national screening programme: a prospective interventional cohort study. The Lancet Digital Health 4, e235-e244, doi:10.1016/s2589-7500(22)00017-6 (2022).

6 Jin, K. et al. Telemedicine screening of retinal diseases with a handheld portable non-mydriatic fundus camera. BMC Ophthalmology 17, 89, doi:10.1186/s12886-017-0484-5 (2017).

7 Wang, S. et al. Human Visual System-Based Fundus Image Quality Assessment of Portable Fundus Camera Photographs. IEEE Transactions on Medical Imaging 35, 1046-1055, doi:10.1109/tmi.2015.2506902 (2016).

8 Zhou, M., Jin, K., Wang, S., Ye, J. & Qian, D. Color Retinal Image Enhancement Based on Luminosity and Contrast Adjustment. IEEE Transactions on Biomedical Engineering 65, 521-527, doi:10.1109/tbme.2017.2700627 (2018).

9 Jin, K. et al. Computer-aided diagnosis based on enhancement of degraded fundus photographs. Acta Ophthalmologica 96, e320-e326, doi:10.1111/aos.13573 (2018).

10 Shen, Y. et al. Domain-invariant interpretable fundus image quality assessment. Medical Image Analysis 61, 101654, doi:10.1016/j.media.2020.101654 (2020).
