Planck evidence for a closed Universe and a possible crisis for cosmology

Published in Astronomy

No matter how elegant, beautifully symmetric, or natural your theory is, experimental data have the last word. The history of our understanding of the Universe is no exception to this rule. The retrograde motion of the planets was fundamental to the conception of the Copernican model. Kepler’s discovery that the orbit of Mars is elliptical (and not perfectly circular, as in the Copernican system) paved the way to Newton’s law of universal gravitation. The anomalous rate of precession of Mercury’s perihelion (with respect to Newtonian gravity) provided a spectacular confirmation of General Relativity. And so on: progress in our understanding of the Universe is built on unexpected anomalies.

Interestingly, in recent years significant discrepancies have emerged between the values of cosmological parameters derived from different observables. Most notably, the value of the Hubble constant obtained from measurements of the Cosmic Microwave Background (CMB) anisotropies by the Planck satellite is smaller, by more than four standard deviations, than the value inferred from observations of Type Ia supernovae calibrated with Cepheid variables. While this is a worrying sign for the theory, not all cosmologists are convinced that new physics (rather than systematics) is at the origin of the discrepancy. This is mainly because the model preferred by Planck is entirely consistent with Baryon Acoustic Oscillation (BAO) data from recent galaxy surveys such as BOSS. When two experiments out of three agree, there is, of course, a legitimate suspicion that the anomalous result comes from a still undetected systematic.
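To make "more than four standard deviations" concrete, here is a minimal sketch of the simplest tension estimator: the difference between two measurements divided by their combined uncertainty, assuming both are independent and Gaussian. This is an illustrative assumption, not necessarily the statistic used in the underlying analysis, and the input numbers below are hypothetical values chosen only to show the arithmetic, not published results. The helper names `gaussian_tension` and `two_sided_probability` are likewise hypothetical.

```python
import math


def gaussian_tension(mean_a, sigma_a, mean_b, sigma_b):
    """Number of standard deviations separating two independent Gaussian measurements."""
    # Uncertainties of independent measurements add in quadrature.
    return abs(mean_a - mean_b) / math.hypot(sigma_a, sigma_b)


def two_sided_probability(n_sigma):
    """Probability that a Gaussian variable falls within +/- n_sigma of its mean."""
    return math.erf(n_sigma / math.sqrt(2.0))


# Hypothetical inputs in km/s/Mpc, for illustration only.
h0_cmb, err_cmb = 67.0, 0.5
h0_local, err_local = 74.0, 1.5

t = gaussian_tension(h0_cmb, err_cmb, h0_local, err_local)
print(f"tension: {t:.2f} sigma")
print(f"probability within +/-3 sigma: {two_sided_probability(3.0):.4f}")
```

The second helper shows why "three standard deviations" and "99% c.l." are often quoted side by side: under the Gaussian assumption, a 3σ interval encloses roughly 99.7% of the probability.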

However, the concordance between Planck and BAO is obtained under the assumption of a specific cosmological model, the so-called Lambda-Cold Dark Matter (LCDM) model. The LCDM model (to which this year's Nobel laureate, Prof. James Peebles, contributed significantly) has been hugely successful in explaining most recent experimental results, such as the peaks and dips in the angular power spectrum of the CMB anisotropies. The LCDM model is often regarded as “the standard model” of cosmology.

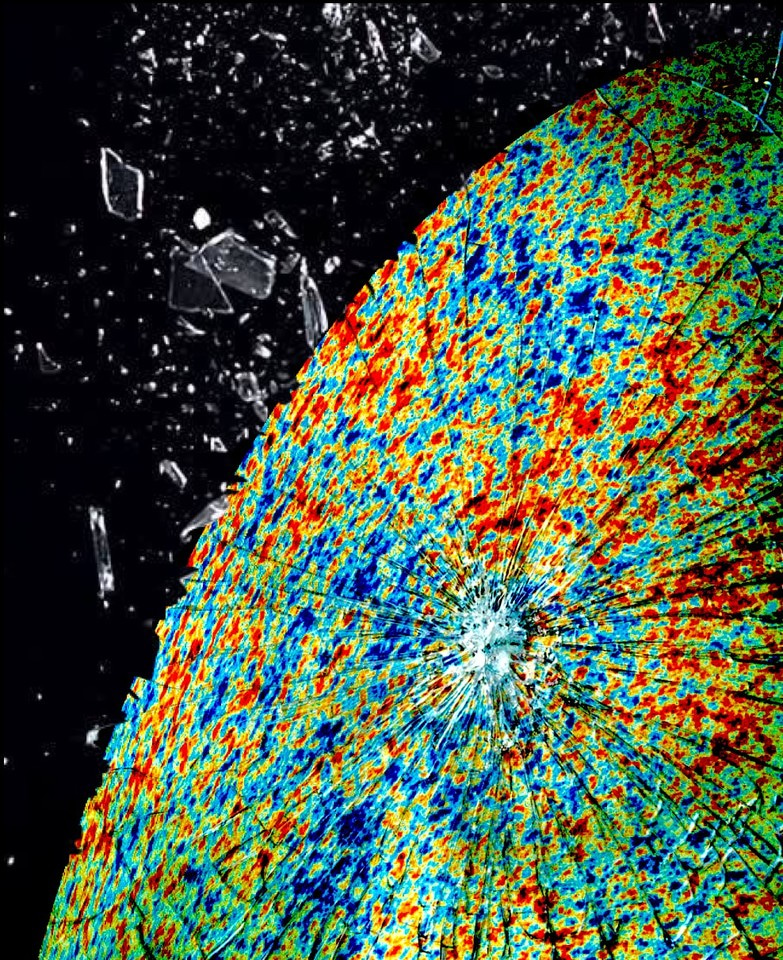

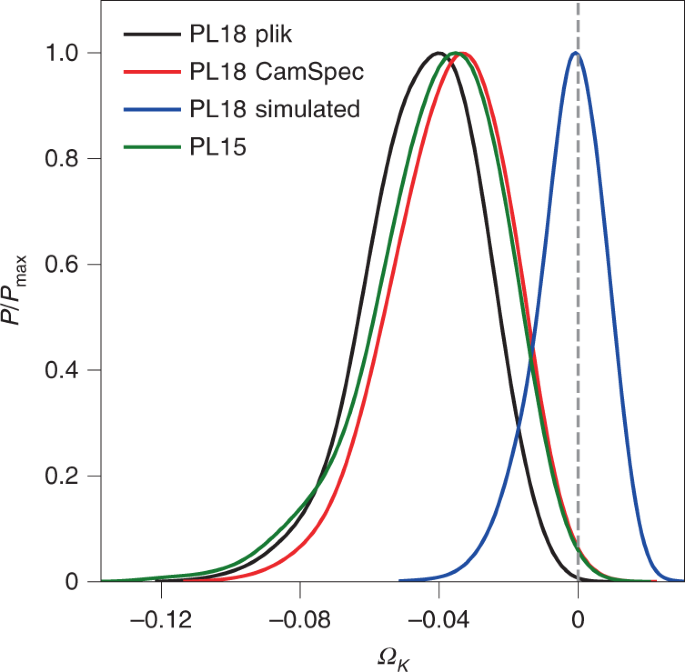

One crucial ingredient of the LCDM model is that the Universe is spatially “flat”, i.e., that the spatial part of the metric is Euclidean. Flatness is indeed one of the major predictions of inflationary theory, which is also needed to solve some critical problems of Friedmann cosmology. Moreover, CMB experiments before Planck were all in agreement with the prediction of flatness. And here comes the exciting new point: the recent Planck 2018 release, while significantly reducing the statistical errors on curvature, disfavors a flat Universe at more than 99% c.l. According to Planck data, the Universe is nearly flat, but about 4% “more curved” than we thought. Yet this 4% is enough to introduce a dramatic tension with the BAO data and with all other current datasets. All BAO data are discordant with Planck at more than three standard deviations, cosmic shear data conflict at the same level, and the tension on the Hubble constant now exceeds five standard deviations.
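For readers who want the geometry behind “flat” and “closed”, the standard textbook relations are sketched below. The FLRW metric carries a curvature constant $k$ ($k = 0$ flat, $k = +1$ closed, $k = -1$ open), and the Friedmann equation evaluated today defines the curvature density parameter $\Omega_k$. Reading the “4%” above as a total density parameter $\Omega_{\rm tot} \approx 1.04$, i.e. $\Omega_k \approx -0.04$, is our loose interpretation of the wording, not a quoted result.

$$
ds^2 = -c^2\,dt^2 + a^2(t)\left[\frac{dr^2}{1 - k r^2} + r^2\,d\Omega^2\right]
$$

$$
\Omega_k \equiv -\frac{k c^2}{a_0^2 H_0^2} = 1 - \Omega_{\rm tot},
\qquad \Omega_{\rm tot} = \Omega_m + \Omega_r + \Omega_\Lambda
$$

A closed Universe ($k = +1$) therefore corresponds to $\Omega_k < 0$, or equivalently $\Omega_{\rm tot} > 1$, while exact flatness means $\Omega_k = 0$.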

In practice, when the assumption of flatness is removed, a cosmological crisis emerges in which disparate observed properties of the Universe appear to be mutually inconsistent. A possible solution to this problem is to raise “by hand” the expected gravitational lensing signal in the CMB data. At the moment, however, no physical model can do this, and such a solution can be described in one word: epicycles.
