Planetary Health in the Age of Artificial Intelligence: A Structural–Ethical Inquiry into Sustainable AI in Healthcare

Published in Sustainability, Computational Sciences, and Public Health

From Medicine to Philosophy: The Beginning of a Question

My journey toward this paper began in the clinic. As a medical doctor, I was fascinated early in my studies by how differently health could be understood—sometimes as the absence of disease, sometimes as balance, sometimes as the flourishing of a whole person in relation to their environment. That diversity of meanings stayed with me, especially as I witnessed how health systems often reduced care to measurable outcomes and technologies.

During my time at the University of Vienna’s Department of Philosophy, I helped organize a conference titled “AI and the Planet in Crisis.” It was at that event that Aimee van Wynsberghe presented her paper on the third wave of AI ethics and the structural turn. The argument, that ethics must move beyond principles like fairness and transparency to examine the socio-technical and ecological structures sustaining AI, deeply resonated with me.

Listening to her, I began to see a bridge between two worlds I knew well: medicine and philosophy. Sustainability, which had become central in environmental and health discourses, could also serve as the link between planetary health and structural AI ethics. I realized that if AI was becoming integral to healthcare, then the ethics of AI could not remain detached from the ecological and systemic conditions of health itself. That realization became the seed of this paper.

Rethinking Health in a Time of Polycrisis

The writing began with an unease about the contradictions of our time. While healthcare systems aim to protect human well-being, they contribute significantly to greenhouse-gas emissions and resource depletion. Meanwhile, AI technologies—celebrated as tools for efficiency and progress—depend on energy-hungry computation and extractive supply chains. I wanted to ask: What does it mean to promote health when our tools of care harm the planet that sustains life?

The idea of planetary health provided the conceptual foundation to explore this question. It reframes human well-being as inseparable from ecological stability. Unlike global health, which focuses on cross-border disease control, planetary health emphasizes operating within planetary boundaries—the climatic and biological limits necessary for life to flourish.

This framework transformed my perspective on AI in medicine. It was no longer enough to evaluate AI as an instrument that improves diagnosis or efficiency. We also needed to examine its environmental cost, its labor implications, and the power structures embedded in its infrastructures.

From Public Health to Planetary Health

Tracing the history of health systems helped clarify this trajectory. Public health arose to manage local epidemics and sanitation; global health addressed inequalities between nations; One Health recognized the interconnectedness of humans, animals, and ecosystems. Planetary health extended these ideas by embedding them within ecological limits and justice concerns.

For me, what distinguishes planetary health is its insistence that sustainability is not an optional goal but a moral and operational prerequisite for any healthcare system. This insight reframed my understanding of AI: if the health sector is responsible for planetary well-being, then the digital infrastructures supporting it must also meet ecological and ethical standards.

The Structural–Ethical Perspective

The encounter with van Wynsberghe and Bolte’s work gave me a vocabulary to articulate this insight. Their notion of a structural turn in AI ethics argues that ethical evaluation must include the material, political, and ecological systems in which technologies are embedded.

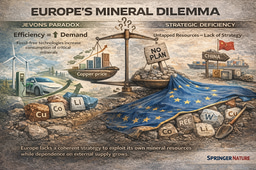

Applying that perspective to healthcare revealed that AI is not a neutral instrument but a structural actor—it shapes clinical priorities, redistributes resources, and influences who receives care and who does not. Moreover, its material existence—servers, data centers, rare-earth minerals—binds it to planetary processes.

This realization turned the paper into a dialogue between planetary health and sustainable AI. I began to see AI as both a potential solution and a source of unsustainability, demanding a more systemic and justice-oriented approach.

Between Promise and Paradox

AI offers extraordinary possibilities for sustainability in healthcare. Predictive models can forecast disease outbreaks and improve prevention. Smart hospital systems can reduce energy consumption. Circular supply chains, aided by AI analytics, can minimize medical waste.

Yet behind these successes lie paradoxes. Training large models consumes vast amounts of electricity. Data centers often rely on carbon-intensive grids. Rare minerals used in AI hardware are frequently mined under exploitative conditions. The result is an ethical contradiction: we use energy-hungry algorithms to solve the consequences of an energy-hungry world.

This contradiction became the central ethical tension of the paper: Can AI genuinely advance planetary health without reproducing the very harms it aims to address?

Justice at the Core of Sustainability

To answer it, I adopted what I call a structural–ethical approach to sustainable AI. It moves beyond counting emissions to ask whose environments, bodies, and futures bear the costs of AI innovation.

For example, telemedicine may reduce travel emissions, yet it increases digital energy use and may exclude populations without reliable internet access. Efficiency gains in one context may create new inequities in another. True sustainability, I argue, must therefore be justice-centered—addressing distributive, procedural, and recognition justice alike.

This means ensuring equitable access to low-energy AI tools, fair labor conditions in data supply chains, and participatory governance where communities have a voice in technological decisions that affect their health.

Governance for a Planetary Future

Sustainable AI cannot rely on voluntary ethics or corporate responsibility alone. It requires governance architectures that align innovation with ecological limits. Most current frameworks, including the EU AI Act, still prioritize innovation and safety over environmental impact.

Drawing on planetary health ethics, I proposed that AI governance incorporate lifecycle accountability—from resource extraction to e-waste disposal—and embed sustainability metrics similar to environmental, social, and governance (ESG) criteria. Moreover, governance must become participatory: clinicians, engineers, ethicists, and affected communities should co-shape how AI is deployed in healthcare.

Such reforms would turn sustainability from a compliance target into a normative lens guiding the design and regulation of digital health systems.

Writing Between Two Worlds

Writing this paper felt like moving between two intellectual homes. In medicine, I had learned to value precision, outcomes, and practicality. In philosophy, I learned to question assumptions, expose structures, and seek meaning. Bridging these traditions allowed me to treat AI not merely as a technical artifact but as part of the moral and ecological fabric of healthcare.

The process also brought a personal realization. I began to see the practice of medicine itself as an ecological act, one that should heal without harming. Planetary health and structural AI ethics together offer a way to reimagine what it means to care: not only for patients but for the planet that makes healing possible.

Looking Ahead

This research is only a beginning. Future work must develop lifecycle metrics for AI, justice-oriented governance models, and ways to embed planetary health principles directly into AI design.

Ultimately, the question that guided me as a physician remains the same as the one I now ask as a philosopher: How can care endure? To ensure that both humanity and the planet thrive, our technologies must learn to care, too—sustainably, justly, and within the limits of the world that gives them life.


AI & SOCIETY

This journal focuses on societal issues including the design, use, management, and policy of information, communications and new media technologies, with a particular emphasis on cultural, social, cognitive, economic, ethical, and philosophical implications.


The notion of AI as a structural actor, as opposed to a para-human agent, changes the conversation in a very meaningful way. We should worry less about whether AI will out-think humans and more about how to balance its benefits against how it (and the corporate structure behind it) affects the planet. And the (non-)regulation of AI, at least here in the US, is certainly not centered on justice.