Temporal Dendritic Heterogeneity Incorporated with Spiking Neural Networks for Learning Multi-timescale Dynamics

Published in Computational Sciences, Mathematical & Computational Engineering Applications, and Mathematics

Dynamic Attributes of Spiking Neural Networks

Inspired by the intricate structures and functions of neural circuits in the brain, spiking neural networks (SNNs) have emerged as the third generation of artificial neural networks (ANNs). Research on SNNs has extensively considered biological phenomena such as neural dynamics, connection patterns, coding schemes, and processing flows. Owing to their dynamic attributes, SNNs are widely believed to be capable of processing temporal information. In recent years, however, most SNN studies have focused on image recognition tasks, especially after accuracy was boosted by borrowing the backpropagation through time (BPTT) learning algorithm from the ANN domain. Two questions remain largely unexplored: which mechanisms contribute to the learning ability of SNNs, and how their rich dynamic properties can be exploited to satisfactorily solve complex temporal computing tasks.

Inspiration from the Brain

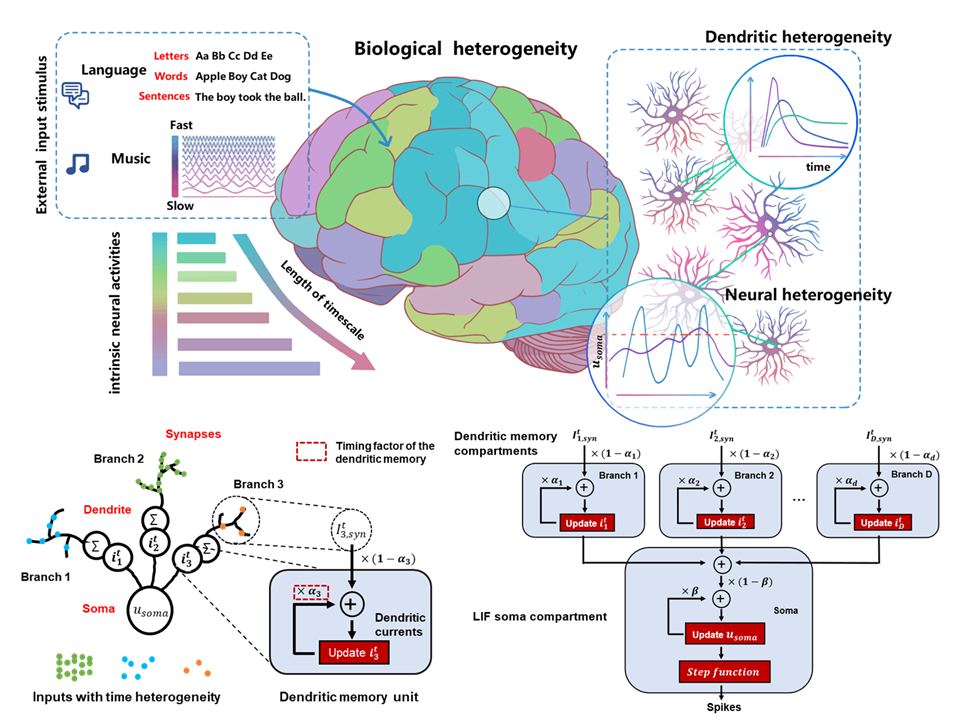

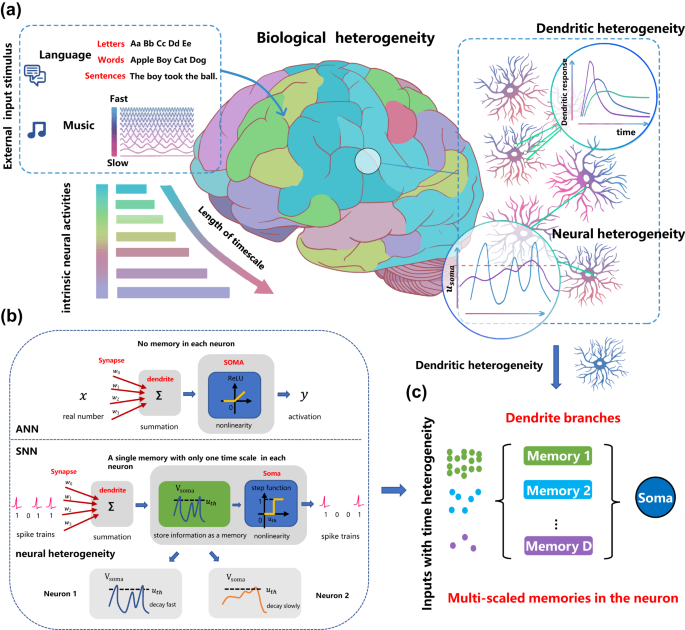

The brain adeptly handles complex temporal signals with variable timescales and high spectral richness. For example, it can easily recognize a speaker whether they speak fast or slowly. Neuroscientists have identified significant temporal heterogeneity in brain circuits, encompassing neural, dendritic, and synaptic heterogeneity. It seems plausible that these kinds of heterogeneity are more than noise and may provide the capability to capture and process multi-timescale temporal features. Computational neuroscientists have studied the temporal computing capabilities of dendrites, as inferred from many biophysical phenomena. They have proposed neuron models or fabricated dendrite-like nanoscale devices to mimic biological behaviors. Biological dendrites suggest several advanced computational functions that may benefit neural networks in machine learning: local nonlinear transformation, adjustment of synaptic learning rules, multiplexing of different sources of neural signals, and generation of multi-timescale dynamics.

The Gap between Theoretical Understanding and Practical Application

While biological observations offer valuable insights, applying them to real-world complex temporal computing tasks performed with neural networks is challenging at the current stage. This difficulty arises from inappropriate abstraction, high computational complexity, and the lack of effective learning algorithms. In addition, most existing SNNs for real-world temporal computing tasks adopt a simplified version of the leaky integrate-and-fire (LIF) neuron, which cannot sufficiently exploit rich temporal heterogeneity. A few researchers have explored neural heterogeneity by learning membrane and synaptic time constants. However, they ignored dendritic heterogeneity, which we consider of great importance. We found that considering neural heterogeneity alone makes it hard to deliver satisfactory results on temporal computing tasks, because the resulting multi-timescale neural dynamics are insufficient. Explicit and comprehensive studies on two questions are currently lacking: how to incorporate temporal dendritic heterogeneity into a general SNN model for effective use in real-world temporal computing tasks, and how it works. In light of these limitations, our work explores dendritic heterogeneity as a more effective and efficient alternative in practice.
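
As a rough illustration of what "learning membrane time constants" means, the sketch below steps a layer of plain LIF neurons in discrete time. Each neuron derives its decay factor from its own parameter. This is a minimal sketch under our own simplifying assumptions (a sigmoid parametrization of the decay factor, a soft reset, and a default threshold of 1.0), not the exact formulation used in the paper. The names `lif_layer_step` and `tau_params` are hypothetical.

```python
import math


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def lif_layer_step(u, s_prev, inputs, weights, tau_params, v_th=1.0):
    """One discrete-time step of a layer of LIF neurons (illustrative sketch).

    u          -- membrane potentials, one per neuron
    s_prev     -- spikes (0/1) emitted by each neuron at the previous step
    inputs     -- presynaptic spikes (0/1) at this step
    weights    -- weights[n][j]: synaptic weight from input j to neuron n
    tau_params -- hypothetical per-neuron raw parameters; beta_n = sigmoid(tau_n)
                  is neuron n's decay factor, so each neuron has its own timescale
    """
    u_new, s_new = [], []
    for n, row in enumerate(weights):
        beta = sigmoid(tau_params[n])
        current = sum(w * x for w, x in zip(row, inputs))
        # Leaky integration plus a soft reset driven by the previous spike.
        v = beta * u[n] + (1.0 - beta) * current - v_th * s_prev[n]
        u_new.append(v)
        s_new.append(1 if v >= v_th else 0)
    return u_new, s_new
```

Give two otherwise identical neurons different `tau_params` (for instance -2.0 and 2.0). One of them forgets an input pulse within a few steps, while the other retains a trace of it. Per-neuron timescales of this kind are the neural heterogeneity discussed above.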

The DH-SNN Model

To address these challenges, we propose a novel LIF neuron model with temporal dendritic heterogeneity that also covers neural heterogeneity, termed DH-LIF. We then extend the neuron model to the network level, termed DH-SNNs. These include both networks with only feedforward connections (DH-SFNNs) and networks with recurrent connections (DH-SRNNs). We derive the explicit form of the learning method for DH-SNNs based on the emerging high-performance BPTT algorithm for ordinary SNNs. DH-SNNs adaptively learn heterogeneous timing factors on different dendritic branches of the same neuron and on different neurons. This allows them to generate multi-timescale temporal dynamics that capture features at different timescales. To reveal the underlying working mechanism, we design a temporal spiking XOR problem. We find that three forms of feature integration have similar and synergistic effects in capturing multi-timescale temporal features: inter-branch integration within a neuron, inter-neuron integration within a recurrent layer, and inter-layer integration within a network. We evaluate DH-SNNs on extensive temporal computing benchmarks for speech recognition (SHD and SSC), visual recognition ((P)S-MNIST), EEG signal recognition (DEAP), and robot place recognition. Compared to ordinary SNNs, DH-SNNs achieve comprehensive performance benefits, including the best reported accuracy along with promising robustness and generalization. With an additional sparsity constraint on dendritic connections, DH-SNNs achieve high model compactness and high execution efficiency on neuromorphic hardware. This work suggests that the temporal dendritic heterogeneity observed in the brain is a critical component in learning multi-timescale temporal dynamics, shedding light on a promising route for SNN modeling in complex temporal computing tasks.
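
To make per-branch timing factors concrete, here is a minimal forward-pass sketch of a single neuron with several dendritic branches. It rests on three assumptions. First, each branch low-pass filters its own inputs with a decay factor `alpha_d = sigmoid(tau_d)`. Second, the soma leaks with its own factor `beta`. Third, the sparse restriction routes every input to exactly one branch. These update equations, the class `DHLIFNeuron`, and the helper `make_branch_mask` are illustrative assumptions, not the published DH-LIF equations or code. Training is omitted: learning `branch_tau` and `soma_tau` with BPTT would additionally need an autodiff framework and a surrogate gradient for the non-differentiable spike.

```python
import math
import random


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def make_branch_mask(n_inputs, n_branches, seed=0):
    """Hypothetical sparse dendritic connection pattern: each input is routed
    to exactly one randomly chosen branch (mask[d][j] is 0 or 1)."""
    rng = random.Random(seed)
    mask = [[0] * n_inputs for _ in range(n_branches)]
    for j in range(n_inputs):
        mask[rng.randrange(n_branches)][j] = 1
    return mask


class DHLIFNeuron:
    """Illustrative neuron with per-branch decay factors (dendritic
    heterogeneity) and its own somatic decay (neural heterogeneity)."""

    def __init__(self, weights, mask, branch_tau, soma_tau, v_th=1.0):
        self.w = weights                                # weights[d][j]: input j -> branch d
        self.mask = mask                                # fixed sparse connection pattern
        self.alpha = [sigmoid(t) for t in branch_tau]   # per-branch decay factors
        self.beta = sigmoid(soma_tau)                   # somatic decay factor
        self.v_th = v_th
        self.i = [0.0] * len(weights)                   # dendritic branch currents
        self.u = 0.0                                    # membrane potential
        self.s = 0                                      # spike from the previous step

    def step(self, x):
        for d, a in enumerate(self.alpha):
            drive = sum(m * w * xj for m, w, xj in zip(self.mask[d], self.w[d], x))
            # Each branch filters only its own inputs, at its own timescale.
            self.i[d] = a * self.i[d] + (1.0 - a) * drive
        # The soma integrates the summed branch currents; soft reset after a spike.
        self.u = self.beta * self.u + (1.0 - self.beta) * sum(self.i) - self.v_th * self.s
        self.s = 1 if self.u >= self.v_th else 0
        return self.s
```

Suppose one input is routed to a fast branch (`branch_tau = -2.0`) and another to a slow branch (`branch_tau = 2.0`). A single input pulse then fades from the fast branch within a few steps but persists on the slow one, so the same soma sees the input at two timescales.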

In the Future

An interesting topic for future work is improving the model itself. One possible way is to build DH-SNNs on more complicated spiking neuron models than LIF. For example, neuron models that capture more of the dendritic properties found in biological neurons seem promising. However, naively imitating biological neurons may not improve the performance of neural networks on practical tasks. It may even be harmful under the current intelligence framework, because describing dendritic behaviors requires complicated equations with massive numbers of hyper-parameters. Careful abstraction of dendritic properties is crucial for success. Another potential direction is to explore learnable dendritic connection patterns. In contrast to the fixed dendritic connection pattern in our model, biological neural networks exhibit evolving connections on dendrites. Drawing inspiration from this phenomenon, we can investigate adapting the connection pattern during learning. Moreover, optimization methods and benchmarking tasks will be critical for mining the potential of neuron models. While our current focus is on demonstrating the effectiveness of temporal dendritic heterogeneity, future enhancements could involve introducing convolutional layers and optimization techniques such as activity normalization.

Biological neurons have many inherent constraints. However, our research prioritizes effectively integrating biological observations into computational models to address real-world computing tasks, rather than strictly adhering to all biological principles. Departures from biological fidelity are often necessary to keep model performance from degrading during training with complicated neural dynamics and biological details. Balancing performance on practical tasks against biological plausibility is very hard. Innovations in learning algorithms offer promising potential to realize this balance, which is an interesting topic for future work.

Follow the Topic

Nature Communications

An open access, multidisciplinary journal dedicated to publishing high-quality research in all areas of the biological, health, physical, chemical and Earth sciences.
