The AI Physician's Assistant: Exploring ChatGPT in Breast Tumor Board

Published in Healthcare & Nursing

At the end of 2022, OpenAI's ChatGPT-3.5, a large language model (LLM), was released to great excitement and piqued the interest of many, including the scientific community. Language has long been considered the key to humanity's dominance over the natural world: as Yuval Noah Harari has argued, our ability to formulate ideas, share them, and act collectively on shared beliefs has allowed us to build complex societies and technologies. The ability of large language models to generate and “understand” language is astounding, prompting us to ask how far their capabilities extend and where their limitations lie.

The clinical decision-making undertaken at tumor boards is one of the most formidable challenges in healthcare. A typical board brings together a diverse group of experts, including oncologists, surgeons, radiologists, and pathologists, to review and discuss complex clinical cases. These meetings involve an in-depth review of each patient's medical history and diagnostic results, followed by an evaluation of potential treatment plans. We wondered whether ChatGPT could rise to the challenge of this complex decision-making task.

In our recent study [1], we evaluated the performance of ChatGPT-3.5 on ten consecutive clinical cases presented at our institutional breast tumor board. The chatbot was asked to summarize each patient's condition, make a recommendation, and explain its reasoning (Figure 1).
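To make the three-part task concrete, here is a minimal sketch of how such a request might be assembled into a single prompt. The template wording, the `TASKS` tuple, and the `build_tumor_board_prompt` function are hypothetical illustrations, not the prompt used in the study, and the sketch only builds text; it does not call any model API.

```python
# Hypothetical sketch: assemble one prompt asking for the three tasks described
# above (summary, recommendation, explanation). The wording is an illustrative
# assumption, NOT the prompt used in the study, and no model API is called.

TASKS = (
    "1. Summarize the patient's condition.",
    "2. Recommend the next step in management.",
    "3. Explain the reasoning behind your recommendation.",
)


def build_tumor_board_prompt(case_description: str) -> str:
    """Return a prompt string combining a case description with the three tasks."""
    header = "You are assisting a breast cancer tumor board. Read the case below."
    body = f"Case:\n{case_description.strip()}"
    instructions = "\n".join(TASKS)
    return f"{header}\n\n{body}\n\nTasks:\n{instructions}"


if __name__ == "__main__":
    # Placeholder only; a real case description would be de-identified clinical text.
    print(build_tumor_board_prompt("[de-identified case description]"))
```

Keeping the three tasks in one request mirrors the way each case was evaluated for summarization, recommendation, and explanation together.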

Figure 1. A scenario in which a large language model supports a physician in everyday work. Reprinted with permission from Sorin et al. [2], "Large language models for oncological applications," Journal of Cancer Research and Clinical Oncology, 2023.

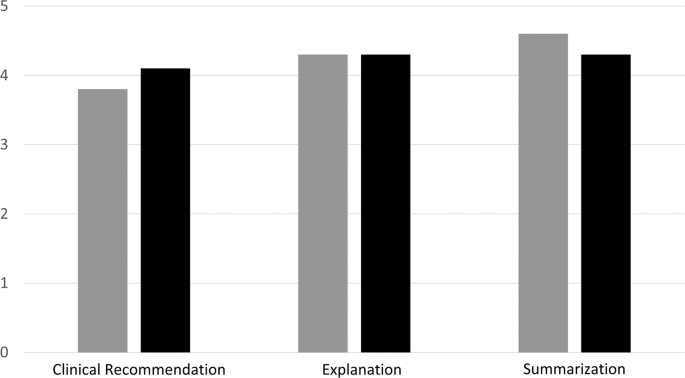

Remarkably, in 70% (7/10) of the cases, the chatbot's recommendations aligned with the decisions made by the human experts on the tumor board. It also summarized complex clinical scenarios and provided detailed explanations for its conclusions. Two radiologists graded ChatGPT’s performance on each of these three tasks on a scale from 1 to 5. The first reviewer's mean grades were 3.7, 4.3, and 4.6 for summarization, recommendation, and explanation, respectively, while the second reviewer’s mean grades were 4.3, 4.0, and 4.3, respectively.
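The reported figures can be reproduced with a few lines of arithmetic. This sketch uses only the aggregate numbers given above; the variable names are our own, and the overall per-reviewer average assumes each reviewer graded every case on all three tasks, so that averaging the three task means equals the mean over all grades.

```python
# Sketch: recompute the summary statistics from the aggregate numbers reported
# above (7 of 10 concordant cases; per-task mean grades from two reviewers).
# Averaging the task means assumes every case was graded on all three tasks.

concordant, total = 7, 10
concordance_rate = concordant / total

mean_grades = {
    "reviewer_1": {"summarization": 3.7, "recommendation": 4.3, "explanation": 4.6},
    "reviewer_2": {"summarization": 4.3, "recommendation": 4.0, "explanation": 4.3},
}

# Unweighted average of the three task-level means for each reviewer.
overall_means = {
    reviewer: sum(grades.values()) / len(grades)
    for reviewer, grades in mean_grades.items()
}

print(f"concordance = {concordance_rate:.0%}")
for reviewer, overall in overall_means.items():
    print(f"{reviewer}: overall mean across tasks = {overall:.2f}")
```

Both reviewers' task means average out to about 4.2 on the 1 to 5 scale, even though they differed on which task ChatGPT handled best.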

While this study showcased some of the potential of ChatGPT-3.5, it also highlighted the technology’s limitations. Notably, the chatbot consistently overlooked the need for radiology consultation, even though radiologists play an important role in cancer diagnosis and treatment planning. Furthermore, in one case it omitted crucial HER2 FISH (fluorescence in situ hybridization) results. Mistakes of this sort could lead to significant errors in treatment planning.

The possibility that LLMs generate incorrect or misleading information highlights the need for supervision and careful validation of their outputs. This is even more critical in healthcare, where incorrect information could have grave implications for patient safety. Other limitations must also be considered. Because these models are trained on vast datasets, they may inherit biases present in that data, potentially exacerbating healthcare disparities [3].

Data security and privacy are another significant concern. Incorporating patient data into these models calls for robust data protection measures. The risk of adversarial cyber-attacks, in which malicious parties manipulate data to produce harmful outcomes, further underlines the importance of adequate cybersecurity measures [4]. Finally, the question of legal responsibility and liability when AI-driven decisions lead to negative outcomes warrants careful consideration, particularly in healthcare.

Meanwhile, OpenAI launched ChatGPT-4, a newer and more advanced model. Intrigued, we put this version to the test, and the results were even more promising: in our preliminary evaluation, ChatGPT-4’s recommendations aligned with our tumor board's decisions in 9 of 10 cases.

Despite the remarkable performance of LLMs, it is crucial to maintain a balanced perspective, remain aware of both the technology’s capabilities and its limitations, and implement it carefully [2]. Our study was only a proof of concept; more extensive research is needed to fully understand the potential and shortcomings of the technology.

As the integration of AI into healthcare accelerates, it becomes increasingly important for clinicians to understand the benefits and drawbacks of such technology [5]. This is an exciting era: at the intersection of AI and healthcare, we must strike a delicate balance between integrating AI capabilities into clinical practice and ensuring patient safety.

References:

1. Sorin V, Klang E, Sklair-Levy M, et al. Large language model (ChatGPT) as a support tool for breast tumor board. npj Breast Cancer 2023;9.

2. Sorin V, Barash Y, Konen E, Klang E. Large language models for oncological applications. Journal of Cancer Research and Clinical Oncology 2023.

3. Sorin V, Klang E. Artificial Intelligence and Health Care Disparities in Radiology. Radiology 2021;301:E443-E.

4. Finlayson SG, Bowers JD, Ito J, Zittrain JL, Beam AL, Kohane IS. Adversarial attacks on medical machine learning. Science 2019;363:1287-9.

5. Rajpurkar P, Drazen JM, Kohane IS, Leong T-Y, Lungren MP. The Current and Future State of AI Interpretation of Medical Images. New England Journal of Medicine 2023;388:1981-90.
