What’s in a Name? The Perils of Using Name-Based Recognition Software

Published in Social Sciences

When we started, we thought this data quality check might end up as a paragraph in some methods section or appendix; we certainly did not expect it to become its own paper in Nature Human Behaviour. And yet it did.

We wanted to study demographics and scientific authorship, that is, how publications relate to the people who write them. Publication data generally doesn’t come with demographic variables, so most research on this topic uses automated imputation tools to guess authors’ gender and race/ethnicity from their names, which are readily available. Because we were also interested in other characteristics (e.g. sexuality, disability, class background), we had to run a survey that asked authors about their demographics directly. Others before us, especially trans scholars, had argued that this is a more ethical and valid measure of gender than imputation. With nearly 20,000 survey responses covering a wide range of author characteristics, we had an opportunity to test the validity of name-based imputation tools at a scale no one had before.

These tools are hugely popular, not just in bibliometrics research but also in diversity efforts, corporate marketing, and political campaigning. Really, they’re deployed anywhere people have large amounts of data with names but not traditional demographic variables like gender and race, including social media platforms, newspaper archives, and campaign contribution lists. So using these tools correctly matters!

Using our survey of 19,924 authors of social science journal articles, we examined gender and racial misclassification by name-based demographic inference algorithms in a study now out in Nature Human Behaviour. Because authors self-identified their demographic characteristics in our survey, we were able to examine misclassification in a trans- and nonbinary-inclusive way, as well as differences in misclassification by nationality, sexuality, disability, parental education, and name changes.

We found significant differences in error rates across subgroups: Chinese women, for example, were misgendered 43% of the time, and Black people from highly educated families had their race misclassified 80% of the time. These disparities highlight fundamental problems with the information content of names, rooted in complex cultural naming practices that vary both across and within demographic groups. Name-based ascription algorithms should therefore be used with caution, and researchers should be aware of their limitations and potential biases.

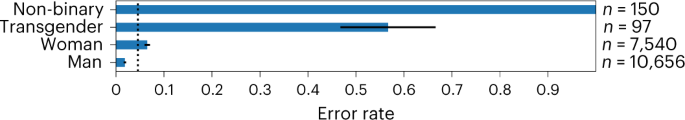

We found that gender recognition algorithms are prone to misgendering individuals, particularly women, trans people, nonbinary people, people with disabilities, and Asian people. Using the most popular tool, genderize.io, we found an overall error rate of 4.6% in gender prediction. But that low overall error rate masks important heterogeneity: women are misgendered 3.5 times more often than men, and nonbinary people are always misgendered, since genderize.io can only return "male" or "female".
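To make the point about a low overall error rate masking heterogeneity concrete, here is a minimal sketch, not the study's analysis code, that computes an overall error rate and per-group misgendering rates from pairs of self-identified and predicted gender. The data are invented for illustration, and `overall_error_rate` and `misgendering_rates` are hypothetical helper names.

```python
from collections import Counter


def overall_error_rate(records):
    """Share of all records whose predicted gender differs from the self-identified one."""
    wrong = sum(1 for self_id, predicted in records if predicted != self_id)
    return wrong / len(records)


def misgendering_rates(records):
    """Per-group error rate, grouped by self-identified gender.

    A binary classifier only ever predicts "woman" or "man", so every
    nonbinary respondent is counted as misgendered by construction.
    """
    totals = Counter()
    errors = Counter()
    for self_id, predicted in records:
        totals[self_id] += 1
        if predicted != self_id:
            errors[self_id] += 1
    return {group: errors[group] / totals[group] for group in totals}


# Invented toy data (hypothetical): (self-identified gender, predicted gender) pairs.
records = (
    [("man", "man")] * 49 + [("man", "woman")] * 1
    + [("woman", "woman")] * 42 + [("woman", "man")] * 3
    + [("nonbinary", "man")] * 3 + [("nonbinary", "woman")] * 2
)

print(f"overall: {overall_error_rate(records):.1%}")
for group, rate in misgendering_rates(records).items():
    print(f"{group}: {rate:.1%}")
```

On this toy data the overall error rate is 9.0%, yet women are misgendered at 6.7% versus 2.0% for men, and every nonbinary respondent is misgendered (100.0%). Reporting only the pooled figure would hide all of that.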

We also analyzed race/ethnicity and found that racial misclassification remains dramatic even by the most generous standards. All of the algorithms we tested showed similar patterns, with overall accuracies ranging from 47% to 86% when predicting the broad US Census racial/ethnic categories of social science authors from their names. Black, Middle Eastern and North African, and Filipino people were misclassified 55% to 80% of the time, while White, Asian, Chinese, Vietnamese, and Korean people were misclassified less than 10% of the time. Racial misclassification rates also varied by sexuality and name changes, with different factors driving these errors for each group.

We explore how naming and demographic membership shape each other in complex ways, focusing specifically on name-based demographic ascription algorithms. The accuracy of these algorithms is approaching a limit beyond which additional reference data or more advanced modeling cannot improve performance. A major reason is that some names carry little demographic information, and this affects groups unequally. For instance, Chinese, Vietnamese, and Korean people are misgendered more often than Indian, Japanese, and other Asian-origin people because of differences in how their names are represented in English-language databases. By contrast, naming systems common in Spanish carry more gender information into English databases and analyses, which reduces misgendering. Names that are rare, shared across multiple groups, or stripped of their demographic correlations when transliterated into Roman characters likewise affect groups unequally.

The unequal demographic information content of names that produces this heterogeneity in error rates is not only a linguistic problem but also a sociocultural one. Because demographic characteristics are correlated with one another in the social world, these errors can introduce significant confounding, posing serious challenges for researchers trying to study demographic differences.

Name-based demographic inference has both advantages and limitations, and researchers should be aware of its potential ethical and validity concerns. To help researchers use this method carefully and responsibly, the paper recommends five principles for conducting name-based demographic inference, including critical refusal in cases where name-based inference may not be justified, using domain expertise to tailor inference to the researcher's population of interest, and using name-based demographic estimates in aggregate measures rather than as individual classifications. Following these principles should lead to more accurate and ethical results. These findings also underscore the need for continued improvement and evaluation of name-based imputation algorithms to ensure accurate and equitable results, and we include a number of recommendations for algorithm developers as well.
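One common way to act on the "aggregate rather than individual" principle is to average per-person probabilities instead of thresholding each one into a label. The sketch below contrasts the two under stated assumptions: the scores are invented, the function names are hypothetical, and averaging only gives a sensible estimate if the scores are calibrated for the population of interest. It is an illustration, not the procedure from the paper.

```python
def share_by_thresholding(probs, threshold=0.5):
    """Classify each person individually (P(woman) > threshold means woman), then count."""
    return sum(1 for p in probs if p > threshold) / len(probs)


def share_by_averaging(probs):
    """Aggregate estimate: the mean of P(woman), i.e. the expected share of women.

    Assumes the probabilities are calibrated for the population being
    studied; if they are not, this estimate is biased too.
    """
    return sum(probs) / len(probs)


# Invented P(woman) scores for ten authors (hypothetical). Five
# low-information names sit just above 0.5, so thresholding turns weak
# evidence into confident individual labels.
probs = [0.9, 0.6, 0.6, 0.6, 0.6, 0.6, 0.1, 0.1, 0.1, 0.1]

print(f"thresholded: {share_by_thresholding(probs):.0%}")
print(f"averaged:    {share_by_averaging(probs):.0%}")
```

Here thresholding reports 60% women, while averaging the same scores gives 43%: every 0.6 is rounded up to a full "woman" under thresholding, whereas averaging keeps the uncertainty attached to low-information names.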

The use of computer algorithms in important decisions such as job applications, social media profiling, market research, and diversity evaluations, not to mention scientific research, can lead to biased outcomes, an unequal distribution of harm, and incorrect inferences. Incomplete demographic data led to the rise of imputation algorithms, but examining these tools carefully is essential for ensuring responsible, valid, and just methodologies.

