"Where" in the brain enables "who": spatial constraints on human face recognition

Published in Social Sciences

Humans are incredibly skilled at identifying faces: although each face contains the same features in roughly the same locations, we are finely attuned to the minute differences between individuals. We recognize thousands of unique people over the course of our lifetime, and do so with considerably greater ease than we recognize members of other visual object categories, like individual cars or plants. This is perhaps unsurprising given the importance of faces in human interactions and communication, which enable not only socialization but also broad social learning. Indeed, decades of research have examined face recognition behavior, and how it might be unique compared to other forms of visual recognition.

For the last 50 years, researchers have noted that we are much worse at recognizing faces rotated upside-down, and that this impairment is stronger for faces than for other categories of objects. This has been conceptualized as a breakdown of holistic face processing – the idea that rather than recognizing people based on individual features of their faces such as eyes, noses, and mouths, we recognize faces as a whole. Indeed, we’re far better at distinguishing whole faces than individual features. While the idea of holistic processing is incredibly influential in behavioral research on human perception, we have had, until now, very little insight into how this is actually achieved by the human brain.

The core visual recognition system in the human brain is hierarchical – visual information is first processed in low-level visual areas of the brain, which respond to simple features, like orientation and contrast, and ends up in high-level visual regions, which respond to complex visual objects, places, and, of course, faces. In low-level visual regions, neurons respond to visual inputs only in highly localized regions of the visual field. That is, each neuron sees the world through a small aperture, which scientists call a receptive field. While each neuron has a receptive field that responds to input at a different location in the visual field, the receptive field aperture of neurons in low-level regions is small, so each neuron “sees” only a small portion of the world at a time, and does not respond when inputs fall outside of its receptive field. In contrast, it’s generally thought that neurons in high-level regions have large receptive field apertures and are thus not particularly selective to visual space, instead responding to objects at many locations – after all, we need to recognize people no matter where in our field-of-view they appear.

We started this project from a basic question: is there spatial processing by receptive fields in high-level visual areas, and if so, what is it used for? Is this just a vestigial property of neural responses, or does it actively enable and constrain how we recognize faces? Because neurons with similar receptive fields are clustered together in the brain, we can use functional magnetic resonance imaging (fMRI) to measure population receptive fields (pRFs) throughout the brains of human participants, looking both across and within individual visual regions. Using this approach, in the current project we sought, for the first time, to link spatial processing in high-level visual regions to active recognition behavior. We were particularly interested in the behavioral face inversion effect described above, which psychologists have described as a failure of holistic processing, that is, of integrating information across multiple features of a face.

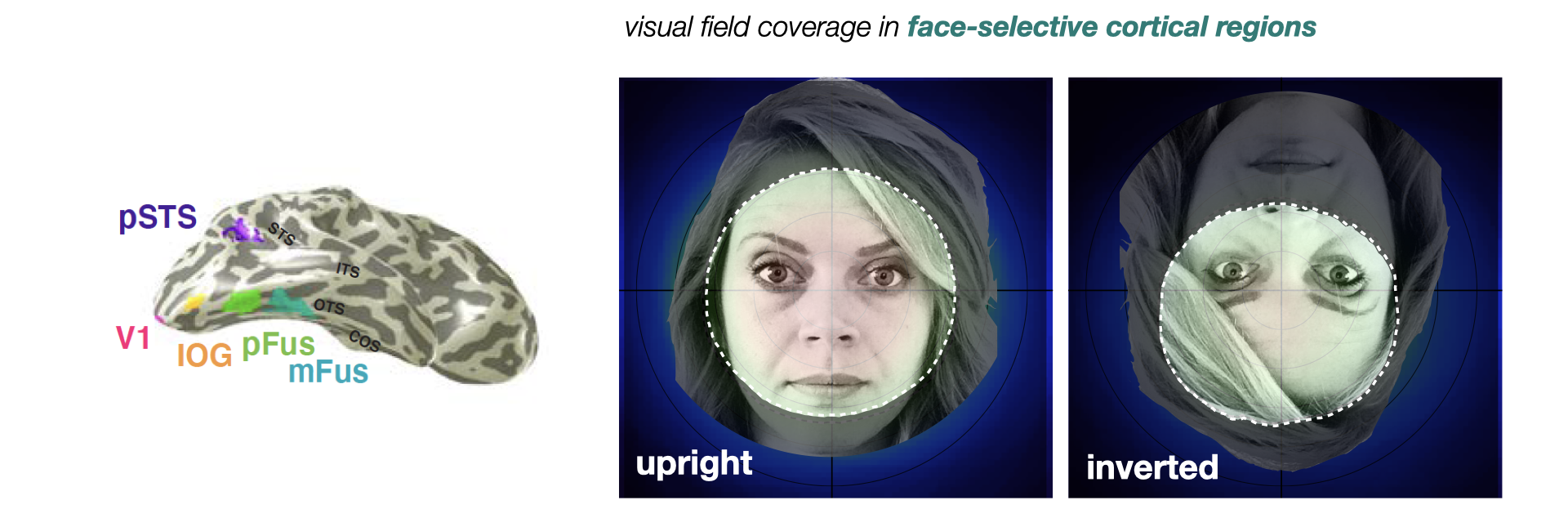

To answer this question, we performed a simple experiment: we mapped pRFs in face-selective regions, showing face stimuli at randomized positions over time to tile the visual field; doing so allowed us to use a computational pRF model to derive the region in visual space that drives neural activity in each fMRI voxel (3D pixel). To estimate how spatial processing may change when faces are inverted, we used both upright and inverted faces in mapping, and estimated the pRF model independently for each face orientation. We reasoned that if inverting a face disrupts holistic processing across facial features, then pRFs mapped with inverted faces should be altered relative to mapping with upright faces. Indeed, we found this to be the case: pRFs in several distinct face-selective regions – specifically, the two located within a specific fold of the brain called the fusiform gyrus – were smaller in span and shifted downward with face inversion. Even when considering the processing power afforded by all of the neurons in these face-selective regions, we found profound differences in the coverage of visual space when participants saw upright versus inverted faces. When we view upright faces, neurons in these regions “see” a large portion of the visual field, centered where we focus our gaze; when faces are inverted, these same regions “see” a much smaller portion of the visual field, and this coverage is shifted downward below our gaze (see Figure below). While the former appears to adaptively cover – and thus process – all of the features of an upright face, the latter coverage would only span a limited portion of an inverted face, allowing less integration of information across features. Interestingly, this change does not seem to depend on the active task of recognizing a face, but rather happens anytime we see an inverted face. This may be shaped by our extensive experience with upright-oriented faces throughout the lifespan.
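The mapping logic above can be illustrated with a toy example. The following is a minimal sketch, not the model used in the study: it assumes a pRF can be approximated by an isotropic 2D Gaussian, that a voxel's response is the overlap between the stimulus aperture and that Gaussian, and that parameters are chosen by a coarse grid search. The function names (`gaussian_prf`, `predict`, `fit_prf`), the circular apertures, and all numeric values are hypothetical, and real fMRI fitting would also need to account for the hemodynamic response and measurement noise.

```python
import numpy as np

def gaussian_prf(xs, ys, x0, y0, sigma):
    """Isotropic 2D Gaussian pRF evaluated on a visual-field grid (degrees)."""
    return np.exp(-((xs - x0) ** 2 + (ys - y0) ** 2) / (2 * sigma ** 2))

def predict(stimuli, prf):
    """Predicted response per stimulus: overlap of each aperture with the pRF."""
    return stimuli.reshape(len(stimuli), -1) @ prf.ravel()

def fit_prf(stimuli, responses, xs, ys, centers, sigmas):
    """Grid search for the (x0, y0, sigma) whose prediction best correlates with the data."""
    best_params, best_r = None, -np.inf
    for x0 in centers:
        for y0 in centers:
            for sigma in sigmas:
                pred = predict(stimuli, gaussian_prf(xs, ys, x0, y0, sigma))
                r = np.corrcoef(pred, responses)[0, 1]
                if r > best_r:
                    best_params, best_r = (float(x0), float(y0), float(sigma)), r
    return best_params, best_r

# Synthetic "mapping experiment": face-sized circular apertures shown at
# random positions that tile the visual field (all values are made up).
axis = np.linspace(-5, 5, 41)
xs, ys = np.meshgrid(axis, axis)
rng = np.random.default_rng(0)
positions = rng.uniform(-4, 4, size=(200, 2))
stimuli = np.array(
    [(xs - px) ** 2 + (ys - py) ** 2 <= 1.0 for px, py in positions], dtype=float
)

# Simulate one voxel with a known pRF, plus a little noise.
true_params = (0.5, -1.0, 1.5)  # hypothetical x0, y0, sigma in degrees
clean = predict(stimuli, gaussian_prf(xs, ys, *true_params))
responses = clean + rng.normal(0, 0.02 * clean.std(), size=clean.shape)

centers = np.arange(-3.0, 3.5, 0.5)
sigmas = np.array([0.5, 1.0, 1.5, 2.0, 2.5])
best_params, best_r = fit_prf(stimuli, responses, xs, ys, centers, sigmas)
print(best_params, round(best_r, 3))
```

Running the same fit separately on responses to upright and to inverted faces, as described above, would yield two sets of pRF estimates per voxel whose sizes and positions can then be compared.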

To us, these findings suggested that the reason we struggle to recognize faces when they’re inverted may be this shift in how face-selective regions sample visual space, and which features of inverted faces subsequently aren’t “seen” by face-selective neurons. Intriguingly, this hypothesis suggests that we may be able to improve recognition of inverted faces by positioning them slightly lower and to the left of participants’ center of gaze, at a position that best matches where inverted-mapped pRFs were shifted.

We used simulations of our neural data to predict the location in space where the features of an inverted face would best overlap the visual field coverage by pRFs for inverted faces. Then, in a separate behavioral experiment outside of the scanner, we measured our participants’ ability to recognize upright and inverted faces at the “optimal” location suggested by the data, slightly lower-left of center. We found that indeed, our participants were less impaired in recognizing inverted faces at the neurally predicted location. While the task of active recognition undoubtedly involves additional complex neural function, we now think that spatial processing by these regions serves as a kind of hardware on which behavior may operate.
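One way to picture this kind of simulation is as a search over candidate face positions. The sketch below is illustrative only and makes several assumptions that are not taken from the study: coverage is defined as the maximum across a handful of Gaussian pRFs, a face is reduced to four feature points (two eyes, nose, mouth), and the score is the mean coverage at those points. The pRF parameters, feature offsets, and function names are all hypothetical.

```python
import numpy as np

def coverage_map(xs, ys, prfs):
    """Visual field coverage, taken here as the max across Gaussian pRFs."""
    maps = [
        np.exp(-((xs - x0) ** 2 + (ys - y0) ** 2) / (2 * s ** 2))
        for x0, y0, s in prfs
    ]
    return np.max(maps, axis=0)

def best_face_position(cov, axis, features, candidates):
    """Pick the face center whose features land on the highest mean coverage."""
    def sample(x, y):
        # Nearest grid point; rows index y and columns index x.
        return cov[np.abs(axis - y).argmin(), np.abs(axis - x).argmin()]

    scores = [
        float(np.mean([sample(cx + fx, cy + fy) for fx, fy in features]))
        for cx, cy in candidates
    ]
    return candidates[int(np.argmax(scores))], scores

axis = np.linspace(-5, 5, 101)
xs, ys = np.meshgrid(axis, axis)

# Hypothetical pRFs (x0, y0, sigma in degrees) shifted down and to the left,
# standing in for estimates obtained with inverted faces.
inverted_prfs = [(-0.5, -0.5, 1.0), (-1.0, -0.5, 0.8), (-0.5, -1.0, 1.2), (-1.0, -1.0, 1.0)]
cov = coverage_map(xs, ys, inverted_prfs)

# Hypothetical feature offsets for an inverted face, relative to its center:
# eyes below the center, mouth above it.
inverted_features = [(-0.6, -0.5), (0.6, -0.5), (0.0, 0.0), (0.0, 0.8)]

steps = np.arange(-2.0, 2.5, 0.5)
candidates = [(float(cx), float(cy)) for cx in steps for cy in steps]
best_center, scores = best_face_position(cov, axis, inverted_features, candidates)
print(best_center)
```

With these made-up pRFs the best-scoring center falls below and to the left of fixation, which mirrors the qualitative prediction described above; the actual predicted location depends entirely on the measured pRFs.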

This series of experiments and simulations provides three key insights into the human brain. First, we experimentally and computationally explain the influential but neurally elusive concept of holistic face processing, demonstrating that pRFs in face-selective regions actively enable this behavior. We also demonstrate for the first time the functional utility of spatial information in the final stages of the visual hierarchy for recognition, which is traditionally thought to determine “what” we’re seeing, rather than “where.” Finally, we propose that the population receptive field model, which can precisely quantify processing across the brain, can be a powerful tool for researchers across domains to connect neural computations to behavior.

Human face recognition is a remarkable feat: we distinguish thousands of individuals based on millimeter-scale differences in facial features, and do so effortlessly and efficiently. The current study opens the door to understanding this incredibly complex behavior through the computations done by populations of neurons; it suggests that even basic neural properties, like the region that a given neuron “sees,” can have a profound impact on how we perceive the world.

Banner image: Chuck Close, 86th Street Subway Station Portraits: "Kara Walker". Photo by the New York Metropolitan Transit Authority, licensed for reuse under Creative Commons.


Nature Communications

An open access, multidisciplinary journal dedicated to publishing high-quality research in all areas of the biological, health, physical, chemical and Earth sciences.
