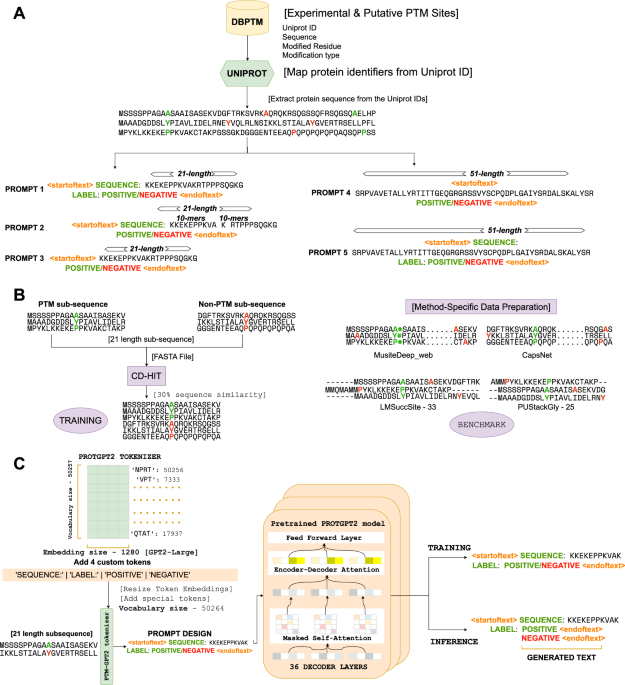

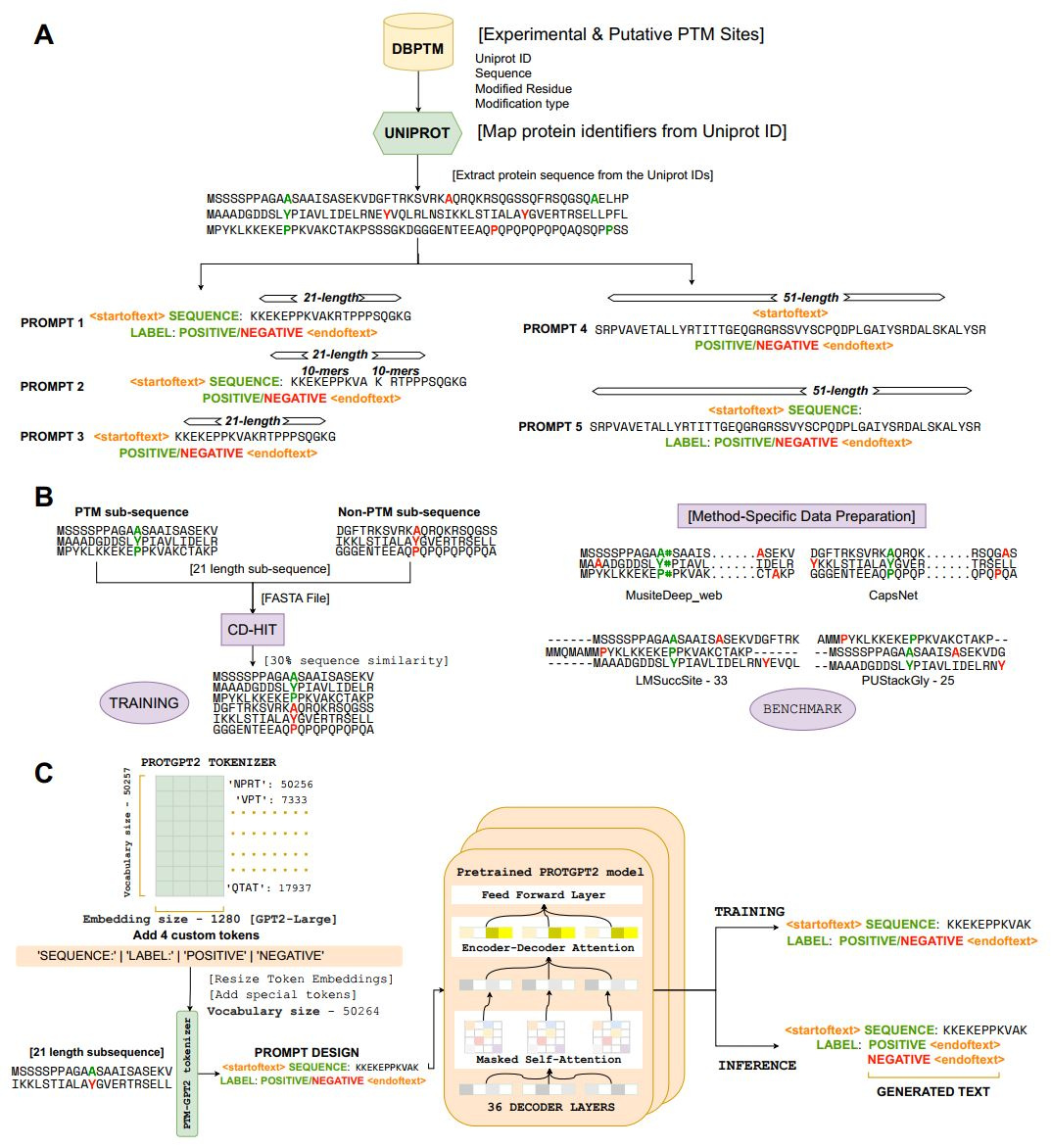

PTMGPT2: An Interpretable Protein Language Model for Enhanced Post-Translational Modification Prediction

PTMGPT2 uses a GPT-based architecture and prompt-based fine-tuning to predict post-translational modifications (PTMs). It outperforms existing methods across 19 PTM types and supports interpretability and mutation analysis, which can aid research into protein function and disease.

Published in Cell & Molecular Biology and Computational Sciences


Nature Communications

An open access, multidisciplinary journal dedicated to publishing high-quality research in all areas of the biological, health, physical, chemical and Earth sciences.

